SAN FRANCISCO - Today's CT scanners can translate, at best, about two-thirds of the signal and dose they output into their images. But continued progress toward sharper images and lower dose will require future generations of CT scanners to do better -- and within a few years they will, according to an opening-day talk at the International Symposium on Multidetector-Row CT (MDCT).

As robust as CT development has been, coming improvements in scanner design will tread much farther down the road toward perfect efficiency, said Norbert Pelc, ScD, who chairs the department of bioengineering at Stanford University. Future scanners will incorporate a broad range of more efficient technologies, including energy-discriminating photon-counting detectors, dynamic bow-tie filters, and advanced reconstruction techniques.

"The question for this talk is, is there some kind of design that can address some of the limitations" of current scanners -- "and what might we gain from it?" he asked. The talk, he cautioned, was "not intended to describe the next generation of scanners produced by the vendors, but maybe the one after that."

Sources of inefficiency

Today's scintillator-photodiode detectors are an important source of inefficiency owing to their very modest geometric efficiency of about two-thirds. Their noise also limits the possibilities for higher spatial resolution, Pelc said. Quantum noise dominates at high dose levels, but electronic noise takes over as dose levels drop.

"As we go down to very low signals, electronic noise becomes the limit to the precision of our measurements, and not quantum noise anymore," he said. Put them together "and we end up with a dose efficiency that is, at best, somewhere in the 60% to 70% range, and at very low signal levels can be much, much worse."
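The interplay Pelc describes can be illustrated with a toy model. The sketch below is not from the talk: it assumes Poisson quantum noise, additive electronic noise expressed in photon-equivalent units, and a geometric efficiency of 0.67. The function name and the noise level of 10 photon-equivalents are hypothetical, chosen only for illustration.

```python
def dose_efficiency(incident_photons, geometric_efficiency=0.67, electronic_sigma=10.0):
    """Fraction of the ideal SNR^2 retained by the detector (toy model).

    electronic_sigma is an assumed noise level in photon-equivalent units.
    """
    # Only a fraction of the incident photons lands on active detector area.
    detected = geometric_efficiency * incident_photons
    # Poisson (quantum) variance equals the detected count; electronic noise adds to it.
    variance = detected + electronic_sigma ** 2
    snr_squared = detected ** 2 / variance
    # An ideal detector would achieve SNR^2 equal to the incident photon count.
    return snr_squared / incident_photons


for n in (1e6, 1e4, 1e3, 1e2):
    print(f"{n:>9.0f} incident photons -> dose efficiency {dose_efficiency(n):.2f}")
```

In this model the efficiency approaches the geometric efficiency at high signal and collapses once the electronic noise variance becomes comparable to the detected photon count.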

Counting photons

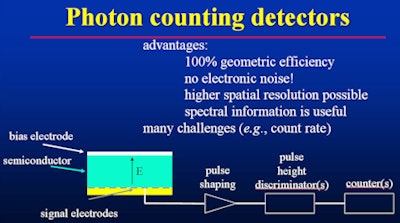

Photon-counting detectors can be a way of getting around both the electronic and quantum noise problems, Pelc said. In a direct-conversion photon-counting detector, the detector isn't pixelated but rather comes in a solid block, and the signals from different cells are kept apart from each other by the electric field imposed on the semiconductor. The result is 100% geometric efficiency, with no gaps between cells.

"If we take the signals from this direct-conversion detector, shape them, and look for blips in the signal that exceed a threshold, then we are counting individual photons, and as long as the photon exceeds that threshold and the threshold is set high enough, we will have no electronic noise," he said. In addition, the cells comprising the detectors can be made much finer, which again permits higher spatial resolution.

A number of challenges also face the development of photon-counting detectors. "One of them is what happens when photons come too close together in time," Pelc said, referencing the problem of pulse pile-up and count loss described by Taguchi et al (2013).

"If a single photon comes in isolated from all other events, we can measure the pulse shape, but if two of them come close together in time, we may not be able to resolve that and their pulse type gets added," he said. When this occurs, at least two bad things happen: a photon is missed, and the energy is measured incorrectly.

There are also situations in between the correctly measured photon and the two incorrectly measured photons, where the signal is simply distorted. "At very high flux, you start missing photons and distorting the spectrum," he said. So while photon-counting detectors have an efficiency closer to 100% than the roughly 60% of current detectors, that efficiency falls off again as photons start getting missed at very high flux rates.
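Count loss at high flux is often described with two textbook dead-time models, paralyzable and nonparalyzable. The sketch below uses those idealized models rather than anything presented in the talk, and the 100-ns dead time is a hypothetical value picked for illustration. It ignores the spectral distortion that pile-up also causes.

```python
import math


def nonparalyzable_rate(true_rate, dead_time):
    """Recorded rate when the detector simply ignores photons during dead time."""
    return true_rate / (1.0 + true_rate * dead_time)


def paralyzable_rate(true_rate, dead_time):
    """Recorded rate when every arriving photon restarts the dead time."""
    return true_rate * math.exp(-true_rate * dead_time)


DEAD_TIME_S = 100e-9  # hypothetical per-pulse dead time, in seconds

for true_rate in (1e5, 1e6, 1e7, 5e7):  # photons per second per detector cell
    kept_np = nonparalyzable_rate(true_rate, DEAD_TIME_S) / true_rate
    kept_p = paralyzable_rate(true_rate, DEAD_TIME_S) / true_rate
    print(f"{true_rate:.0e} photons/s: nonparalyzable keeps {kept_np:.1%}, "
          f"paralyzable keeps {kept_p:.1%}")
```

Both models count nearly every photon at low rates and lose a growing fraction as the true rate approaches the inverse of the dead time, which is the falloff described above.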

"So if we are going to use these photon-counting detectors, we have to make sure to protect them from these very high flux rates," Pelc said. "If we're able to find the sweet spot for imaging and avoid these extremes, then the spectral information comes for free." And that's what developers are promising, he added.

Photon-counting detectors offer significant benefits, along with challenges. Image courtesy of Norbert Pelc, ScD.

Photon weighting

An additional advantage of photon-counting detectors is that photons can be weighted appropriately, with low-energy photons weighted more than high-energy photons, yielding "significant improvements in dose-efficiency if we can make photon-counting detectors work," Pelc said.
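Why weighting matters can be seen in a small worked example. The sketch below assumes independent Poisson counts in a few energy bins and a contrast that shrinks with energy; the bin energies, counts, and contrast values are made up for illustration and are not taken from the talk or from the papers it cites. It compares weights proportional to energy (as in an energy-integrating detector), equal weights (simple counting), and weights proportional to each bin's contrast (the optimum for this simple model).

```python
import math


def snr(weights, contrasts, counts):
    """SNR of a weighted sum of independent Poisson-distributed energy bins."""
    signal = sum(w * c * n for w, c, n in zip(weights, contrasts, counts))
    noise = math.sqrt(sum(w * w * n for w, n in zip(weights, counts)))
    return signal / noise


# Hypothetical four-bin spectrum; contrast falls as photon energy rises.
energies_kev = [30, 50, 70, 90]
counts = [1000, 1000, 1000, 1000]
contrasts = [0.04, 0.02, 0.01, 0.005]

snr_integrating = snr(energies_kev, contrasts, counts)   # weight proportional to energy
snr_counting = snr([1, 1, 1, 1], contrasts, counts)      # every photon weighted equally
snr_optimal = snr(contrasts, contrasts, counts)          # weight proportional to contrast

print(f"energy integrating: {snr_integrating:.3f}")
print(f"simple counting:    {snr_counting:.3f}")
print(f"optimal weighting:  {snr_optimal:.3f}")
```

With these illustrative inputs, favoring the low-energy bins gives the highest SNR and energy integrating the lowest, matching the ordering Pelc describes. The specific ratios depend entirely on the assumed spectrum and contrast.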

A 2009 paper by Schmidt et al calculated a contrast-to-noise improvement of 1.25 compared to current detectors with simple photon counting; the improvement rose to 1.57 with image-based energy-discriminating photon counting and 1.59 using optimal projection-based energy-discriminating photon counting, according to Pelc.

Counting ability in terms of speed is another development challenge for the detectors; the problem might be solved by slowing down the scanner -- for example, by cutting gantry rotation speeds to modest levels. As a result, the photon-counting detectors wouldn't have to count as fast, permitting the mA to be dialed down.
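The arithmetic behind this trade-off is simple. The sketch below assumes the exposure per rotation (in mAs) is held fixed and that the photon flux reaching the detector scales with tube current; the 200-mAs exposure and the rotation times are hypothetical examples.

```python
def tube_current_ma(exposure_mas, rotation_time_s):
    """Tube current needed to deliver a fixed exposure in one rotation."""
    return exposure_mas / rotation_time_s


EXPOSURE_MAS = 200.0  # hypothetical exposure per rotation

fast = tube_current_ma(EXPOSURE_MAS, 0.25)  # fast rotation
slow = tube_current_ma(EXPOSURE_MAS, 1.0)   # slower rotation

# Assuming flux is proportional to mA, the detector's peak count rate
# drops by the same factor as the tube current.
print(f"0.25-sec rotation: {fast:.0f} mA")
print(f"1-sec rotation:    {slow:.0f} mA ({fast / slow:.0f}x lower count rate)")
```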

In a related problem, today's detectors have imperfect and count-rate-dependent energy response, which can be quite challenging, he said. Several vendors, including Philips Healthcare, GE Healthcare, and Siemens Healthcare, have long been working on solutions that optimize detector efficiency. And while great progress has been made, the first commercial photon-counting detectors are unlikely to be incorporated into the fastest and widest-beam scanners available today because of these challenges. And they will be expensive, Pelc added.

Photon-counting detectors, like all detectors, also face a radiation scatter penalty that increases with greater z-axis coverage, Pelc said, referencing a 2008 paper by Vogtmeier et al. "One way to reduce the scatter problem is to narrow the beam, and this is another reason why they may want to build systems where they don't all have 16 cm of coverage" per rotation, Pelc said. Additionally, steps will need to be taken to control the flux distribution on the patient.

Dressing up the bow tie

Sculpting and tailoring the illumination directed at the patient to optimize the exposure is a lesson that was already learned with the very difficult-to-build inverse-geometry scanner. This problem was successfully addressed in the "dynamic bow-tie" filter developed by Scott Hsieh and colleagues, Pelc said.

The dynamic bow tie consists of triangular wedges that move precisely to control the illumination field, both as a function of position within the fan beam and in sync with the gantry rotation. In this way, the dynamic bow tie is able to change its attenuation to customize the illumination field and more efficiently measure the projections.
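The idea of tailoring attenuation ray by ray can be sketched with the Beer-Lambert law. This is a simplified illustration, not the wedge design from Hsieh and colleagues: it assumes a monoenergetic beam, a water-equivalent patient, and a single filter material. The attenuation coefficients, flux values, and path lengths below are assumed or hypothetical.

```python
import math

MU_WATER_PER_CM = 0.2   # assumed, roughly water at diagnostic energies
MU_FILTER_PER_CM = 1.0  # hypothetical filter material


def filter_thickness_cm(patient_path_cm, incident_flux, target_flux):
    """Filter thickness that caps the flux reaching the detector at target_flux."""
    unfiltered = incident_flux * math.exp(-MU_WATER_PER_CM * patient_path_cm)
    if unfiltered <= target_flux:
        return 0.0  # the patient already attenuates this ray enough
    return math.log(unfiltered / target_flux) / MU_FILTER_PER_CM


INCIDENT_FLUX = 1e9  # hypothetical photons per second per ray
# Cap every ray at the flux that survives a 30-cm central path.
TARGET_FLUX = INCIDENT_FLUX * math.exp(-MU_WATER_PER_CM * 30.0)

for path_cm in (0.0, 10.0, 20.0, 30.0):  # edge of the fan beam to the center
    thickness = filter_thickness_cm(path_cm, INCIDENT_FLUX, TARGET_FLUX)
    print(f"patient path {path_cm:4.1f} cm -> filter thickness {thickness:.1f} cm")
```

Rays that pass through little or no tissue get the thickest filter, which keeps the peak count rate at the detector down. A dynamic filter would recompute this profile as the gantry rotates and the patient's cross-section changes.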

Putting it all together

Summing up, Pelc said his proposal for a more efficient CT scanner consists of several components working together, including the following:

- An energy-discriminating photon-counting detector (benefits: no electronic noise, higher geometric efficiency, optimal energy weighting)

- Dynamic bow tie (reduce dose, lower peak count rate)

- Slower gantry rotation speed (e.g., 1 sec, control count rate)

- Modest beam width (e.g., 1 cm, mitigate scatter and cost)

- No (or at least a coarse) antiscatter grid to eliminate the associated geometric inefficiencies

- Advanced reconstruction

In particular, the dynamic bow tie is a simple solution -- the low-hanging fruit of the more efficient scanner, he called it. The dynamic bow tie will be very helpful because it lowers the peak count rate and eases the workload of the photon-counting detector, according to Pelc.

The proposed scanner would have twice the spatial resolution of any clinical scanner currently on the market, and it represents "the largest spatial resolution improvement we've had since 1980," leading to clinical benefits so substantial they cannot be predicted, he said. And, of course, spectral data would be available on all scans.

"I think the speed of this scanner would be sufficient for most applications, except for cardiac and whole-organ perfusion studies, which it would not support," Pelc said. And it will do all these things "at about one-fifth the dose of current systems, with comparable or better low-contrast performance."