An artificial intelligence (AI) algorithm may be able to help overcome one of the biggest issues limiting the clinical use of radiomics in CT -- the low reproducibility of quantitative imaging features on images from different reconstruction algorithms, according to research published online June 18 in Radiology.

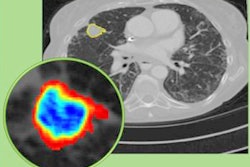

A team of researchers led by Jooae Choe from Asan Medical Center in Seoul, South Korea, trained a convolutional neural network (CNN) to convert chest CT images between two different reconstruction algorithms, or kernels. The team found that the deep-learning model significantly improved the reproducibility of radiomics features for pulmonary nodules on images produced by different reconstruction kernels.

"We believe that our study provides a basis for image conversion using CNNs in radiomics and that it will help promote related research," the authors wrote.

Radiomics refers to the use of algorithms to extract large volumes of data from medical images for additional analysis. But the current lack of a standardized chest CT protocol for evaluating radiomics makes it difficult to compare studies, as different reconstruction kernels can produce variance in radiomic measurements. This limits the generalizability of radiomics results, according to the researchers.

To assess the value of a CNN-based image conversion model for improving reproducibility, the researchers retrospectively evaluated 104 patients who were found to have pulmonary nodules or masses between April and June 2017 after receiving noncontrast-enhanced or contrast-enhanced axial chest CT exams at their institution. All images from the Somatom Definition Edge (Siemens Healthineers) CT scanner had been reconstructed with both a soft kernel (B30f) and a sharp kernel (B50f).

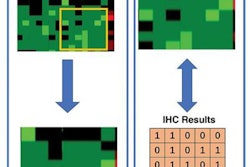

Using residual-learning techniques, a CNN was developed to generate kernel-converted images, converting images from B30f to B50f and vice versa. Two chest radiologists then segmented the pulmonary nodules in a semiautomated manner. Next, 702 radiomic features -- including tumor intensity, texture, and wavelet features -- were extracted using an open-source program.
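The article does not describe the network's architecture, but residual learning for image-to-image tasks is commonly read as training the model to predict the difference between the input and the desired output, rather than the output itself. The sketch below shows only that bookkeeping in plain Python, with no actual network; the function names and pixel values are hypothetical and are not taken from the study.

```python
# Toy illustration of the residual formulation (no neural network involved).
# Assumption: images are small 2D lists of integer pixel values.

def residual_target(source, target):
    """Difference image a residual model would be trained to predict."""
    return [[t - s for s, t in zip(src_row, tgt_row)]
            for src_row, tgt_row in zip(source, target)]

def apply_residual(source, predicted_residual):
    """Converted image = input image + predicted residual."""
    return [[s + r for s, r in zip(src_row, res_row)]
            for src_row, res_row in zip(source, predicted_residual)]

# Hypothetical 2x2 patches standing in for soft-kernel and sharp-kernel images.
soft_patch = [[10, 12], [11, 13]]
sharp_patch = [[8, 15], [12, 11]]

# With a perfect residual prediction, the converted patch equals the target.
perfect_residual = residual_target(soft_patch, sharp_patch)
converted = apply_residual(soft_patch, perfect_residual)
```

In practice the residual would come from a trained CNN rather than from the target image itself; the point is only that the model's output is added back onto its input.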

After calculating concordance correlation coefficients, the researchers found that the radiomic features were reproducible between the two readers on the same image kernel but not reproducible across different image kernels. Reproducibility improved significantly, however, on the CNN's kernel-converted images.
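For readers unfamiliar with the metric, the concordance correlation coefficient is usually given in Lin's form, which penalizes systematic offsets as well as scatter. The following is a minimal sketch of that standard formula in plain Python; the function name and sample values are illustrative assumptions, and the article does not say how the researchers computed it.

```python
from statistics import fmean

def concordance_correlation(x, y):
    """Lin's concordance correlation coefficient for two paired measurement lists.

    ccc = 2 * cov(x, y) / (var(x) + var(y) + (mean(x) - mean(y)) ** 2),
    using population (divide-by-n) variance and covariance.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must be paired and contain at least two values")
    mean_x, mean_y = fmean(x), fmean(y)
    var_x = fmean([(a - mean_x) ** 2 for a in x])
    var_y = fmean([(b - mean_y) ** 2 for b in y])
    cov_xy = fmean([(a - mean_x) * (b - mean_y) for a, b in zip(x, y)])
    denominator = var_x + var_y + (mean_x - mean_y) ** 2
    if denominator == 0:
        raise ValueError("coefficient is undefined for identical constant inputs")
    return 2 * cov_xy / denominator

# Hypothetical feature values measured on two reconstructions of the same nodules.
kernel_a = [1.0, 2.0, 3.0, 4.0]
kernel_b_shifted = [2.0, 3.0, 4.0, 5.0]  # same pattern, constant offset of 1

same = concordance_correlation(kernel_a, kernel_a)
shifted = concordance_correlation(kernel_a, kernel_b_shifted)
```

The shifted series is perfectly linearly correlated with the original, yet its concordance falls below 1 because the offset counts as disagreement. That property is what makes the metric suited to asking whether a feature takes the same value on two kernels.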

Impact of CNN on reproducibility of radiomics measures

| | On the same image kernel | On different image kernels | On CNN-converted images |
| --- | --- | --- | --- |
| Concordance correlation coefficient | 0.92 | 0.38 | 0.84 |
| Number of reproducible radiomic features | 592 of 702 (84.3%) | 107 of 702 (15.2%) | 403 of 702 (57.4%) |

In other findings, the researchers noted that texture and wavelet features are more affected by different reconstruction kernels than tumor intensity features. That's because texture and wavelet features are sensitive to changes in spatial and density resolutions, they said.
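A toy example can make that intuition concrete. In the sketch below, a one-dimensional signal is smoothed with a three-point moving average as a crude stand-in for a softer kernel; the mean intensity barely moves, while a simple neighbor-difference "texture" measure drops sharply. The signal, the filter, and both measures are hypothetical simplifications, not the radiomic features or kernels used in the study.

```python
from statistics import fmean

def smooth(signal):
    """3-point circular moving average, a crude stand-in for a softer kernel."""
    n = len(signal)
    return [(signal[i - 1] + signal[i] + signal[(i + 1) % n]) / 3
            for i in range(n)]

def mean_intensity(signal):
    """Toy intensity feature: average value of the signal."""
    return fmean(signal)

def roughness(signal):
    """Toy texture feature: mean absolute difference between circular neighbors."""
    n = len(signal)
    return fmean([abs(signal[i] - signal[(i + 1) % n]) for i in range(n)])

# Hypothetical "sharp" signal with strong pixel-to-pixel variation.
sharp_signal = [120, 80] * 8
soft_signal = smooth(sharp_signal)

intensity_change = abs(mean_intensity(soft_signal) - mean_intensity(sharp_signal))
texture_ratio = roughness(soft_signal) / roughness(sharp_signal)
```

Here the intensity feature is essentially unchanged by smoothing, whereas the texture feature shrinks to a fraction of its original value.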

In an accompanying editorial, Dr. Chang Min Park of Seoul National University Hospital said that the study represents a major step forward in the field of radiomics.

"Considering the rapid pace of deep-learning technologies today, I am certain that a variety of deep learning-based solutions will eventually solve nearly every step of the radiomics process, including CT acquisition, target identification, segmentation, and feature extraction in the exciting years to come," Park wrote.