An artificial intelligence (AI) algorithm can accurately differentiate between benign and malignant small solid renal masses on multiphase contrast-enhanced CT scans, Japanese researchers reported in a study published online January 8 in the American Journal of Roentgenology.

In a retrospective study of nearly 170 solid renal masses ≤ 4 cm in size, a team of researchers from Okayama University Hospital in Okayama found that a convolutional neural network (CNN) trained only on corticomedullary-phase CT images achieved the highest accuracy (88%) of six models; the other five were trained on other single phases or on combinations of phases. The corticomedullary model also outperformed two abdominal radiologists.

"The current study suggests that CNN models could also have a good diagnostic performance and potential to help triage patients with a small solid renal mass to either biopsy or active surveillance," wrote lead author Dr. Takashi Tanaka and colleagues.

Dynamic CT can provide useful diagnostic information to differentiate small solid renal masses, but evaluation of these images can be subjective and affected by radiologist experience. In addition, some incidentally detected small renal masses can't be clearly classified on imaging alone, according to the group.

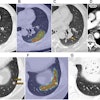

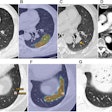

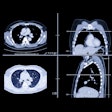

To see if deep learning could help classify these masses, the researchers gathered 1,807 image sets from 168 pathologically diagnosed solid renal masses 4 cm or smaller in 159 patients. The image sets included four CT phases: unenhanced, corticomedullary, nephrogenic, and excretory. Of the 168 masses, 136 were malignant and 32 were benign.

The researchers randomly divided the dataset into five subsets. Four of them, encompassing 48,832 images, were used for augmentation and supervised training, and the remaining 281 images served as the test set. Six deep-learning models were trained: four on images from a single phase each, one on the three contrast-enhanced phases (triphasic images), and one on all four CT phases.
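The data setup described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' code: the helper names (`split_into_five`, `train_test_from_folds`, `MODEL_CONFIGS`) are hypothetical, and the article does not say whether the split was made by patient, by mass, or by image. The sketch splits at the mass level, a common way to keep images of the same lesion out of both the training and the test set.

```python
import random

# The four CT phases named in the study.
PHASES = ["unenhanced", "corticomedullary", "nephrogenic", "excretory"]

# Six input configurations: four single-phase models, one on the three
# contrast-enhanced phases, and one on all four phases.
MODEL_CONFIGS = (
    [[p] for p in PHASES]
    + [PHASES[1:]]   # contrast-enhanced triphasic
    + [PHASES[:]]    # all four phases
)

def split_into_five(items, seed=0):
    """Randomly partition items into five roughly equal subsets.

    Hypothetical helper: the study's exact splitting procedure is not
    described in the article.
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    return [shuffled[i::5] for i in range(5)]

def train_test_from_folds(folds, test_index=4):
    """Use one subset as the test set and pool the other four for training."""
    test = folds[test_index]
    train = [x for i, f in enumerate(folds) if i != test_index for x in f]
    return train, test

# Example: 168 hypothetical mass identifiers split into train/test sets.
masses = [f"mass_{i:03d}" for i in range(168)]
train, test = train_test_from_folds(split_into_five(masses))
print(len(train), len(test))  # 135 33
```

Each entry in `MODEL_CONFIGS` lists the phases one model would receive as input; augmentation (which in the study expanded the training data to tens of thousands of images) would be applied to the training portion only.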

Two abdominal radiologists, with nine and 10 years of experience, respectively, also reviewed the test-set images from all phases, blinded to the final diagnoses and to the results of the CNN models.

The researchers found that the model trained only on corticomedullary-phase images outperformed the other five models, including the one trained on all four CT phases.

| Performance of deep-learning model trained using corticomedullary-phase CT images for characterizing small solid renal masses | |
|---|---|
| Area under the curve (AUC) | 0.846 |
| Sensitivity | 93% |
| Specificity | 67% |
| Positive predictive value | 93% |
| Negative predictive value | 67% |
| Accuracy | 88% |
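The figures in the table follow standard definitions based on counts of true and false positives and negatives, with "malignant" as the positive class. The Python sketch below spells those definitions out and shows why accuracy can reach 88% despite 67% specificity: accuracy is a prevalence-weighted average of sensitivity and specificity, and most masses in the cohort were malignant. It assumes the test set has about the same malignant fraction as the full cohort (136 of 168 masses); the article does not report test-set counts, and the function names are illustrative.

```python
def metrics(tp, fn, fp, tn):
    """Standard diagnostic metrics with 'malignant' as the positive class."""
    return {
        "sensitivity": tp / (tp + fn),  # malignant masses correctly flagged
        "specificity": tn / (tn + fp),  # benign masses correctly cleared
        "ppv": tp / (tp + fp),          # flagged masses that are malignant
        "npv": tn / (tn + fn),          # cleared masses that are benign
        "accuracy": (tp + tn) / (tp + fn + fp + tn),
    }

def accuracy_from(sensitivity, specificity, prevalence):
    """Accuracy as the prevalence-weighted average of sensitivity and specificity."""
    return prevalence * sensitivity + (1 - prevalence) * specificity

# Assumption: test-set prevalence matches the full cohort (136 of 168 malignant).
prevalence = 136 / 168
print(round(accuracy_from(0.93, 0.67, prevalence), 2))  # 0.88
```

Because roughly four in five masses were malignant, the model's 93% sensitivity dominates the overall accuracy; in a population with more benign lesions, the same sensitivity and specificity would yield lower accuracy.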

In contrast, one radiologist achieved their highest AUC (0.624) when analyzing all CT phases, while the other achieved their best AUC (0.648) when evaluating the contrast-enhanced triphasic images.
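To put these AUC values in context: AUC can be read as the probability that a randomly chosen malignant mass receives a higher malignancy score than a randomly chosen benign one, so 0.5 is chance and 1.0 is perfect separation. The Python sketch below computes AUC this way (the rank-based, Mann-Whitney formulation) with ties counted as half, which matters when readers score lesions on an ordinal scale. The scores are hypothetical, not study data, and how the radiologists' ratings were actually scored is not described in the article.

```python
def auc(pos_scores, neg_scores):
    """Rank-based AUC: fraction of (malignant, benign) pairs in which the
    malignant mass scores higher, counting ties as half a win."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))

# Hypothetical malignancy scores (not from the study).
malignant = [0.9, 0.8, 0.7, 0.4]
benign = [0.5, 0.3, 0.2]
print(auc(malignant, benign))  # 11 of 12 pairs correctly ordered
```

On this reading, the CNN's 0.846 means it ranked a malignant mass above a benign one in about 85% of such pairs, compared with roughly 62% to 65% for the radiologists.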

The authors emphasized, however, that the results should not be taken to indicate that CNN models offer diagnostic performance superior to that of radiologists.

"Rather, the study was designed to show imaging similarity between malignant and benign masses," the authors wrote. "However, by preparing and adjusting the appropriate images for training, we might be able to create more promising models to support our daily work."