An artificial intelligence (AI) algorithm for detecting cervical spine fractures may not generalize well to local patient populations, according to research published online June 11 in the American Journal of Neuroradiology.

After previously reporting that a commercial intracranial hemorrhage detection algorithm yielded lower sensitivity and positive predictive value than reported in published studies, researchers from the University of Wisconsin-Madison have now found in a retrospective study that an AI model detected only a little over half of cervical spine fractures on CT exams.

"Many similar algorithms have also received little or no external validation, and this study raises concerns about their generalizability, utility, and rapid pace of deployment," wrote the authors, led by Andrew Voter, PhD, and senior author Dr. John-Paul Yu, PhD. "Further rigorous evaluations are needed to understand the weaknesses of these tools before widespread implementation."

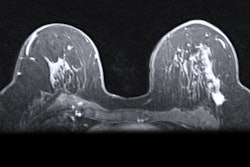

To evaluate how well AI software detects cervical spine fractures, the researchers retrospectively applied software from Aidoc to 1,904 noncontrast cervical spine CT scans of adult patients, including 122 fractures. After the algorithm and an attending neuroradiologist analyzed the studies for the presence of a cervical spine fracture, any discrepancies were independently adjudicated.

Although the software's results were concordant with those of neuroradiologists in 91.5% of cases, it missed nearly half of the cervical spine fractures and produced 106 false-positive studies:

- Sensitivity: 54.9%

- Specificity: 94.1%

- Positive predictive value: 38.7%

- Negative predictive value: 96.8%
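
The figures above can be cross-checked against the counts given in the article. The short Python sketch below rebuilds the confusion matrix from the 1,904 scans, 122 fractures, and 106 false positives. The true-positive count is not stated in the article; it is an assumption here, inferred by rounding the reported 54.9% sensitivity against the 122 fractures. Variable and function names are illustrative only.

```python
# Counts stated in the article
total_scans = 1904
fractures = 122
false_positives = 106

# Assumption: the article does not give the number of detected fractures,
# so it is inferred from the reported 54.9% sensitivity.
true_positives = round(0.549 * fractures)
false_negatives = fractures - true_positives
true_negatives = total_scans - fractures - false_positives


def pct(numerator, denominator):
    """Return a percentage rounded to one decimal place."""
    return round(100 * numerator / denominator, 1)


sensitivity = pct(true_positives, true_positives + false_negatives)
specificity = pct(true_negatives, true_negatives + false_positives)
ppv = pct(true_positives, true_positives + false_positives)
npv = pct(true_negatives, true_negatives + false_negatives)
agreement = pct(true_positives + true_negatives, total_scans)

print(f"Sensitivity: {sensitivity}%")
print(f"Specificity: {specificity}%")
print(f"Positive predictive value: {ppv}%")
print(f"Negative predictive value: {npv}%")
print(f"Overall agreement: {agreement}%")
```

Under that assumption, the reconstructed counts reproduce the reported sensitivity, specificity, predictive values, and overall concordance. They also show why high overall agreement can coexist with low sensitivity: fractures make up only a small share of the scans, so correctly read negative studies dominate the agreement figure.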

After conducting a failure-mode analysis, the researchers found that the software's diagnostic performance was not significantly associated with variables such as imaging location, scanner model, or study indication.

"However, the sensitivity was affected by patient age and characteristics of the underlying fracture, specifically the fracture acuity and location of the fracture, with chronic fractures and fractures of osteophytes and the vertebral body overrepresented among the missed fractures," Voter and colleagues wrote.

Unexpectedly, the software also produced a substantial number of false positives (106 studies), most commonly attributed to degenerative changes of the spine, according to the researchers.

"This is perhaps not surprising because degeneration occurs with aging and generates abnormalities such [as] ossicles or irregularities in the bony surface that could be mistaken for fractures," the authors wrote.

The researchers noted that although the nature of neural-network algorithms prevents full understanding of the reasons behind the lower diagnostic accuracy, their failure-mode analysis did identify several potential areas of improvement.

"Nevertheless, the overall performance of this AI [decision-support system] at our institution is different enough and raises potential concerns about the generalizability of AI [decision-support systems] across heterogeneous clinical environments and motivates the creation of data-reporting standards and standardized study design, the lack of which precludes unbiased comparisons of AI [decision-support system] performance across both institutions and algorithms," they wrote. "Adoption of a standardized design for all AI [decision-support system] algorithms will help speed the development and safe implementation of this promising technology as we aim to integrate this important tool into clinical workflow."