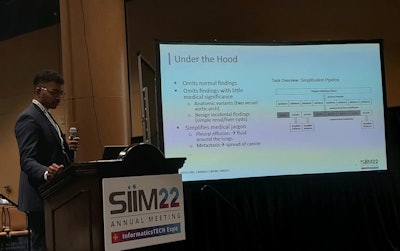

KISSIMMEE, FL - Artificial intelligence (AI) can help simplify medical language for patients undergoing chest CT imaging, a presenter explained on June 10 at the Society for Imaging Informatics in Medicine (SIIM) annual meeting.

In his talk, Pratheek Bobba from Yale University presented a proof of concept showing how publicly available AI algorithms, tuned to parameters set by the researchers, can help remove medical jargon from radiology reports and, in turn, could help patients better adhere to health guidelines.

"We think that patients who can wrap their minds around radiology findings are more likely to be better engaged and have better clinical outcomes," Bobba said.

While radiology reports contain vital health information for patients, the language used in them is typically written at a college reading level. The resulting confusion can lead patients to ignore report recommendations, which in turn leads to costly, avoidable follow-up visits.

A 2020 study found that adherence to lung cancer screening is about 55% and called for interventions aimed at patients with lower education levels.

The 21st Century Cures Act, signed into law in 2016, requires practices to make healthcare information readily available to patients. Bobba said such information also needs to be understandable.

"Given that the average U.S. resident reads at an 8th-grade reading level and the average Medicare beneficiary reads at a 5th-grade reading level, the American Medical Association recommends that health information be relayed to patients at a 4th- to 6th-grade level," Bobba said.
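The presentation as reported does not say how reading level was measured. One widely used way to put a number on the "grade level" of a text is the Flesch-Kincaid Grade Level formula, 0.39 × (words per sentence) + 11.8 × (syllables per word) − 15.59. The Python sketch below applies it, assuming a crude vowel-group syllable counter (real readability tools use dictionaries or better heuristics), so scores are approximate.

```python
import re


def count_syllables(word: str) -> int:
    """Rough syllable estimate: count vowel groups, drop a silent trailing 'e'.

    This is a heuristic assumption, not a dictionary lookup, so it will
    miscount some words (e.g. 'likely').
    """
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Approximate U.S. school grade level of `text` (Flesch-Kincaid formula)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+", text)
    if not sentences or not words:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    return (0.39 * len(words) / len(sentences)
            + 11.8 * syllables / len(words)
            - 15.59)


# Illustrative sentences written for this example, not taken from the study.
jargon = ("There is a subcentimeter noncalcified pulmonary nodule in the right "
          "upper lobe, likely representing postinflammatory sequela.")
plain = "There is a very small spot in the right lung. It is likely from an old infection."

print(round(flesch_kincaid_grade(jargon), 1), round(flesch_kincaid_grade(plain), 1))
```

With this heuristic, the jargon-heavy sentence scores well above grade 12, while the plain rewrite lands near the 4th- to 6th-grade range the AMA recommends.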

To that end, Bobba and colleagues aimed to use publicly available AI to simplify radiology reports to the point where they would need only a final accuracy check by the dictating radiologist before being released to patients.

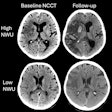

The researchers used PubMedBERT to determine whether each sentence should be included in the report. They then used the base version of BART (Bidirectional and Auto-Regressive Transformers) to simplify the sentences. Bobba et al included nearly 500 CT images from their database for the pilot study.
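The two-stage design described above (first decide which sentences to keep, then rewrite the kept ones in plain language) can be sketched as a simple pipeline. This is an illustrative sketch only: `toy_keep` and `toy_simplify` are hypothetical keyword and glossary stand-ins, not PubMedBERT or BART, and the function names are invented for this example. In a real system each stand-in would be replaced by a call to the corresponding trained model.

```python
import re
from typing import Callable, List

# Hypothetical interfaces: in the study, a PubMedBERT classifier filled the
# "keep?" role and a BART model filled the "simplify" role.
Classifier = Callable[[str], bool]
Simplifier = Callable[[str], str]


def split_sentences(report: str) -> List[str]:
    """Split on whitespace that follows sentence-ending punctuation."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", report) if s.strip()]


def simplify_report(report: str, keep: Classifier, simplify: Simplifier) -> str:
    """Stage 1: filter sentences. Stage 2: simplify each kept sentence."""
    kept = [s for s in split_sentences(report) if keep(s)]
    return " ".join(simplify(s) for s in kept)


# --- Toy stand-ins for demonstration only (NOT the study's models) ---
GLOSSARY = {"pulmonary": "lung", "nodule": "spot", "subcentimeter": "very small"}
SKIP_PREFIXES = ("technique:", "comparison:")


def toy_keep(sentence: str) -> bool:
    """Drop boilerplate sections a patient is unlikely to need."""
    return not sentence.lower().startswith(SKIP_PREFIXES)


def toy_simplify(sentence: str) -> str:
    """Swap jargon words for plain-language glossary entries."""
    def swap(match: re.Match) -> str:
        word = match.group(0)
        return GLOSSARY.get(word.lower(), word)
    return re.sub(r"[A-Za-z]+", swap, sentence)


report = ("Technique: Axial CT of the chest without contrast. "
          "There is a subcentimeter pulmonary nodule.")
print(simplify_report(report, toy_keep, toy_simplify))
```

Keeping the classifier and simplifier as plain function parameters mirrors the idea that each stage can be trained and evaluated separately, and lets either model be swapped without touching the pipeline.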

Pratheek Bobba from Yale University presented a proof of concept at the SIIM annual meeting that showed the potential of an AI model that simplifies medical language to help patients better understand radiology reports and follow their recommendations. Although more data and training are needed, Bobba and colleagues said the initial results are encouraging.

The study authors found that cross-validation following the first phase resulted in an average recall of 0.9583, a precision of 0.6494, and an F3 score of 0.9129. They concluded that while their initial results are "encouraging," larger amounts of training data are needed before the model can be implemented in clinical practice, and that patient satisfaction still needs to be measured.
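The reported "f3" figure is read here as an F-beta score with beta = 3 (an assumption; the article does not define it). F-beta combines precision and recall as (1 + β²)·P·R / (β²·P + R), and β = 3 weights recall more heavily than precision, which would fit a sentence-selection step where missing a clinically relevant sentence is worse than keeping an extra one (again an interpretation, not something the presenters stated). The sketch below computes the metrics; the function names are illustrative.

```python
from typing import Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision and recall from true-positive, false-positive, false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def f_beta(precision: float, recall: float, beta: float) -> float:
    """F-beta score; beta > 1 emphasizes recall, beta < 1 emphasizes precision."""
    if precision == 0 and recall == 0:
        return 0.0
    b2 = beta ** 2
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Plug in the reported averages (precision 0.6494, recall 0.9583).
f3 = f_beta(0.6494, 0.9583, beta=3)
f1 = f_beta(0.6494, 0.9583, beta=1)
print(round(f3, 4), round(f1, 4))
```

Plugging the averaged precision and recall into the formula gives roughly 0.915, close to but not exactly the reported 0.9129. That gap is expected if the reported value is the mean of per-fold F3 scores, since an average of F-beta scores need not equal the F-beta of averaged precision and recall. The much lower F1 (about 0.77) shows how strongly β = 3 rewards the model's high recall.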

"We also want to conduct more rigorous, quantitative analysis of the model's accuracy in addition to the qualitative parts," Bobba said. "Ultimately, we envision that this application should be able to be used for much more than just CT. We hope to expand to not only other radiology scans and modalities but also to other forms of medical imaging and scientific texts."

He told AuntMinnie.com that the team plans to expand its training database to improve the model's accuracy.

"So far, we've manually simplified about 500 chest CT reports, but we have a database of about 2,000 reports," he said.