An artificial intelligence (AI) algorithm designed to screen x-rays may improve the performance and efficiency of radiologists diagnosing major chest abnormalities in real-world settings, according to a study published August 31 in JAMA Network Open.

A team at Massachusetts General Hospital (MGH) in Boston investigated the potential of a commercially available algorithm (Insight CXR 3, Lunit) to help radiologists with varying levels of experience diagnose four major chest abnormalities. The group found that the algorithm improved the radiologists' performance and reduced reporting time.

"The chief implication of our study is the improved accuracy in detecting four target chest radiograph findings (pneumonia, lung nodule, pleural effusion, and pneumothorax) with AI-aided interpretation while improving overall efficiency," wrote corresponding author Dr. Mannudeep K. Kalra, an assistant professor of radiology at Harvard Medical School, and colleagues.

Studies have shown that concurrent use of AI algorithms can improve the performance of clinician readers when interpreting chest x-rays. Yet concerns remain over the impact of AI in the real world, since most research is conducted in simulated settings without performance tools that mimic the real-world workflow, according to the authors.

There is also a lack of evidence on the impact of AI on reader efficiency, especially in terms of the time it takes for radiologists to complete their reports, they added.

Thus, Kalra and colleagues aimed to explore the impact of AI on reader performance, both in terms of accuracy and efficiency, using Insight CXR, an algorithm that has garnered clearance for several indications in the U.S., Europe, and Asia.

The multicenter study was conducted from April to November 2021 and involved two attending radiologists, two thoracic radiology fellows, and two residents, each of whom independently completed two reading sessions: one with AI and one without. Each reader documented their findings in a customized template that reflected real-world reporting, and the time taken was recorded for each chest x-ray.

Sensitivity, specificity, and area under the receiver operating characteristic curve were calculated for each reader's target findings, with labels created by two thoracic radiologists serving as the ground truth.
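As a rough illustration of how these three metrics are computed for a single reader and a single finding, here is a minimal sketch. The labels, reader calls, and confidence scores below are hypothetical toy values, not data from the study, and the function names are illustrative only; the authors' actual statistical methods are not described in this article.

```python
# Toy example only: all values below are hypothetical, not taken from the study.

def sensitivity_specificity(truth, calls):
    """Return (sensitivity, specificity) for binary ground truth and reader calls."""
    tp = sum(1 for t, c in zip(truth, calls) if t and c)
    fn = sum(1 for t, c in zip(truth, calls) if t and not c)
    tn = sum(1 for t, c in zip(truth, calls) if not t and not c)
    fp = sum(1 for t, c in zip(truth, calls) if not t and c)
    return tp / (tp + fn), tn / (tn + fp)

def auc(truth, scores):
    """Area under the ROC curve via pairwise comparison (ties count as half)."""
    pos = [s for t, s in zip(truth, scores) if t]
    neg = [s for t, s in zip(truth, scores) if not t]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical ground truth (1 = finding present), reader calls, and reader confidence.
truth = [1, 1, 1, 1, 0, 0, 0, 0]
calls = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.9, 0.8, 0.7, 0.3, 0.1, 0.2, 0.4, 0.6]

sens, spec = sensitivity_specificity(truth, calls)
print(f"sensitivity={sens:.3f} specificity={spec:.3f} auc={auc(truth, scores):.3f}")
```

In this toy case the reader misses one of four true findings and raises one false alarm among four normals, so sensitivity and specificity are both 0.75.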

The study involved a total of 497 frontal chest x-rays from adult patients with and without the four target abnormalities. This number was chosen to represent the approximate number of chest x-rays that individual radiologists report over two full days at MGH, the authors wrote.

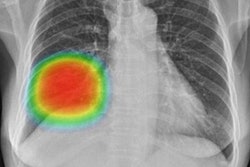

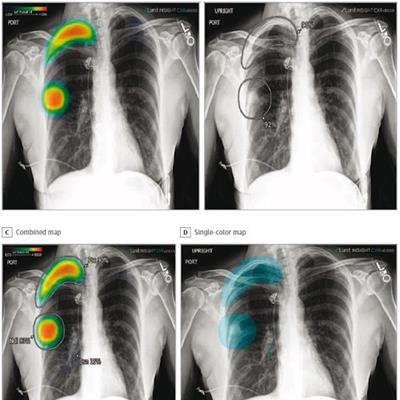

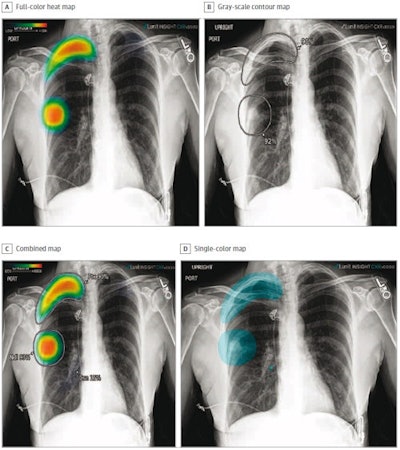

The different display modes of AI output: Shown are the color heat map (A), grayscale contour map (B), combined map (C), and single-color map (D). Image courtesy of JAMA Network Open.

According to the findings, the algorithm on its own, compared with the ground truth, achieved detection rates of 82.5% for lung nodules (94 of 114 findings), 88.7% for pneumonia (173 of 195 findings), 87.2% for pleural effusions (130 of 149 findings), and 100% for pneumothoraces (80 of 80 findings).
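The reported standalone detection rates follow directly from the counts given above. A short sketch of the arithmetic, using only the figures quoted in this article (the variable names are illustrative):

```python
# Detected findings and total ground-truth findings, as quoted in the article.
detected = {
    "lung nodule": (94, 114),
    "pneumonia": (173, 195),
    "pleural effusion": (130, 149),
    "pneumothorax": (80, 80),
}

# Detection rate = detected / total, expressed as a percentage to one decimal place.
rates = {name: round(100 * hit / total, 1) for name, (hit, total) in detected.items()}

for name, rate in rates.items():
    print(f"{name}: {rate}%")
```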

For all four target abnormalities, AI-assisted interpretation was associated with a significant improvement in the radiologists' sensitivities compared with unassisted reporting, while maintaining specificity, the authors wrote.

Performance of radiologists without and with AI for chest x-ray

| Finding | Without AI | With AI |
| --- | --- | --- |
| Nodules | 0.567 | 0.629 |
| Pneumonia | 0.673 | 0.719 |
| Pleural effusion | 0.889 | 0.895 |
| Pneumothorax | 0.792 | 0.965 |
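To make the size of each change easier to compare, the sketch below computes the absolute difference between the two columns for each finding. It uses only the values listed in the table; the table does not name the metric, so the code treats the numbers simply as the scores reported, and the variable names are illustrative.

```python
# (without AI, with AI) scores per finding, copied from the table above.
table = {
    "Nodules": (0.567, 0.629),
    "Pneumonia": (0.673, 0.719),
    "Pleural effusion": (0.889, 0.895),
    "Pneumothorax": (0.792, 0.965),
}

# Absolute gain with AI, rounded to three decimals to match the table's precision.
gains = {name: round(with_ai - without_ai, 3) for name, (without_ai, with_ai) in table.items()}

# Finding with the largest absolute gain.
largest = max(gains, key=gains.get)

for name, gain in gains.items():
    print(f"{name}: +{gain:.3f}")
print("Largest gain:", largest)
```

On these figures the gain is largest for pneumothorax (+0.173) and smallest for pleural effusion (+0.006).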

Moreover, the algorithm appears to improve efficiency in reporting, the authors noted. Specifically, reporting findings with AI assistance in the templates developed by the authors saved 3.9 seconds of reporting time compared with reporting without AI.

"These findings suggest that an AI algorithm can improve the reader performance and efficiency in interpreting chest radiograph abnormalities," the team wrote.

The authors noted that concerns about AI reducing the efficiency of radiologists are warranted for chest x-rays, given the sheer volume of these images in hospital settings, low reimbursement, and short reporting times for experienced radiologists. For wider adoption of AI algorithms in the field, it is crucial to show that AI-assisted interpretation causes no loss of accuracy and no increase in the time taken to complete reporting, they wrote.

Ultimately, the study highlights the need for future research and clinical adoption of AI algorithms with simultaneous evaluations of diagnostic accuracy and workflow efficiency, the authors suggested.

"Demonstration of noninferiority of interpretation time with AI vs non-AI interpretation will become more critical as AI algorithms expand their target findings beyond a handful to a comprehensive, multifinding detection," Kalra and colleagues concluded.