A new teaching tool called Real View Radiology (RVR) is showing early success by improving the ability of first- and second-year residents to interpret musculoskeletal MRI cases. The system was developed by a group of residents at IU Health, the health system affiliated with Indiana University (IU).

In a study published online May 12 in Academic Radiology, Real View Radiology significantly increased search pattern scores among junior residents after training, compared with results from untrained third-year residents. The trained cohort also outperformed the untrained group on overall confidence scores for knee and shoulder images.

Real View Radiology uses a series of questions to guide resident trainees through MR musculoskeletal images and direct their attention to certain aspects of the case. The program also provides immediate feedback on whether the reader's answers are correct or incorrect to help keep him or her on the right path.

RVR evolution

Real View Radiology originated two years ago, when a group of residents on IU Health's quality improvement committee began brainstorming about a better way to train residents. Dr. Jared Bailey, now a fourth-year resident and lead author of the Academic Radiology study, said it became apparent during his second year that residents needed greater exposure to cross-sectional musculoskeletal cases.

Dr. Jared Bailey from Indiana University.

"By no means did I have an understanding of how [RVR] would turn out now," Bailey said. "It is still in evolution, but it has been a blessing to a lot of people."

Traditionally, radiology residents learn through a case-based method in which they are shown a single image, such as a PowerPoint slide or textbook figure, and then given an "educational snippet" about that image, he explained. Residents are expected to transfer that knowledge to a real-world interpretation environment, where they face hundreds of images in a scrollable stack and must make the connection on their own.

"No one has ever shown that static image learning is equivalent to doing interpretation accurately in the real world," Bailey said. "The only evidence we have is that we all take our board exams and pass them, but even our board exams are not scrollable image stacks."

Until now, technology has not exposed residents to cross-sectional cases as part of their training, Bailey contended. That exposure doesn't come until they see real-life cases.

"We all know that just showing up to work every day as a resident is not sufficient, because you may be completely blind to a huge segment of pathology that you may never have seen during your training," he said.

Training technique

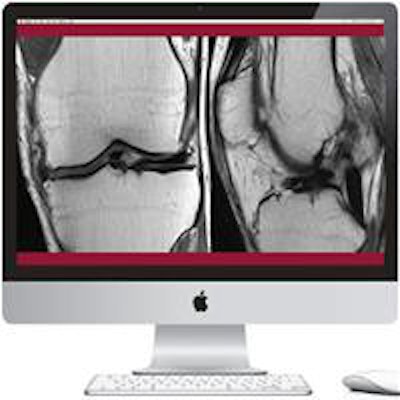

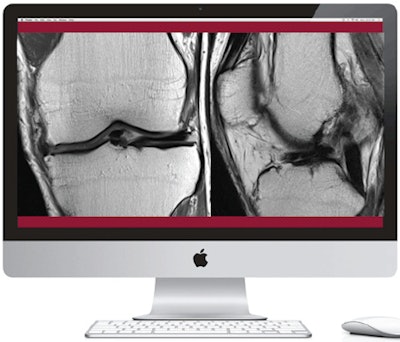

Real View Radiology uses two monitors set up side by side, just as radiologists use them. One monitor runs an open-source PACS application (OsiriX, Pixmeo) that allows scrolling, window leveling, and cross-referencing between coronal, sagittal, and axial views to see pathology on multiple planes.

The other monitor has educational software known as EDACTIC (Education Delivered and Composed Through Internet Communication). EDACTIC was developed by Dr. Mark Frank in the department of radiology and imaging services at Indiana University School of Medicine, and it features software tools designed to create and deliver educational content.

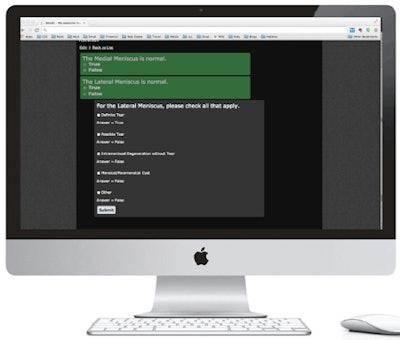

EDACTIC uses a question-based format through which the trainer offers "branch points" based on the way a resident answers certain questions. The program then takes the user down a certain path based on how the educator wants the trainee to proceed through a case.

For example, if the image is of a medial meniscus, the resident is asked if it is normal or abnormal. "If they say it's abnormal, they can then answer more specific types of questions about why they think it's abnormal," Bailey said. The feedback from the program is immediate.

The EDACTIC software was slightly modified to accommodate RVR's training program, Bailey noted. RVR plans to release its own version of the software next month.

In beginner mode, if the trainee mistakenly replies that a normal image is abnormal, RVR will not let the resident venture too far down the wrong path before revealing that he or she has made an error. In advanced mode, the learner can answer every question an examiner thinks is necessary, without interruption, until the case is finished. The trainee then submits his or her performance for grading.
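The branching behavior described above can be pictured with a short Python sketch. This is not EDACTIC's or RVR's actual code, which the article does not show; the `Question` class, the `run_case` function, and the sample meniscus case are all hypothetical names invented for illustration. The sketch assumes only what Bailey describes: each answer selects the next question, beginner mode stops the trainee at the first error, and advanced mode lets the trainee finish before grading.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Question:
    """One node in a hypothetical branching case (illustrative only)."""
    prompt: str
    # The expected answer; None means no answer on this branch is correct.
    correct: Optional[str]
    # Maps an answer to the follow-up question; answers with no entry end the path.
    branches: Dict[str, "Question"] = field(default_factory=dict)


def run_case(root: Question, answers: List[str], beginner: bool) -> List[Tuple[str, str, bool]]:
    """Walk the question tree and return (prompt, answer, is_correct) for each step."""
    log: List[Tuple[str, str, bool]] = []
    node: Optional[Question] = root
    for answer in answers:
        if node is None:
            break  # no further questions on this path
        is_correct = answer == node.correct
        log.append((node.prompt, answer, is_correct))
        if beginner and not is_correct:
            # Beginner mode: reveal the error now instead of following the wrong branch.
            break
        node = node.branches.get(answer)
    return log


# Hypothetical case: a normal medial meniscus that the trainee calls abnormal.
normal_case = Question(
    prompt="Is the medial meniscus normal or abnormal?",
    correct="normal",
    branches={"abnormal": Question(prompt="What makes it abnormal?", correct=None)},
)

answers = ["abnormal", "tear"]
beginner_log = run_case(normal_case, answers, beginner=True)
advanced_log = run_case(normal_case, answers, beginner=False)
```

With these inputs, the beginner run ends after the first wrong answer, while the advanced run records both answers and leaves them to be graded afterward.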

Real View Radiology uses two monitors side by side to train residents, with MR images on the left monitor (shown above) and a series of questions on the right (shown below). Images provided by Real View Radiology.

In the published study, Bailey and colleagues evaluated the performance of 12 first-year residents and 13 second-year residents who were trained on Real View Radiology. They compared the trained residents' results with those of third-year residents who had not used the program.

During their four-week musculoskeletal rotations, first- and second-year residents completed an average of 29.3 knee MRI cases and 17.4 shoulder MRI cases using RVR.

Overall search pattern scores for the trained cohort increased significantly both from pre- to post-training and compared with the third-year residents, the researchers found. The trained cohort's confidence scores also increased significantly from pre- to post-training for all four anatomical areas: knee, shoulder, pelvis, and ankle.

Further analysis showed that RVR-trained first-year residents had higher confidence scores than untrained third-year residents for knee and shoulder images, but not for pelvic or ankle images. Trained second-year residents had higher confidence scores than untrained third-year residents for shoulder images, but not for knee, pelvic, or ankle images.

The study had a relatively small sample size, but Bailey believes the results would be the same with more study participants "because [RVR] requires everybody to systematically go through a case over and over again."

"We all know that repetition is the mother of learning," he said.

Branching out

Real View Radiology is now being used in musculoskeletal training for IU Health residents, and there are plans to expand it to other areas, such as cardiac and obstetric imaging.

RVR may also soon be available to other healthcare facilities. The plan in the next nine to 12 months is to offer the program to five or so institutions for a free six-month trial. "We will see what kind of feedback we get from others and will go from there," Bailey said. Depending on the results, RVR may become available commercially.

Bailey believes that radiology's growing workload requires the development of tools like Real View Radiology.

"I think there are so many ways to improve our education, and we have to work harder as radiologists because we are getting more cases during the day," he said. "Search patterns really are everything in radiology, and anything we can do to help residents or trainees improve their search patterns will ultimately help them be more efficient and more effective."