AI algorithms appear to have clinical value in detecting normal x-rays: by flagging chest x-rays as normal versus abnormal, they may reduce reading times for radiologists, according to research presented recently at the RSNA meeting in Chicago.

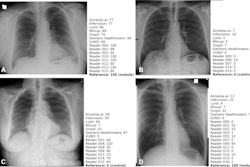

In a session on chest imaging, scientists from AI developers Lunit and DeepTek.ai presented separate studies of AI algorithms configured to segregate patient chest x-ray images into those with significant clinical findings and those considered normal. In both cases, the algorithms ruled out a potentially significant number of reads.

“Actually, what we want to do is spend time on non-plain radiographs now, but then what should we do for the chest x-rays?” said Simon Sanghyup Lee, medical director of Lunit's Cancer Screening Group.

Lee and colleagues tested a newly designed algorithm trained on about 400,000 chest x-rays from various sources. The model was trained to consider any finding, including medical devices, incorrect patient positioning, or poor image quality, as abnormal, given that it is “actually impossible to define a single definition of what is normal,” Lee noted.

The model was then evaluated on three independent retrospective external data sets that included a total of 8,505 images, with 3,567 labeled as normal and 3,853 labeled as abnormal.

According to the analysis, the algorithm correctly classified 22% of all chest x-rays (n = 1,765) as normal, and these cases could potentially be removed from formal reporting, with the AI serving as a second reader, Lee said. The overall sensitivity of the model for distinguishing between normal and abnormal x-rays was 97.8%.
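For readers who want to see how the two headline figures relate, the following is a minimal sketch of how a "share of studies correctly classified as normal" and a sensitivity value can be derived from confusion-matrix counts. The class name, function names, and counts are all hypothetical illustrations, not data or code from the Lunit study.

```python
from dataclasses import dataclass


@dataclass
class TriageCounts:
    """Confusion-matrix counts for a normal-vs-abnormal filter (hypothetical)."""
    true_abnormal: int    # abnormal studies the model flagged as abnormal
    missed_abnormal: int  # abnormal studies the model wrongly called normal
    true_normal: int      # normal studies the model correctly called normal
    false_abnormal: int   # normal studies the model flagged as abnormal

    @property
    def total(self) -> int:
        return (self.true_abnormal + self.missed_abnormal
                + self.true_normal + self.false_abnormal)


def sensitivity(c: TriageCounts) -> float:
    """Fraction of truly abnormal studies that the model flags as abnormal."""
    return c.true_abnormal / (c.true_abnormal + c.missed_abnormal)


def removable_share(c: TriageCounts) -> float:
    """Fraction of ALL studies correctly classified as normal."""
    return c.true_normal / c.total


# Made-up counts for illustration only; these are not the study's numbers.
example = TriageCounts(true_abnormal=950, missed_abnormal=50,
                       true_normal=400, false_abnormal=600)
print(f"sensitivity: {sensitivity(example):.1%}")
print(f"removable share: {removable_share(example):.1%}")
```

The sketch shows why a filter tuned for very high sensitivity tends to clear only a minority of studies: many normal x-rays are still flagged as abnormal and routed to a radiologist.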

In addition, a second, commercially available model (Insight CXR, version 3) that was run sequentially detected 16.7% (4/24) of the significant or critical abnormal cases missed by the normal filtering model, he added.

“Normal filtering AI has the potential to reduce the workload of radiologists, while combined use of detection AI may act as a safety net for missed cases,” Lee said.
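The combined use Lee describes can be pictured as a two-stage routing rule. The sketch below is an assumption-laden illustration, not the vendors' implementation: the function name `route_study`, the `normal_score` and `detector_findings` inputs, and the 0.9 threshold are all hypothetical.

```python
from typing import Sequence


def route_study(normal_score: float,
                detector_findings: Sequence[str],
                normal_threshold: float = 0.9) -> str:
    """Route one chest x-ray through a hypothetical two-stage triage.

    normal_score: assumed output of a normal filtering model (1.0 = confidently normal).
    detector_findings: assumed list of findings from a separate detection model.
    normal_threshold: illustrative cutoff, not a value from the study.
    """
    if normal_score < normal_threshold:
        # Stage 1: the filter is not confident the study is normal.
        return "radiologist"
    if detector_findings:
        # Stage 2 (safety net): the detection model disagrees with the filter.
        return "radiologist"
    return "likely normal"


# Hypothetical studies for illustration.
print(route_study(0.97, []))           # filter confident, detector silent
print(route_study(0.97, ["nodule"]))   # filter confident, detector raises a finding
print(route_study(0.40, []))           # filter not confident
```

Only studies that pass both stages leave the worklist in this sketch, which is how a second model can recover some cases the first one missed.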

In a second presentation during the session, Richa Pant, PhD, a lead research scientist at DeepTek.ai, shared results from a study that tested a normal filtering AI model on 17,500 frontal chest x-rays collected from four different sites between April 2020 and November 2022.

Three radiologists annotated the images, with the labels of the most experienced radiologist considered the ground truth, Pant said. The model was used to process the scans and segregate them as “likely normal” or “suspected abnormal.”

According to the analysis, the AI segregated normal scans in up to 63% of cases, with an error rate of up to 11%, Pant said. By comparison, human readers segregated up to 76% of total cases, also with an error rate of up to 11%. Moreover, the human readers agreed with the AI results for 95% of the scans, and expert readers found abnormalities in only 1% of the remaining 5% of the scans.

Ultimately, the results demonstrated that the company’s algorithm could segregate normal scans from abnormal scans as effectively as a human reader, Pant said.

“The approach can be used to optimize the radiology workflow and reduce human fatigue in reporting scans,” she concluded.