Integrating eye-tracking data that map how radiologists interpret x-rays into deep learning AI algorithms could be key to developing more “human-centered” AI, according to a recent study.

A group led by researchers in Lisbon, Portugal, conducted a systematic literature review of the use of eye gaze-driven data in chest x-ray deep learning (DL) models and ultimately proposed improvements that could enhance the approach.

“There is a clear demand for more human-centric technologies to bridge the gap between DL systems and human understanding,” noted lead author José Neves, of the University of Lisbon, and colleagues. The study was published in the March issue of the European Journal of Radiology.

DL models have demonstrated remarkable proficiency in various tasks in radiology, yet their internal decision-making processes remain largely opaque, the authors explained. This presents a so-called “black-box” problem, in which the logic leading to a model’s decisions is inaccessible to human scrutiny, they wrote.

A promising method to help overcome this problem is to integrate data from studies that use eye-tracking hardware and software to characterize how radiologists read normal and abnormal chest x-rays, the authors added.

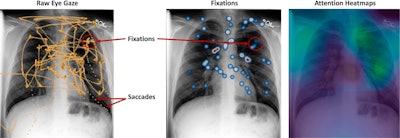

These data capture features such as saccades (rapid eye movements that occur when the viewer shifts gaze from one point of interest to another) and fixations (periods when the eyes remain relatively still), and can be presented as attention maps, the researchers wrote.

Difference between saccades, fixations, and attention heatmaps in radiologist eye-tracking studies. Image courtesy of the European Journal of Radiology.
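
To make the idea of an attention map concrete, the following is a minimal sketch of one common way to turn fixations into a heatmap: a Gaussian is placed at each fixation point and weighted by how long the eyes rested there. The function name, the `(x, y, duration)` input format, and the `sigma` value are assumptions made for illustration; they are not taken from the study.

```python
import numpy as np

def fixations_to_heatmap(fixations, height, width, sigma=25.0):
    """Build a normalised attention heatmap from fixation points.

    fixations: iterable of (x, y, duration) tuples in pixel coordinates
               (a hypothetical format chosen for this sketch).
    sigma:     spread of each Gaussian in pixels (an assumed value).
    """
    # Pixel coordinate grids: ys holds row indices, xs holds column indices.
    ys, xs = np.mgrid[0:height, 0:width]
    heatmap = np.zeros((height, width), dtype=np.float64)
    for x, y, duration in fixations:
        # Longer fixations contribute more attention around (x, y).
        heatmap += duration * np.exp(
            -((xs - x) ** 2 + (ys - y) ** 2) / (2.0 * sigma ** 2)
        )
    peak = heatmap.max()
    # Scale to [0, 1]; an empty fixation list yields an all-zero map.
    return heatmap / peak if peak > 0 else heatmap

# Two made-up fixations on a 128 x 100 pixel image.
fixations = [(40, 30, 0.8), (90, 70, 0.3)]
heatmap = fixations_to_heatmap(fixations, height=100, width=128, sigma=10.0)
```

In this toy example the longer fixation dominates the map, which is the behavior an attention heatmap is meant to convey.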

To explore optimal methods for integrating such data, the group performed a systematic review of current approaches. The initial search yielded 180 research papers, of which 60 were selected for detailed analysis.

Primarily, the researchers answered three questions, as follows:

- What architectures and fusion techniques are available to integrate eye-tracking data into deep-learning approaches to localize and predict lesions?

- How is eye-tracking data preprocessed before being incorporated into multimodal deep-learning architectures?

- How can eye-gaze data promote explainability in multimodal deep-learning architectures?

Ultimately, incorporating eye-gaze data can ensure that the features a DL model selects align with the image characteristics radiologists deem relevant for a diagnosis, according to the researchers. Thus, these models can become more interpretable and their decision process more transparent, they suggested.
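
One way to picture this alignment is as a score that compares where a model "looks" with where a radiologist looked. The sketch below uses the Kullback-Leibler divergence between a gaze heatmap and a model attention map, both normalized to sum to 1. This is an illustrative assumption, not a method the review prescribes, and the function name and toy arrays are hypothetical.

```python
import numpy as np

def attention_alignment_kl(model_map, gaze_map, eps=1e-8):
    """KL(gaze || model) between two non-negative 2D maps.

    Lower values mean the model's attention is closer to the
    radiologist's gaze; 0 means the normalised maps are identical.
    """
    # Flatten, add a small constant to avoid log(0), then normalise.
    p = np.asarray(gaze_map, dtype=np.float64).ravel() + eps
    q = np.asarray(model_map, dtype=np.float64).ravel() + eps
    p /= p.sum()
    q /= q.sum()
    return float(np.sum(p * np.log(p / q)))

# Toy 4 x 4 maps: the radiologist fixated on one region.
gaze = np.zeros((4, 4))
gaze[1, 1] = 1.0
aligned = gaze.copy()            # model attends to the same region
misaligned = np.zeros((4, 4))
misaligned[3, 3] = 1.0           # model attends elsewhere

score_aligned = attention_alignment_kl(aligned, gaze)
score_misaligned = attention_alignment_kl(misaligned, gaze)
```

Here `score_aligned` is zero while `score_misaligned` is large. In a training setting, a term like this could in principle be added to the task loss in a differentiable framework so that the model is nudged toward regions radiologists attended to.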

The significance of this review is that it provides concrete answers regarding the role of eye-movement data and how to best integrate it into radiology DL algorithms, they noted.

“To our understanding, our survey is the first to conduct a comprehensive literature review and a comparative analysis of features present in gaze data that have the potential to lead to deep learning models better suited for aiding clinical practice,” the researchers concluded.
