GPT-4 Vision (GPT-4V) performs well on text-based radiology exam questions but struggles to answer image-related questions accurately, according to a study published September 3 in Radiology.

The findings establish a baseline for the model’s radiology knowledge, noted lead author Nolan Hayden, MD, of Henry Ford Health in Detroit, MI, and colleagues.

“Given the current challenges in accurately interpreting key radiologic images and the tendency for hallucinatory responses, the applicability of GPT-4V in information-critical fields such as radiology is limited,” the group wrote.

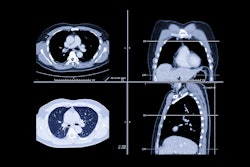

Until recently, ChatGPT’s lack of image processing capabilities limited its potential use in radiology to text-based interactions. However, in September 2023, OpenAI released GPT-4V, which offers potential new applications in radiology, the researchers wrote.

To gauge the model’s baseline knowledge, the researchers tested it on American College of Radiology (ACR) Diagnostic Radiology In-Training (DXIT) exam questions. The ACR DXIT exam is used by many radiology residency programs to benchmark residents’ progress during training.

In total, the group posed 377 retired DXIT questions to GPT-4V, spanning 13 domains: one general domain and 12 subspecialties. Of these, 182 questions contained images and 195 were text-only. The ACR-designated correct answer served as the ground truth for each question.

Overall, GPT-4V answered 65.3% (246 of 377) of all questions correctly. The model performed better on text-only questions, with 81.5% (159 of 195) accuracy, than on image-based questions, with 47.8% (87 of 182) accuracy (χ² test, p < 0.001).
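The reported percentages and the text-versus-image comparison can be rechecked from the counts alone. The sketch below is an illustration, not the authors' analysis: it assumes a 2×2 table (question type × correct/incorrect) built from the reported counts and a Pearson chi-square test without continuity correction, since the article does not state the exact test settings. The helper names `pct` and `pearson_chi2_2x2` are hypothetical.

```python
import math

# Counts reported in the study: correct answers and totals per question type.
TEXT_CORRECT, TEXT_TOTAL = 159, 195
IMAGE_CORRECT, IMAGE_TOTAL = 87, 182


def pct(correct: int, total: int) -> float:
    """Accuracy as a percentage, rounded to one decimal place."""
    return round(100 * correct / total, 1)


def pearson_chi2_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square (assumed: no continuity correction) for the
    2x2 table [[a, b], [c, d]]; returns (statistic, p-value), 1 df."""
    n = a + b + c + d
    stat = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With 1 degree of freedom, P(X > stat) = erfc(sqrt(stat / 2)).
    p = math.erfc(math.sqrt(stat / 2))
    return stat, p


# Assumed table layout: rows are text-only and image-based questions,
# columns are correct and incorrect answers.
a, b = TEXT_CORRECT, TEXT_TOTAL - TEXT_CORRECT      # 159 correct, 36 incorrect
c, d = IMAGE_CORRECT, IMAGE_TOTAL - IMAGE_CORRECT   # 87 correct, 95 incorrect

overall = pct(TEXT_CORRECT + IMAGE_CORRECT, TEXT_TOTAL + IMAGE_TOTAL)
text_acc = pct(TEXT_CORRECT, TEXT_TOTAL)
image_acc = pct(IMAGE_CORRECT, IMAGE_TOTAL)
chi2, p_value = pearson_chi2_2x2(a, b, c, d)

print(f"overall {overall}%, text-only {text_acc}%, image-based {image_acc}%")
print(f"chi2 = {chi2:.2f}, p = {p_value:.1e}")
```

Under these assumptions the sketch reproduces the reported 65.3%, 81.5%, and 47.8% and yields a χ² statistic of about 47.3, with a p-value far below 0.001. Applying Yates' continuity correction would lower the statistic slightly without changing that conclusion.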

The 81.5% accuracy for text-only questions mirrors the performance of the model’s predecessor, the authors noted.

“The consistent and relatively good performance on text-based questions, despite differences in question sets and input methods, may suggest that the model has a degree of textual understanding in radiology,” they wrote.

Consistent with the literature on large language models (LLMs), however, the study also found evidence of hallucinatory responses, a well-described LLM failure mode. For instance, GPT-4V incorrectly localized a lesion on an abdominal CT image to an entirely different organ on the contralateral side, leading to an incorrect diagnosis, the group wrote.

In addition, the researchers noted a behavior not reported in other early explorations of the model: GPT-4V sometimes declined to answer questions, particularly image-based ones.

“This newfound response declination phenomenon suggests an internal tightening of the model’s safeguards,” the authors wrote. “While these safeguards may be critical for ensuring general user safety, they may also inadvertently obscure our ability to gain a full understanding of GPT-4V's capabilities within the radiology domain in its current state.”

Ultimately, the findings underscore the need for more specialized and rigorous evaluation methods to accurately assess LLM performance on radiology tasks, the group concluded.
