Findings from a large clinical implementation study of an AI tool for pulmonary embolism (PE) diagnosis warrant continued expert review of AI results, and the researchers recommend a quality oversight process that supports both clinical oversight and continuing education in how to use AI tools in the clinical environment, according to research presented December 4 at RSNA 2024 in Chicago.

The session demonstrated the impact of an AI diagnostic tool for PE that was provided through a clinical AI operating system (OS) platform deployed at scale. Specifically, the demonstration involved a large integrated healthcare network with 19 hospitals and 20 imaging centers in New York, and an AI Quality Oversight Process (AIQOP) overseeing AIDOC's AI tool for PE detection.

Shlomit Goldberg-Stein, MD, associate professor of radiology at Zucker School of Medicine at Hofstra Northwell, presented data gathered through the study of the AI tool. The tool was evaluated retrospectively in a real-world cohort of 32,501 CT pulmonary angiogram (CTPA) scans.

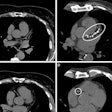

The AI tool provides a heat map colorized to indicate sites of acute PE in context with the CT image.

This study in over 30,000 patients demonstrates a high agreement rate of 98% between AI-informed radiologists and the AI tool, Goldberg-Stein explained for AuntMinnie. Within the 2% of cases where they disagreed, the AI tool was still correct 23% of the time, which is why the researchers recommended a quality oversight process.

At Northwell, CTPA scans were routinely subjected to AI processing over a period of approximately 17 months, between September 9, 2021, and February 20, 2023. To ensure a fully validated dataset, the study extracted each interpreting radiologist's yes-or-no PE finding from required custom fields within the report templates and cross-checked those findings with a locally developed natural language processing tool that was applied to all reports. Any discrepancies were resolved by manual review.

As part of the study, Goldberg-Stein and team explored agreement rates between AI-informed radiologists and the AI tool in real-time clinical practice. They assessed the patterns of agreement for positive PE and negative PE between the two groups and odds of correctness of the AI tool versus the radiologist after expert adjudication through Northwell's AIQOP program.

The team hypothesized that there would be very high agreement rates between interpreting radiologists and the AI tool. However, when disagreements did occur, expert adjudicating radiologists would more often agree with the original interpreting radiologist, rather than with the AI tool.

Goldberg-Stein explained that the clinical patient report would be addended as necessary, with the AIQOP expert result serving as the reference standard.

Among the data shared, Goldberg-Stein reported the overall positive PE rate at 7% in the emergency department, 7% in outpatients, and 13% in inpatients (p < 0.0001). Agreement was very high overall at 97.82%, as hypothesized, with a similar pattern observed across all patient settings.

Agreement was significantly higher for AI-negative results (the interpreting radiologist agreed with the AI in 98.44% of cases) than for AI-positive results (91.51%), with the same pattern observed across all three settings, Goldberg-Stein added.

When the disagreements were analyzed, the team found a low overall disagreement rate of 2.18% (708 of the 32,501 scans). In those instances, the radiologist was correct 76.98% of the time (545 scans) but, importantly, the AI was correct 23.02% of the time (163 scans), according to Goldberg-Stein.
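The reported percentages can be reproduced directly from the scan counts. The short Python sketch below is only an arithmetic check of the figures quoted above; the variable and function names are illustrative and are not taken from the study.

```python
# Counts as reported in the presentation.
TOTAL_SCANS = 32_501
DISAGREEMENTS = 708
RADIOLOGIST_CORRECT = 545  # adjudication sided with the radiologist
AI_CORRECT = 163           # adjudication sided with the AI tool


def pct(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to two decimals."""
    return round(100 * part / whole, 2)


# The two adjudicated outcomes should account for every disagreement.
assert RADIOLOGIST_CORRECT + AI_CORRECT == DISAGREEMENTS

disagreement_rate = pct(DISAGREEMENTS, TOTAL_SCANS)
agreement_rate = pct(TOTAL_SCANS - DISAGREEMENTS, TOTAL_SCANS)
radiologist_share = pct(RADIOLOGIST_CORRECT, DISAGREEMENTS)
ai_share = pct(AI_CORRECT, DISAGREEMENTS)

print(f"Disagreement rate:  {disagreement_rate}%")
print(f"Agreement rate:     {agreement_rate}%")
print(f"Radiologist correct: {radiologist_share}% of disagreements")
print(f"AI correct:          {ai_share}% of disagreements")
```

The results line up with the 2.18% disagreement rate, the 97.82% overall agreement rate, and the 76.98% and 23.02% split reported by Goldberg-Stein.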

"After expert adjudication, AI was still correct in 23% of cases," Goldberg-Stein said. Within the 2.18% of disagreements, the radiologists' correctness rate was found to be higher for positive PE at 84.85% and, similarly, the AI correctness rate was also higher for positive PE at 37%. The 37% were true positives for AI, she said.

"Both AI and the radiologist rendered the correct diagnosis not reported by the other," Goldberg-Stein said. Overall, however, radiologists were nearly three times as likely as the AI to be correct in cases where the two disagreed, a difference that was statistically significant. Out of 3,137 positive PE exams, 3% were correctly identified by AI only, and 12.5% were correctly identified by the radiologist only, a ratio of about 1 to 4.
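For readers who want to sanity-check these comparisons, the sketch below computes two simple ratios from the figures quoted in this article. It is an illustration only: the article does not say which statistic lies behind the "nearly three times" figure, so the raw count ratio here is an assumption about one way to compare the two and may not match the researchers' method. The variable names are hypothetical.

```python
# Adjudicated disagreements, as reported: who was correct.
RADIOLOGIST_CORRECT = 545
AI_CORRECT = 163

# Share of the 3,137 positive PE exams caught by only one reader, as reported.
# These are rounded percentages, so any derived ratio is approximate.
AI_ONLY_PCT = 3.0
RADIOLOGIST_ONLY_PCT = 12.5

# Simple ratio of counts among disagreements (not necessarily the
# statistic the researchers used).
count_ratio = RADIOLOGIST_CORRECT / AI_CORRECT

# Ratio of radiologist-only to AI-only detections among positive exams.
positive_ratio = RADIOLOGIST_ONLY_PCT / AI_ONLY_PCT

print(f"Radiologist-to-AI correctness ratio in disagreements: {count_ratio:.2f}")
print(f"Radiologist-only to AI-only positives: {positive_ratio:.2f}")
```

The second ratio comes out slightly above 4, consistent with the roughly 1-to-4 relationship described above. The first comes out somewhat above 3, which suggests the quoted multiple may rest on a different measure than a raw ratio of counts.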

Goldberg-Stein noted that this was not a diagnostic accuracy study. While the limitations of the study included its retrospective design and postdeployment strategy, a strength was in the clinical implementation design. Also, because the radiologists were AI-informed, the results couldn't completely measure the influence of AI on the radiologists' perspectives and diagnoses.

More is expected to come from the clinical implementation of AI across Northwell's facilities. A study of using this AI algorithm as a triage tool across all patient settings is forthcoming, Goldberg-Stein said.
