Deep convolutional neural network (DCNN) classification systems could help assess breast positioning in mammography, suggest Japanese findings published May 1 in Scientific Reports.

A team led by Haruyuki Watanabe, PhD, from Gunma Prefectural College of Health Sciences in Maebashi found that their DCNN method classified breast positioning with moderate accuracy, including for the inframammary fold and nipple profile criteria.

"The results of this study suggest that DCNNs can be used to classify mammographic breast positioning to evaluate imaging accuracy," Watanabe and colleagues wrote. "The recognition of positioning criteria accuracy provides feedback to radiological technologists and can contribute to improving the accuracy of mammographic techniques."

Previous research suggests that inappropriate breast positioning is a common cause of mammographic imaging failure. Technologists must be trained in proper positioning, but the researchers noted that such training can be labor-intensive and difficult because mammograms are evaluated subjectively, by visual inspection.

Breast imaging departments are increasingly integrating artificial intelligence (AI) into the breast cancer screening process. In particular, DCNN-based models have shown the ability to distinguish benign from malignant breast lesions.

Watanabe and colleagues pointed out that, to the best of their knowledge, there are no reports on the use of DCNNs for verifying breast positioning. They sought to add to the literature by proposing such a classification system for quality control and validation of breast positioning in mammography.

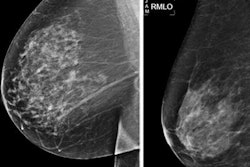

The team designed a two-step process for verifying mammograms: automated assessment of positioning, and classification on three scales (that is, criteria that determine breast positioning quality) using DCNNs. After acquiring mammograms whose three measures had been visually evaluated and labeled according to guidelines, the researchers used image processing to automatically detect the region of interest.
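The article does not describe which image-processing technique the researchers used to find the region of interest. As a rough illustration only, the sketch below assumes breast tissue is brighter than the air background and crops the image to the bounding box of pixels above a global intensity threshold. The helper names `detect_breast_roi` and `crop_roi` and the mean-intensity threshold are hypothetical choices, not the study's method.

```python
import numpy as np

def detect_breast_roi(image, threshold=None):
    """Return (row_min, row_max, col_min, col_max) of pixels brighter than `threshold`.

    Hypothetical helper, not the study's code. Assumes breast tissue is brighter
    than the air background, so one global threshold separates the two.
    """
    img = np.asarray(image, dtype=float)
    if threshold is None:
        threshold = img.mean()  # crude global threshold (assumption, not Otsu's method)
    mask = img > threshold  # True where a pixel is treated as breast tissue
    if not mask.any():
        raise ValueError("no pixels above threshold")
    rows = np.flatnonzero(mask.any(axis=1))  # indices of rows containing tissue
    cols = np.flatnonzero(mask.any(axis=0))  # indices of columns containing tissue
    return int(rows[0]), int(rows[-1]), int(cols[0]), int(cols[-1])

def crop_roi(image, box):
    """Crop the image to the inclusive bounding box from detect_breast_roi."""
    r0, r1, c0, c1 = box
    return np.asarray(image)[r0:r1 + 1, c0:c1 + 1]

# Synthetic 100x80 "mammogram": dark background plus a bright block standing in for the breast.
demo = np.zeros((100, 80))
demo[10:60, 0:40] = 200.0
box = detect_breast_roi(demo)
print(box)  # (10, 59, 0, 39)
print(crop_roi(demo, box).shape)  # (50, 40)
```

A real pipeline would also have to cope with burned-in labels, noise, and the pectoral muscle, and might replace the mean threshold with a more robust method such as Otsu's.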

They then classified mammographic positioning accuracy using five previously established DCNN architectures: VGG-16, Inception-v3, Xception, Inception-ResNet-v2, and EfficientNet-B0.

The study authors tested their method on 1,631 mediolateral oblique mammographic views collected from an open database. The best classification accuracy was 78% for the inframammary fold, achieved with VGG-16, and 73% for the nipple profile, achieved with Xception.
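Classification accuracy here is the fraction of test images whose predicted class matches the visually assigned label, and the "best" figure for each criterion comes from whichever architecture scores highest. The sketch below computes that fraction and picks the top model for one criterion; the toy labels and the model names `model_a` and `model_b` are hypothetical and do not reflect the study's data.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the reference labels."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("label lists must be non-empty and equal in length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)

def best_architecture(predictions_by_model, y_true):
    """Return (model_name, accuracy) for the highest-scoring model on one criterion."""
    scores = {name: accuracy(y_true, preds) for name, preds in predictions_by_model.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]

# Toy example with hypothetical labels for a single positioning criterion.
labels = [0, 1, 2, 0, 1]
predictions = {
    "model_a": [0, 1, 2, 0, 0],  # 4 of 5 correct
    "model_b": [0, 2, 2, 1, 1],  # 3 of 5 correct
}
print(best_architecture(predictions, labels))  # ('model_a', 0.8)
```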

"Furthermore, using the 'softmax' function [an activation function in DCNNs for image classification], the breast positioning criteria could be evaluated quantitatively by presenting the predicted value, which is the probability of determining positioning accuracy," Watanabe et al wrote.

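The softmax function turns a network's raw output scores (logits) $z$ into probabilities, $\mathrm{softmax}(z)_i = e^{z_i} / \sum_j e^{z_j}$, which sum to 1, so the largest one can be reported as the predicted value. The sketch below assumes a hypothetical three-level positioning grade with made-up class names and logits; it illustrates the function itself, not the study's trained models.

```python
import numpy as np

def softmax(logits):
    """Convert raw DCNN output scores (logits) into probabilities that sum to 1."""
    z = np.asarray(logits, dtype=float)
    z = z - z.max()  # subtract the largest logit for numerical stability; result is unchanged
    exp_z = np.exp(z)
    return exp_z / exp_z.sum()

# Hypothetical positioning grades; the study's actual class names may differ.
CLASSES = ["adequate", "borderline", "inadequate"]

def predict_positioning(logits):
    """Return the predicted grade and its softmax probability (the 'predicted value')."""
    probs = softmax(logits)
    idx = int(np.argmax(probs))
    return CLASSES[idx], float(probs[idx])

label, p = predict_positioning([2.0, 0.5, -1.0])
print(label, round(p, 3))  # adequate 0.786
```

Subtracting the largest logit before exponentiating avoids overflow without changing the output, because the common factor cancels in the ratio.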
The team plans to explore DCNN-based quality control for positioning in other imaging applications and to improve classification performance on the latest mammographic database.