AI improves chest x-ray interpretation by nonradiologist practitioners, which could be useful in low-resource settings, according to research published January 29 in Chest.

A team led by Jan Rudolph, MD, from University Hospital, LMU Munich in Germany found that a convolutional neural network (CNN)-based AI system focusing on chest x-rays improved the performance of nonradiologists in diagnosing several chest pathologies.

“In an emergency unit setting without 24/7 radiology coverage, the presented AI solution features an excellent clinical support tool to nonradiologists, similar to a second reader, and allows for a more accurate primary diagnosis and thus earlier therapy initiation,” the Rudolph team wrote.

Chest x-rays are the go-to modality for assessing whether a disease requires immediate treatment. But making that call isn't easy: it takes expertise to recognize projection phenomena and superimpositions, and to tell apart different findings that can look alike on the image.

This can be challenging for nonradiologists, who do not routinely interpret diagnostic imaging exams. Yet in emergency settings without round-the-clock radiologist coverage, they may still have to make clinical decisions based on such findings.

Previous studies have explored AI's potential to interpret chest x-rays and thereby help streamline clinical workflows and improve patient care.

Rudolph and co-authors evaluated the performance of an AI algorithm trained on both public and expert-labeled chest imaging data.

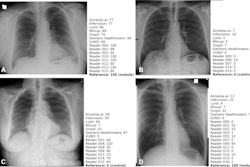

The study included 563 chest x-rays, each retrospectively assessed twice by three board-certified radiologists, three radiology residents, and three nonradiology residents with emergency unit experience. The nonradiologists were tested on diagnosing four suspected pathologies: pleural effusion, pneumothorax, consolidations suspicious for pneumonia, and nodules.

On internal validation, the AI algorithm achieved area under the curve (AUC) values ranging from 0.95 (nodules) to 0.995 (pleural effusion).
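For readers less familiar with the metric, an AUC can be read as the probability that a model scores a randomly chosen positive case higher than a randomly chosen negative one, with 1.0 meaning perfect separation and 0.5 meaning chance. The short Python sketch below computes it that way (the Mann-Whitney formulation) on a few made-up scores. The function name and all numbers are hypothetical; they are not data from the study and do not describe how the authors calculated their AUCs.

```python
from typing import Sequence


def auc_mann_whitney(pos_scores: Sequence[float], neg_scores: Sequence[float]) -> float:
    """Hypothetical helper: AUC as the fraction of (positive, negative) pairs
    in which the positive case receives the higher score (ties count as half)."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


# Made-up model scores for illustration only, NOT values from the study.
effusion_present = [0.92, 0.85, 0.77, 0.60]
effusion_absent = [0.10, 0.35, 0.62, 0.20]

print(auc_mann_whitney(effusion_present, effusion_absent))  # prints 0.9375
```

Here 15 of the 16 positive-negative pairs are ranked correctly (only 0.60 vs. 0.62 is not), giving 15/16 = 0.9375.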

With AI assistance, nonradiologist readers' accuracy improved for all four pathologies, though by varying margins.

Accuracy of nonradiologist readers in interpreting chest x-rays with and without AI assistance

| Pathology | Without AI assistance | With AI assistance |
|---|---|---|
| Pleural effusion | 84% | 87.9% |
| Pneumothorax | 92.8% | 95% |
| Pneumonia | 79% | 84.9% |
| Nodules | 74.6% | 79.7% |
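Because "increase in accuracy" can mean either an absolute change in percentage points or a change relative to the baseline, the small Python sketch below computes both from the accuracy figures in the table above. The dictionary and function names are illustrative assumptions, not part of the study. The relative gains for pneumothorax and nodules work out to roughly 2% and 7%, which may be how the accuracy gains quoted for those pathologies were expressed, although the article does not say which convention was used.

```python
from typing import Dict, Tuple

# Accuracy (%) of nonradiologist readers as (without AI, with AI),
# copied from the table above. Names are illustrative only.
ACCURACY: Dict[str, Tuple[float, float]] = {
    "Pleural effusion": (84.0, 87.9),
    "Pneumothorax": (92.8, 95.0),
    "Pneumonia": (79.0, 84.9),
    "Nodules": (74.6, 79.7),
}


def accuracy_gains(without_ai: float, with_ai: float) -> Tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in %)."""
    absolute = with_ai - without_ai
    relative = absolute / without_ai * 100
    return absolute, relative


for pathology, (before, after) in ACCURACY.items():
    abs_gain, rel_gain = accuracy_gains(before, after)
    print(f"{pathology}: +{abs_gain:.1f} points ({rel_gain:.1f}% relative)")
```

For nodules, for example, this prints "+5.1 points (6.8% relative)".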

The team also reported that AI assistance improved consensus among nonradiologist readers in detecting pneumothorax, with the AUC rising from 0.846 to 0.974 (p < 0.001). This corresponded to a 30% increase in sensitivity and a 2% increase in accuracy, while specificity was maintained at an optimized level.

The researchers also highlighted that, among nonradiologist readers, nodule detection benefited most from AI assistance: sensitivity rose by 53% and accuracy by 7%, and the AUC increased from 0.723 to 0.89 (p < 0.001).

Finally, the team evaluated radiologists' performance with AI assistance and found smaller, “mostly nonsignificant” gains in performance, sensitivity, and accuracy.

The study authors noted that AI assistance in this area could aid less experienced physicians when support from experienced radiologists or emergency physicians is not guaranteed.

“In this case, the number of potentially missed findings could be significantly reduced,” they wrote.
