An external validation study conducted at a primary care clinic in Spain has demonstrated the value, as well as important limitations, of AI software for interpreting chest x-rays in clinical practice, according to an article published on March 3 in Scientific Reports.

In a prospective study involving 278 chest radiographs and reports from a clinic in the region of Catalonia, researchers found that a commercial AI algorithm called ChestEye achieved 95% accuracy but only 48% sensitivity. The algorithm performed better on some conditions than on others, noted lead author Queralt Catalina, of University of Vic-Central University of Catalonia, and colleagues.

“The algorithm has been validated in the primary care setting and has proven to be useful when identifying images with or without conditions,” the authors noted.

However, to become a valuable tool for supporting radiologists, the algorithm requires additional real-world training to improve its diagnostic performance for some of the conditions analyzed, the group added.

ChestEye uses a computer-aided diagnosis (CAD) algorithm that analyzes x-rays for 75 different findings and localizes the features on images as heatmaps. It can also generate preliminary text reports that incorporate relevant findings in chest x-ray images.

The algorithm was developed by a Lithuanian company called Oxipit and was trained on more than 300,000 images during its development, the authors noted.

In this study, the researchers aimed to externally validate the technology in a prospective observational study of patients who were scheduled for chest x-rays. They obtained a radiologist's report for each patient (considered the gold standard) and compared its findings with those the AI algorithm generated from the same images.
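The comparison described above can be pictured as a per-finding tally: for each image and each finding, the radiologist's report is treated as ground truth and the AI output is scored as a true positive, false positive, true negative, or false negative. The Python sketch below is only an illustration of that idea under stated assumptions; the finding labels (`"consolidation"`, `"nodule"`, `"abnormal rib"`) and the `tally` helper are hypothetical and are not ChestEye's actual label set, output format, or the authors' analysis code.

```python
from collections import Counter


def tally(reference: list[set[str]], predicted: list[set[str]],
          findings: list[str]) -> dict[str, Counter]:
    """Hypothetical per-finding TP/FP/TN/FN tally.

    `reference` holds the findings in each radiologist report (the gold
    standard); `predicted` holds the findings the AI flagged on the same image.
    """
    if len(reference) != len(predicted):
        raise ValueError("each image needs both a report and an AI output")
    counts = {f: Counter() for f in findings}
    for ref, pred in zip(reference, predicted):
        for f in findings:
            in_ref, in_pred = f in ref, f in pred
            if in_ref and in_pred:
                counts[f]["tp"] += 1  # both agree the finding is present
            elif in_pred:
                counts[f]["fp"] += 1  # AI flags something the report does not mention
            elif in_ref:
                counts[f]["fn"] += 1  # AI misses a reported finding
            else:
                counts[f]["tn"] += 1  # both agree the finding is absent
    return counts


# Toy data (NOT from the study): image 1 resembles the figure below, where the
# report lists only consolidation but the AI also flags a nodule and a rib.
reports = [{"consolidation"}, set(), {"nodule"}]
ai_outputs = [{"consolidation", "nodule", "abnormal rib"}, set(), set()]
for finding, c in tally(reports, ai_outputs,
                        ["consolidation", "nodule", "abnormal rib"]).items():
    print(finding, dict(c))
```

Scoring each finding separately keeps one overcalled finding (like the extra nodules) from hiding a correctly detected one on the same image.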

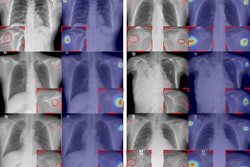

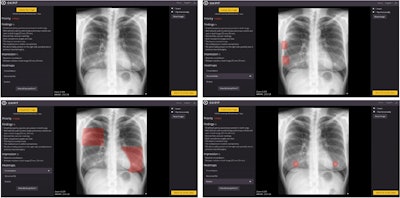

Image of a patient (upper-left) in which, according to the radiologist's report, there is only consolidation, but the algorithm detects an abnormal rib (upper-right), consolidation (lower-left), and two nodules (lower-right). Note that the algorithm confused mammary tissue with consolidation and the two mammary areolae with nodules. Image courtesy of Scientific Reports.

The study was performed with a sample of 278 images and reports, 51.8% of which showed no radiological abnormalities according to the radiologist's report. The AI algorithm achieved an average accuracy of 95%, a sensitivity of 48%, and a specificity of 98%, according to the researchers.
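High accuracy can coexist with low sensitivity because most findings are absent from most images, so true negatives dominate the count. The Python sketch below illustrates this with hypothetical confusion-matrix counts for a single finding; the numbers are invented for illustration and do not come from the study, which reports averages across conditions rather than one matrix. It also checks the identity accuracy = sensitivity × prevalence + specificity × (1 − prevalence).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Counts:
    """Confusion-matrix counts for one finding: AI output vs. radiologist report."""
    tp: int  # AI flags the finding and the radiologist reports it
    fp: int  # AI flags the finding but the radiologist does not
    tn: int  # neither flags the finding
    fn: int  # the radiologist reports it but the AI misses it


def metrics(c: Counts) -> dict[str, float]:
    """Sensitivity, specificity, and accuracy for a single finding."""
    total = c.tp + c.fp + c.tn + c.fn
    return {
        "sensitivity": c.tp / (c.tp + c.fn),
        "specificity": c.tn / (c.tn + c.fp),
        "accuracy": (c.tp + c.tn) / total,
    }


def accuracy_from_rates(sensitivity: float, specificity: float,
                        prevalence: float) -> float:
    """Accuracy as a prevalence-weighted mix of sensitivity and specificity."""
    return sensitivity * prevalence + specificity * (1 - prevalence)


# Hypothetical counts for one finding across 278 images (illustrative, NOT
# from the study): 25 images positive per the radiologist, 253 negative.
example = Counts(tp=12, fp=5, tn=248, fn=13)
m = metrics(example)
for name, value in m.items():
    print(f"{name}: {value:.1%}")

prevalence = (example.tp + example.fn) / 278
via_rates = accuracy_from_rates(m["sensitivity"], m["specificity"], prevalence)
print(f"accuracy via rates: {via_rates:.1%}")
```

With a prevalence of about 9% in this made-up case, accuracy stays above 90% even though the AI misses more than half of the positive images, which is why sensitivity is the more telling figure here.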

On the plus side, the algorithm was most sensitive in detecting external, upper abdominal, and cardiac and/or valvular implants, the group noted. On the other hand, it was least sensitive for conditions located in the mediastinum, vessels, and bone, they wrote.

Ultimately, the implementation of AI in healthcare appears to be an imminent reality that can offer significant benefits to both professionals and the general population, yet it is essential to validate these tools in real clinical settings to counterbalance the "digital exceptionalism" they may enjoy during development, the researchers wrote.

External validation is crucial for ensuring nondiscrimination and equity in healthcare and should be a key requirement for the widespread implementation of AI algorithms, the researchers added. However, it is not yet specifically mandated by European legislation, they noted.

“Our study emphasizes the need for continuous improvement to ensure the algorithm’s effectiveness in primary care,” the group concluded.
