A commercially available AI algorithm shows potential for off-label use as a way to generate automatic reports for “unremarkable” chest x-rays, according to a study published August 20 in Radiology.

The finding by researchers in Copenhagen, Denmark, suggests that AI could eventually help streamline high-volume radiology workflows by handling some of the more “tedious parts of the work,” lead author Louis Plesner, MD, of Herlev and Gentofte Hospital in Denmark told AuntMinnie.com.

Among the unanswered questions about AI are whether its mistakes differ in kind from those of radiologists and whether AI mistakes are, on average, objectively worse than human mistakes.

To explore the issue, Plesner and colleagues evaluated Enterprise CXR (version 2.2, Annalise.ai), software cleared in Europe and the U.S. that can detect up to 124 findings, of which 39 are considered unremarkable, according to its developer.

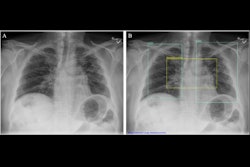

First, two thoracic radiologists labeled 1,961 patient chest x-rays as “remarkable” (1,231, or 62.8%) or “unremarkable” (730, or 37.2%) based on the reference standard. The unremarkable group included chest radiographs that displayed abnormalities of no clinical significance, which are typically treated as normal. Reports by radiologists for the images were classified similarly.

The researchers adapted the AI tool to generate a chest x-ray “remarkableness” probability, which was used to calculate its specificity (a measure of a medical test’s ability to correctly identify people who do not have a disease) at different AI sensitivities.
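To make the idea of "specificity at a fixed sensitivity" concrete, here is a minimal Python sketch. It is not the study's code: the function name `specificity_at_sensitivity` and the input format (one probability score and one 0/1 label per image) are assumptions made purely for illustration. The sketch picks the highest score cutoff that still flags the required share of remarkable images, then reports the share of unremarkable images that fall below that cutoff.

```python
import math


def specificity_at_sensitivity(scores, labels, target_sensitivity):
    """Return (threshold, sensitivity, specificity) for a target sensitivity.

    scores: "remarkableness" probabilities, one per image (hypothetical input).
    labels: 1 for remarkable, 0 for unremarkable (reference standard).
    An image is flagged as remarkable when its score >= threshold.
    """
    positives = sorted(
        (s for s, y in zip(scores, labels) if y == 1), reverse=True
    )
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one image of each class")

    # Smallest number of remarkable images that must be flagged
    # to reach the target sensitivity.
    needed = max(1, math.ceil(target_sensitivity * len(positives)))

    # The highest cutoff that still flags that many remarkable images.
    threshold = positives[needed - 1]

    sensitivity = sum(s >= threshold for s in positives) / len(positives)
    specificity = sum(s < threshold for s in negatives) / len(negatives)
    return threshold, sensitivity, specificity
```

For example, with made-up scores for four remarkable and four unremarkable images, `specificity_at_sensitivity([0.9, 0.8, 0.6, 0.3, 0.1, 0.2, 0.5, 0.7], [1, 1, 1, 1, 0, 0, 0, 0], 1.0)` sets the cutoff at the lowest remarkable score, so no remarkable image is missed, and only the unremarkable images scoring below that cutoff count toward specificity.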

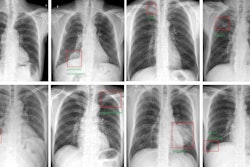

In addition, a thoracic radiologist graded the findings missed by the AI and/or the radiology reports as critical, clinically significant, or clinically insignificant.

To assess the specificity of the AI, the researchers configured it at three sensitivity thresholds: 99.9%, 99%, and 98%. At these thresholds, the AI tool correctly identified 24.5%, 47.1%, and 52.7% of unremarkable chest radiographs, respectively, while missing only 0.1%, 1%, and 2% of remarkable chest radiographs.
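As a rough sense of scale, the percentages can be converted into approximate image counts using the group sizes quoted earlier (1,231 remarkable and 730 unremarkable x-rays). The short Python sketch below does only that arithmetic. The resulting counts are back-of-the-envelope estimates derived from the rounded percentages in this article, not figures reported by the study, and the names used are illustrative.

```python
# Group sizes as quoted in the article.
N_REMARKABLE = 1231
N_UNREMARKABLE = 730

# (miss rate among remarkable images, specificity) at each operating point,
# taken from the rounded percentages quoted in the article.
OPERATING_POINTS = [
    (0.001, 0.245),  # 99.9% sensitivity
    (0.01, 0.471),   # 99% sensitivity
    (0.02, 0.527),   # 98% sensitivity
]


def approximate_counts(points=OPERATING_POINTS):
    """Return a list of (unremarkable correctly identified, remarkable missed).

    Counts are rounded estimates, since the inputs are rounded percentages.
    """
    return [
        (round(spec * N_UNREMARKABLE), round(miss * N_REMARKABLE))
        for miss, spec in points
    ]


for identified, missed in approximate_counts():
    print(f"~{identified} unremarkable identified, ~{missed} remarkable missed")
```

Read this way, each step down in sensitivity trades a small number of additional missed remarkable images for a much larger number of unremarkable images that the tool could, in principle, handle automatically.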

By comparison, the radiology reports had a sensitivity of 87.2% for remarkable radiographs and a lower rate of critical misses than the AI when the AI was fixed at a similar sensitivity. Plesner noted, however, that the mistakes made by AI were, on average, potentially more clinically severe for the patient than mistakes made by radiologists.

“This is likely because radiologists interpret findings based on the clinical scenario, which AI does not,” he said.

Plesner noted that a prospective implementation of the model using one of the thresholds suggested in the study is needed before widespread deployment can be recommended.

The full study can be found here.