Medical imaging is the economic artery of modern diagnostics, and AI-driven advances are prompting clinicians to rethink how they interpret scans. The global medical imaging AI market[1] is projected to expand eightfold by 2030, reaching an estimated $8.18 billion.

Ravinder Singh.

This growth reflects the sector's accelerating adoption of AI-driven diagnostics, which has turned what was once a supplementary tool into a linchpin of modern radiology. Yet fidelity checks between the original images used to develop AI algorithms and the rendered images used for diagnosis are often overlooked.

The methodologies governing image fidelity assessment remain mired in conventional techniques. Metrics like mean squared error (MSE) and peak signal-to-noise ratio (PSNR), long considered gold standards, now face scrutiny over whether they capture the true essence of diagnostic quality.
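For reference, both conventional metrics fit in a few lines. MSE averages the squared per-pixel differences, and PSNR rescales it as 10·log10(MAX²/MSE) in decibels. This minimal sketch assumes 8-bit grayscale images stored as nested Python lists (the `Image` alias is a hypothetical convenience). A real pipeline would use arrays and set `max_value` to the modality's data range, for example 4095 for 12-bit CT.

```python
import math

# Assumption for this sketch: a grayscale image is a list of rows of intensities.
Image = list[list[float]]


def mse(original: Image, rendered: Image) -> float:
    """Mean squared error: the average squared per-pixel difference."""
    if len(original) != len(rendered) or any(
        len(a) != len(b) for a, b in zip(original, rendered)
    ):
        raise ValueError("images must have the same shape")
    diffs = [
        (a - b) ** 2
        for row_a, row_b in zip(original, rendered)
        for a, b in zip(row_a, row_b)
    ]
    return sum(diffs) / len(diffs)


def psnr(original: Image, rendered: Image, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in decibels (infinite for identical images)."""
    error = mse(original, rendered)
    if error == 0:
        return math.inf
    return 10 * math.log10(max_value ** 2 / error)


# Usage: a uniform +5 brightness shift on a flat 2x2 patch (toy data).
original = [[100.0, 100.0], [100.0, 100.0]]
rendered = [[105.0, 105.0], [105.0, 105.0]]
print(mse(original, rendered))             # 25.0
print(round(psnr(original, rendered), 2))  # 34.15
```

Both numbers summarize the whole image as a single pixel-level error. Neither one says where the error sits or what kind of change caused it.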

Under scrutiny

As medical imaging platforms evolve, even subtle changes in how images are rendered on new viewers can alter how they appear to radiologists, despite the underlying data remaining unchanged. These variations, while often imperceptible to the untrained eye, can influence clinical interpretation.

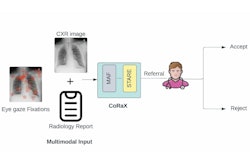

Differences between the rendered image used for diagnosis and the original image used to train an AI algorithm can degrade the performance of the resulting model. Ensuring that newly rendered images remain faithful to the original diagnostic intent is therefore becoming a critical priority.
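One common source of such rendering differences is the display window applied to the stored pixel data. The sketch below implements the linear window center/width mapping defined for DICOM VOI LUTs, which converts a stored value such as a CT Hounsfield unit into a display level. The 40/400 soft-tissue window is a typical CT preset. The 40/350 window is a hypothetical variant standing in for a slightly different viewer default.

```python
def render_pixel(value: float, center: float, width: float,
                 y_min: int = 0, y_max: int = 255) -> int:
    """Map a stored value to a display level with the DICOM linear
    window center/width function, rounded to an integer display level."""
    lower = center - 0.5 - (width - 1) / 2
    upper = center - 0.5 + (width - 1) / 2
    if value <= lower:   # below the window: clip to darkest level
        return y_min
    if value > upper:    # above the window: clip to brightest level
        return y_max
    scaled = ((value - (center - 0.5)) / (width - 1) + 0.5) * (y_max - y_min) + y_min
    return round(scaled)


# The same stored value (100 HU) rendered under two nearby window settings.
print(render_pixel(100, center=40, width=400))  # 166
print(render_pixel(100, center=40, width=350))  # 172
```

The underlying data is unchanged, but the displayed intensity shifts by several gray levels. If a model was trained on images rendered one way and deployed on images rendered the other way, it sees systematically different inputs.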

Vaibhavi Sonetha, PhD.

Furthermore, image fidelity between original and rendered images has traditionally been evaluated using pixel-based metrics like MSE and PSNR. These metrics prioritize mathematical precision and often fail to capture perceptual or diagnostic relevance. At the same time, more than three-quarters of AI-based medical devices authorized by the U.S. Food and Drug Administration (FDA) analyze CT scans, MRIs, and x-rays, so rendering fidelity bears directly on most of them. This raises a deeper question: Do conventional fidelity assessments align with real-world clinical decision-making?

The industry is already signaling a shift. A recent sentiment analysis[2] found that 55% of discussions about AI in medical imaging expressed positive views, highlighting its potential to improve diagnostic accuracy and efficiency. This optimism reflects a growing reliance on AI-driven insights. Advanced fidelity evaluation methods are proving essential for ensuring that diagnostic images are interpreted consistently across systems and software platforms.

Illusion of perfection

Conventional full-reference image quality assessment (FR-IQA) models compare a processed image against a reference image. They are still widely used in medical imaging to evaluate rendering pipelines, acquisition methods, and image transformations, especially the models based on pixel-wise comparisons. However, by treating image quality as a purely numerical problem, these models fail to account for the perceptual and diagnostic complexities of medical imaging. This disconnect creates critical blind spots in AI-assisted diagnosis.
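A toy example makes the blind spot concrete. Two very different distortions of the same flat patch can produce identical pixel-wise scores. The first is a uniform brightness shift, such as a slightly different display window. The second is a checkerboard of ±5 that adds texture absent from the original. The patch values are illustrative, not taken from a real scan.

```python
def mse(a: list[list[float]], b: list[list[float]]) -> float:
    """Mean squared error over all pixels of two equally sized images."""
    diffs = [(p - q) ** 2 for ra, rb in zip(a, b) for p, q in zip(ra, rb)]
    return sum(diffs) / len(diffs)


# A flat 4x4 patch at intensity 100 (toy data).
original = [[100.0] * 4 for _ in range(4)]

# Distortion 1: uniform +5 brightness shift; structure is untouched.
shifted = [[p + 5 for p in row] for row in original]

# Distortion 2: alternating +5/-5 checkerboard; introduces spurious texture.
checker = [
    [p + (5 if (r + c) % 2 == 0 else -5) for c, p in enumerate(row)]
    for r, row in enumerate(original)
]

print(mse(original, shifted), mse(original, checker))  # 25.0 25.0
```

Because PSNR is computed from MSE, the two distortions also receive the same PSNR (about 34.15 dB for 8-bit data). A pixel-wise score cannot tell a benign global shift from an artifact that changes apparent tissue texture.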

There is therefore a clear need for advanced techniques that address these challenges and set new standards for medical image fidelity.

Pixels to perception

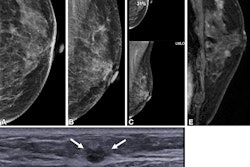

Radiologists rely on context, medical expertise, and years of pattern recognition experience to identify structural distortions, contrast variations, and textural inconsistencies that could indicate critical conditions. Inspired by this, the industry is now embracing perception-based IQA frameworks, designed to emulate human vision.

Some of these methods, such as the perceptual difference model (PDM), use a computational model of human vision that replicates retinal optics, spatial contrast sensitivity, and visual cortex frequency processing. Such a model generates a spatial map of visual differences between two images, highlighting distortions that could affect clinical interpretation. The broader family of perception-oriented metrics also includes the structural similarity index (SSIM) family, learned perceptual image patch similarity (LPIPS), and deep image structure and texture similarity (DISTS), among others.
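The difference map these models produce is easiest to picture through a deliberately simplified stand-in. The sketch below is not the PDM. It skips the optics, contrast-sensitivity, and cortical filtering stages and only averages absolute differences over non-overlapping tiles. What it does show is the output format: a map that localizes where two images differ instead of a single global score. The function name `difference_map` and the 2×2 tile size are hypothetical choices.

```python
def difference_map(original: list[list[float]],
                   rendered: list[list[float]],
                   block: int = 2) -> list[list[float]]:
    """Mean absolute difference for each non-overlapping block x block tile.
    A simplified illustration only; a vision model would filter first."""
    rows, cols = len(original), len(original[0])
    result = []
    for r0 in range(0, rows, block):
        map_row = []
        for c0 in range(0, cols, block):
            diffs = [
                abs(original[r][c] - rendered[r][c])
                for r in range(r0, min(r0 + block, rows))
                for c in range(c0, min(c0 + block, cols))
            ]
            map_row.append(sum(diffs) / len(diffs))
        result.append(map_row)
    return result


# A localized change confined to the top-left 2x2 region of a 4x4 patch.
original = [[100.0] * 4 for _ in range(4)]
rendered = [row[:] for row in original]
for r in range(2):
    for c in range(2):
        rendered[r][c] += 20

print(difference_map(original, rendered))  # [[20.0, 0.0], [0.0, 0.0]]
```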

Unlike pixel-based metrics, perception-driven IQA prioritizes:

- Structural integrity: Ensuring that medical images retain their anatomical accuracy and diagnostic reliability

- Contrast and texture consistency: Accounting for subtle variations that influence clinical decisions

- Cross-modality standardization: Making sure MRI, CT, PET, and ultrasound images maintain fidelity across imaging platforms

Key drivers

Deep learning has transformed medical imaging by making image fidelity assessments more clinically meaningful and adaptable to AI-driven workflows. From feature maps to similarity indexes, the technology has been setting the bar higher with models such as:

- Learned Perceptual Image Patch Similarity (LPIPS): Extracts feature maps from both images with a pretrained convolutional neural network (CNN) and applies a weighted distance metric to those features to assess visual similarity. Because deep features track perceived differences more closely than raw pixel values, LPIPS helps fidelity checks focus on clinically significant variations rather than being misled by pixel-level discrepancies.

- Deep Image Structure & Texture Similarity (DISTS): Unlike most IQA models, DISTS explicitly accounts for both structure and texture perception. This makes it well suited to judging whether compressed, processed, or modified medical images retain critical diagnostic fidelity.

- Structural Similarity Index (SSIM) and Multi-Scale Structural Similarity (MS-SSIM): SSIM compares luminance, contrast, and structure to estimate perceived image distortion. MS-SSIM extends this concept by operating at multiple resolutions, allowing image fidelity to be assessed across diverse imaging modalities. By evaluating both fine-scale detail and coarse-scale structure, MS-SSIM helps maintain structural consistency across imaging platforms.
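The SSIM comparison can be sketched directly from its standard definition. It multiplies a luminance term by a combined contrast-structure term and uses the usual stabilizing constants C1 = (0.01·L)² and C2 = (0.03·L)², where L is the data range. This is a simplification. It computes a single global window over the whole image, whereas standard SSIM slides a local (typically 11×11 Gaussian) window and averages the resulting map. MS-SSIM goes further, repeating the contrast-structure comparison on progressively downsampled copies and combining the scales with per-scale exponents. The toy patches mirror the equal-MSE example of a uniform shift versus a checkerboard.

```python
def ssim_global(x: list[list[float]], y: list[list[float]],
                data_range: float = 255.0,
                k1: float = 0.01, k2: float = 0.03) -> float:
    """Single-window SSIM over whole images (simplified; no sliding window)."""
    xs = [p for row in x for p in row]
    ys = [p for row in y for p in row]
    n = len(xs)
    mu_x, mu_y = sum(xs) / n, sum(ys) / n
    var_x = sum((a - mu_x) ** 2 for a in xs) / n
    var_y = sum((b - mu_y) ** 2 for b in ys) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(xs, ys)) / n
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2
    # Luminance: compares mean intensities.
    luminance = (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)
    # Contrast-structure: compares variances and their co-variation.
    contrast_structure = (2 * cov_xy + c2) / (var_x + var_y + c2)
    return luminance * contrast_structure


original = [[100.0] * 4 for _ in range(4)]
shifted = [[p + 5 for p in row] for row in original]
checker = [[p + (5 if (r + c) % 2 == 0 else -5) for c, p in enumerate(row)]
           for r, row in enumerate(original)]

print(round(ssim_global(original, shifted), 3))  # 0.999
print(round(ssim_global(original, checker), 3))  # 0.701
```

Both distortions have an MSE of 25, but SSIM barely penalizes the uniform shift and strongly penalizes the spurious checkerboard texture. This is the kind of separation that pixel-wise metrics miss.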

Each metric has distinct advantages and limitations, and its suitability is determined by factors such as the specific application, nature of distortions, computational demands, and perceptual accuracy requirements.
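For readers who want the formal definitions behind the LPIPS and DISTS descriptions above, the block below restates them following their original papers (Zhang et al., 2018; Ding et al., 2020). The notation is lightly adapted. In LPIPS, $\hat{y}^{l}$ are channel-normalized activations from layer $l$ of a pretrained network and $w_l$ are learned per-channel weights. In DISTS, $\tilde{x}^{(i)}_j$ is the $j$-th feature map at stage $i$, with stage 0 being the input image itself. Its $l$ term compares global feature means (texture) and its $s$ term compares correlations (structure), with learned weights $\alpha_{ij}, \beta_{ij}$.

```latex
% LPIPS: channel-weighted, spatially averaged squared feature differences
d_{\mathrm{LPIPS}}(x, x_0) = \sum_{l} \frac{1}{H_l W_l} \sum_{h,w}
  \left\lVert w_l \odot \left( \hat{y}^{l}_{hw} - \hat{y}^{l}_{0,hw} \right) \right\rVert_2^2

% DISTS: texture (mean) and structure (correlation) terms per feature map
l(\tilde{x}, \tilde{y}) = \frac{2\mu_{\tilde{x}}\mu_{\tilde{y}} + c_1}{\mu_{\tilde{x}}^2 + \mu_{\tilde{y}}^2 + c_1},
\qquad
s(\tilde{x}, \tilde{y}) = \frac{2\sigma_{\tilde{x}\tilde{y}} + c_2}{\sigma_{\tilde{x}}^2 + \sigma_{\tilde{y}}^2 + c_2}

D_{\mathrm{DISTS}}(x, y) = 1 - \sum_{i=0}^{m} \sum_{j=1}^{n_i}
  \left( \alpha_{ij}\, l\big(\tilde{x}^{(i)}_j, \tilde{y}^{(i)}_j\big)
       + \beta_{ij}\, s\big(\tilde{x}^{(i)}_j, \tilde{y}^{(i)}_j\big) \right),
\qquad \sum_{i,j} \left( \alpha_{ij} + \beta_{ij} \right) = 1
```

Note the direction of each score. LPIPS is a distance, so 0 means identical. DISTS is likewise 0 for identical images. SSIM is a similarity that approaches 1 for identical images.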

Why perception-driven IQA outperforms

For AI-assisted diagnostics to be reliable and effective, image fidelity must maintain anatomical accuracy and diagnostic clarity. Perception-based IQA delivers this reliability and effectiveness by mirroring how radiologists interpret visual data, ensuring that medical images reflect true-to-life anatomical structures rather than just mathematical approximations.

Simultaneously, it aligns with how AI systems analyze imaging inputs, refining their ability to detect clinically significant patterns and deviations. This leads to greater diagnostic accuracy, workflow efficiency, and cross-modality consistency. Advancements in perception-driven IQA redefine medical imaging fidelity with advantages such as:

- Enhanced diagnostic confidence: Unlike pixel-based assessments, perception-based IQA prioritizes anatomical structures, contrast integrity, and texture fidelity, ensuring that radiologists can trust the diagnostic accuracy of the images they review.

- Optimized AI performance: Verifying fidelity between the original and rendered images minimizes model errors caused by variations in display settings, contrast adjustments, or processing artifacts. The result is models that are more consistent, interpretable, and aligned with clinical expectations, ultimately performing better in real-world medical applications.

Next-gen image fidelity

As the healthcare industry undergoes rapid digital transformation, medical image fidelity standards must evolve to support AI-driven innovation and clinical accuracy alike.

The next generation of medical imaging will not be defined by what’s visible but by what’s actionable. As AI systems become more autonomous and decision-support tools grow in sophistication, the demand will shift from mere image clarity to diagnostic intent recognition. Perception-based IQA plays a critical role here by quantifying fidelity in ways that reflect both how radiologists see and how algorithms reason.

It enables consistency in image interpretation across diverse systems, user environments, and AI models, helping standardize diagnostic outcomes even when different tools are in play. By embedding perception-aligned fidelity checks into the imaging workflow, healthcare providers can confidently scale AI adoption without compromising diagnostic integrity. Organizations that prioritize perception-based IQA today will improve diagnostic precision and lead the way in defining standards for AI-integrated healthcare.

Ravinder Singh is senior vice president of Citius Healthcare Consulting and Vaibhavi Sonetha, PhD, is assistant vice president.

The comments and observations expressed herein do not necessarily reflect the opinions of AuntMinnie.com, nor should they be construed as an endorsement or admonishment of any particular vendor, analyst, industry consultant, or consulting group.

References

[1] https://www.grandviewresearch.com/industry-analysis/artificial-intelligence-medical-imaging-market