In a study by researchers from London, virtual colonoscopy (VC) computer-aided detection (CAD) software beat the experts in detecting medium-sized polyps, though the system is designed to work alongside radiologists rather than compete with them.

CAD marked a number of false positives, too, and the authors cautioned that even correct CAD marks would translate into higher sensitivity only if the radiologists interpreted them correctly. Still, the study made it clear that the software could aid the detection of nondiminutive polyps, the authors wrote.

"Inconsistent accuracy (in large VC trials) is likely multifactorial, possibly due to differing scanning parameters, quality of bowel preparation, the use of tagging agents, and the method of primary interpretation," wrote Drs. Stuart Taylor, Steve Halligan, David Burling, and colleagues from St. Mark's and Northwick Park Hospitals, University Hospital, and Charing Cross Hospital in London. "However, it is increasingly clear that individual variation in the interpretive capabilities of the reviewers plays a significant role. A definite, as yet undefined, learning curve exists for interpreting CT colonography examinations, and perceptual errors perhaps account for more than 50% of missed lesions" (American Journal of Roentgenology, March 3, 2006, Vol. 186:3, pp. 696-702).

Studies to date have mainly evaluated colon CAD algorithms as a second reader. The system used in the present study (ColonCAR, version 1.2, MedicSight, London), however, can be used interactively at the time of primary interpretation, allowing the reader to adjust the polyp detection filters to clinical requirements, for example by accepting lower sensitivity for small polyps in exchange for higher specificity, according to the authors.

The researchers sought to compare the sensitivity of the CAD software's polyp-enhancement filter for polyp detection with that of expert reviewers.

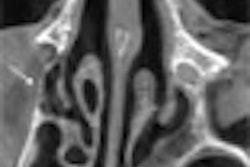

In the study, colonoscopy-validated VC datasets (prone and supine) from five institutions were divided into training and test sets according to the time of accrual. There were 242 training cases (159 men and 83 women, median age 62 years), including 147 average-risk screening patients with the rest higher-risk or symptomatic. A subset of 45 training cases containing 100 polyps 6 mm or larger underwent batch analysis using the ColonCAR software to determine optimal polyp-enhancement filter settings for polyp detection, the authors wrote.

The data were acquired using 1.25-mm collimation in 38 cases, 2.5-mm collimation in 193 cases, and 3-mm collimation in 11 cases; 120-140 kVp; and 25 mAs in five cases, 50 mAs in 30 cases, 100 mAs in 181 cases, 120 mAs in 21 cases, and 240-320 mAs in three cases.

Twenty-five consecutive positive test datasets were interpreted individually by three radiologists, each with experience interpreting at least 200 VC cases, who were unaware of the colonoscopy results. The datasets were read on a workstation equipped with VC software (MedicColon, version 1.1, MedicSight).

There was a determined effort to find the polyps identified at colonoscopy, as well as a careful search for any endoscopic false negatives suggested by the VC results. The ColonCAR software was then applied to the test cases using polyp-enhancement filter settings that had been optimized on the training cases, and the false positives were classified according to their importance.

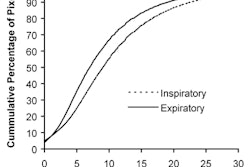

The 25 test cases contained 32 polyps ranging from 6 to 35 mm in diameter. ColonCAR identified 26 (81%) of the 32 polyps, compared with an average overall sensitivity of 78% for the expert readers.

"The average sensitivity of the three expert reviewers unaided for polyp detection against the consensus-derived reference panel with optical colonoscopy for lesions of 6-9 mm, 10 mm and greater, and overall was 70% (14/20), 92% (11/12), and 78% (25/32), respectively," Taylor and colleagues wrote. "The corresponding figures for the polyp-enhancement filter in isolation were 75% (15/20), 92% (11/12), and 81% (26/32), respectively. The polyp-enhancement filter highlighted all but one polyp 10 mm or larger, missing one 11-mm lesion that was submerged in tagged fluid on both the supine and prone datasets."

The filter missed five lesions measuring 6-9 mm; according to expert reader consensus, all but one were visible on both the supine and prone datasets.
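As a rough check on the arithmetic, the short Python sketch below recomputes sensitivity (polyps detected divided by polyps present) from the counts quoted by Taylor and colleagues. The variable and function names are illustrative only and are not part of the ColonCAR or MedicColon software; the expert figures are the reported three-reader averages.

```python
# Sensitivity = polyps detected / polyps present, expressed as a percentage.
# Counts are those quoted in the study; the names used here are illustrative.
reported = {
    # size band: (expert average detected, filter detected, polyps present)
    "6-9 mm": (14, 15, 20),
    "10 mm or larger": (11, 11, 12),
    "overall": (25, 26, 32),
}


def sensitivity(detected: int, present: int) -> float:
    """Return sensitivity as a percentage of the polyps present."""
    return 100.0 * detected / present


for band, (expert, cad, total) in reported.items():
    print(
        f"{band}: experts {sensitivity(expert, total):.0f}%, "
        f"filter {sensitivity(cad, total):.0f}%"
    )
```

Rounded to whole percentages, the output matches the 70%/75%, 92%/92%, and 78%/81% figures the authors reported.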

"All of the polyps missed by experts 1 (n = 4) and 2 (n = 3), and 12 (86%) of 14 polyps missed by expert 3 were detected by ColonCAR," the authors noted. "The median number of false-positive highlights per case was 13, of which 91% were easily dismissed." Still, the number of false positives was large compared to other studies.

The rest required further investigation with supine-prone correlation, multiplanar reconstructions, or 3D problem solving; most turned out to be residual fecal matter or bulbous haustral folds. A few intracolonic or extracolonic findings were easily dismissed on 2D scrolling through the data.
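The per-reader figures reported by the authors (expert 1 missed four polyps, expert 2 missed three, and expert 3 missed 14, of which the filter highlighted 12) can be cross-checked against the 25/32 average with a few lines of Python. This is a sketch for illustration; the dictionary names are hypothetical and the only inputs are the counts stated in the article.

```python
# Per-reader misses and how many of them the polyp-enhancement filter highlighted,
# using the counts stated in the article. Names are hypothetical.
TOTAL_POLYPS = 32
misses = {"expert 1": 4, "expert 2": 3, "expert 3": 14}
flagged_by_cad = {"expert 1": 4, "expert 2": 3, "expert 3": 12}


def share_caught(reader: str) -> float:
    """Percentage of a reader's missed polyps that the filter highlighted."""
    return 100.0 * flagged_by_cad[reader] / misses[reader]


for reader in misses:
    print(
        f"{reader}: filter highlighted {flagged_by_cad[reader]} of "
        f"{misses[reader]} misses ({share_caught(reader):.0f}%)"
    )

# Unaided detections per reader are 28, 29, and 18; their mean should equal
# the reported average of 25 polyps detected out of 32.
mean_detected = sum(TOTAL_POLYPS - m for m in misses.values()) / len(misses)
print(f"mean unaided detections: {mean_detected} of {TOTAL_POLYPS}")
```

The mean of 25 detections agrees with the reported 78% (25/32) average, and expert 3's 12 of 14 rounds to the 86% quoted by the authors.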

"It is interesting that the polyp-enhancement filter detected all polyps missed by reviewers 1 and 2, and all but two polyps missed by reviewer 3, suggesting the software is complementary even to expert reviewers," the team wrote. Assuming all reviewers had correctly classified objects highlighted by the filter, the potential contribution of CAD to the reviewers' sensitivity was 10%, 13%, and 38% for reviewers 1-3, respectively.

For this to occur, of course, the reviewers would need to interpret the system's highlighted areas correctly. Because the system is intended to work synergistically with the reviewer, it cannot replace "the fundamental need for adequate reviewer training in the interpretation of (VC) datasets," the researchers wrote.

Study limitations included an indirect design, which did not directly assess reviewer performance with and without the polyp-enhancement filter, and the lack of any comparison of interpretation times.

"One strength of the protocol was that the test cases were entirely novel to the software because it had been developed using other data (i.e., the training set)," the group wrote.

The sensitivity of the early-version software package tested at default settings is complementary to the performance of expert reviewers of VC data, the researchers concluded.

"Further work is required to optimize the polyp-enhancement filter settings according to the individual case specifics, and to assess directly the clinical impact of the software on reviewer performance and productivity," they wrote.

By Eric Barnes

AuntMinnie.com staff writer

March 16, 2006

Copyright © 2006 AuntMinnie.com