Can computer workers without medical training distinguish true polyps from false ones as effectively as virtual colonoscopy computer-aided detection (CAD) software? Actually, they do almost as well as CAD -- and sometimes better -- according to a study of Web-based "crowdsourcing" applied to polyp classification.

Researchers from the U.S. National Institutes of Health found no significant difference in performance for polyps 6 mm or larger between the group's CAD algorithm and minimally trained knowledge workers reading in a distributed human intelligence, or "crowdsourcing," model. Furthermore, the novice readers were able to achieve this level of performance with substantially fewer training cases than CAD, the group reported.

"Crowdsourcing definitely does have a future in this kind of application," wrote co-investigator Dr. Ronald Summers, PhD, in an email to AuntMinnie.com. "The advantage of crowdsourcing is that the experiments can be done rapidly and many more interventions can be tried. For example, it might be possible to try different types of training for CAD systems to see which is most effective."

Origins of crowdsourcing

Web-based crowdsourcing, first used in 2005, has generally been confined to business and social sciences research, explained Tan Nguyen; Shijun Wang, PhD; Summers; and colleagues in Radiology (January 24, 2012). As a tool for evaluating observer performance, crowdsourcing in the form of distributed human intelligence is more efficient "than traditional recruitment methods that may require substantial financial resources and physical infrastructure," they wrote.

Crowdsourcing assigns tasks traditionally performed by specific individuals to a group of people, relying on the hypothesis that multiple decision-makers together are more accurate than any one individual.
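The intuition behind that hypothesis can be illustrated with a short calculation. The sketch below is not from the study; it assumes, purely for illustration, that every reader is right with the same probability, that readers decide independently, and that the panel size is odd so a majority vote cannot tie. The function name and the example accuracy of 0.7 are hypothetical.

```python
from math import comb


def majority_vote_accuracy(n_voters: int, p_correct: float) -> float:
    """Probability that a strict majority of independent voters is correct.

    Assumes each voter is correct with the same probability `p_correct`
    and that votes are independent (an idealization, not a study finding).
    """
    if n_voters % 2 == 0:
        raise ValueError("use an odd number of voters to avoid ties")
    needed = n_voters // 2 + 1  # smallest number of correct votes that wins
    return sum(
        comb(n_voters, k) * p_correct**k * (1 - p_correct) ** (n_voters - k)
        for k in range(needed, n_voters + 1)
    )


if __name__ == "__main__":
    for n in (1, 3, 11, 21):
        print(n, round(majority_vote_accuracy(n, 0.7), 3))
```

Under these assumptions the panel's accuracy rises with its size whenever individual accuracy is above 0.5; real readers are neither identical nor independent, so the gain in practice may be smaller.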

Nguyen and colleagues obtained their distributed human intelligence data from a group of minimally trained individuals the researchers described as "knowledge workers." They used the information as a classifier to distinguish true- from false-positive colorectal polyp candidates in conjunction with CAD-detected polyp candidates in virtual colonoscopy (also known as CT colonography or CTC) data.

The CTC images were obtained from 24 randomly selected patients from a study by Pickhardt et al; all patients had at least one colorectal polyp 6 mm or larger in diameter (New England Journal of Medicine, December 4, 2003, Vol. 349:23, pp. 2191-2200). Patients were scanned prone and supine with MDCT after cathartic bowel preparation and insufflation with room air.

The researchers' previously validated investigational CAD software was used to analyze the data, identifying 268 polyp candidates using a leave-one-out test paradigm that trained the CAD algorithm based on the other 23 cases.

For each patient treated as the test case, CAD was trained using the other cases to create a set of polyp detections and their associated support vector machine (SVM) committee classifier scores for the test case. The procedure was repeated for each of the 24 study subjects, yielding an average of 99 polyp candidates per patient. Forty-seven of the 2,374 total detections made by CAD represented a total of 26 polyps 6 mm or larger, with their presence confirmed at optical colonoscopy.
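The leave-one-out paradigm described above can be sketched as a patient-level split. This is a minimal illustration, not the researchers' software: the tuple layout `(patient_id, features, label)` and the function name are assumptions, and the classifier training step (an SVM committee in the study) is left out.

```python
from collections import defaultdict


def leave_one_patient_out(candidates):
    """Yield (test_patient, train, test) splits, one per patient.

    `candidates` is an iterable of (patient_id, features, label) tuples;
    this layout is hypothetical and chosen only for the example.
    """
    by_patient = defaultdict(list)
    for cand in candidates:
        by_patient[cand[0]].append(cand)

    for test_patient in sorted(by_patient):
        test = by_patient[test_patient]
        # Train on every candidate that belongs to a different patient.
        train = [
            cand
            for pid, group in by_patient.items()
            if pid != test_patient
            for cand in group
        ]
        yield test_patient, train, test


if __name__ == "__main__":
    toy = [("p1", [0.2], 0), ("p1", [0.9], 1), ("p2", [0.4], 0), ("p3", [0.8], 1)]
    for patient, train, test in leave_one_patient_out(toy):
        print(patient, len(train), len(test))
```

Splitting by patient rather than by candidate keeps all detections from the test patient out of training, which is the point of holding out one case at a time.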

Twenty knowledge workers participating in a commercial crowdsourcing platform labeled each polyp candidate as a true or false polyp. The researchers believed that workers with minimal training would be able to recognize differences in 3D shapes and other features of polyp candidates on CTC images that would enable them to classify polyps.
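The article does not say how the 20 labels per candidate were combined into a single classifier output. One simple possibility, assumed here only for illustration, is to score each candidate by the fraction of workers who called it a true polyp. The function name and the data layout are hypothetical.

```python
def vote_fraction_scores(labels_by_candidate):
    """Map each candidate id to the fraction of workers voting "true polyp".

    `labels_by_candidate` maps a candidate id to a list of booleans, one
    per worker (True = labeled as a true polyp). This aggregation rule is
    an assumption, not necessarily the one used in the study.
    """
    scores = {}
    for cand_id, votes in labels_by_candidate.items():
        if not votes:
            raise ValueError(f"candidate {cand_id!r} has no labels")
        scores[cand_id] = sum(1 for v in votes if v) / len(votes)
    return scores


if __name__ == "__main__":
    labels = {
        "cand_a": [True] * 17 + [False] * 3,   # most workers say true polyp
        "cand_b": [True] * 4 + [False] * 16,   # most workers say false polyp
    }
    print(vote_fraction_scores(labels))
```

A continuous score of this kind, rather than a single yes/no majority decision, is what allows a crowd to be evaluated with the same ROC analysis as a CAD classifier score.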

Before starting their task, the novice readers were given minimal background information about colonic polyps and were shown a set of five images of each polyp candidate. They were also shown images of true- and false-positive candidates but were blinded to the results as well as the proportion of candidates that were true or false polyps. The workers were permitted to label a specific polyp candidate only once, but there were no restrictions on the number of assignments they could complete.

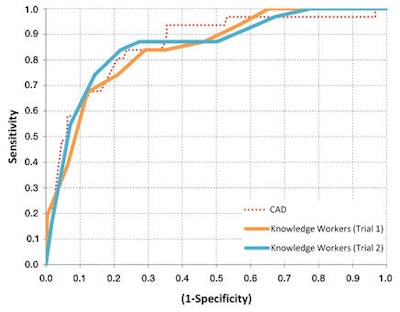

The researchers conducted two trials involving 228 workers to assess reproducibility of the method, evaluating its performance by comparing the area under the receiver operating characteristic (ROC) curve (AUC) of the novice readers with the AUC of CAD for polyp classification as true or false.
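For readers unfamiliar with the metric, the AUC equals the probability that a randomly chosen true polyp receives a higher score than a randomly chosen false one, with ties counted as half. The sketch below computes it directly from that definition; it is a generic illustration with made-up scores, not the statistical software used in the study, and it does not reproduce the reported standard errors or p-values.

```python
def auc(scores, labels):
    """Area under the ROC curve via pairwise comparison (Mann-Whitney form).

    `scores` are classifier outputs (higher = more polyp-like) and
    `labels` are truthy for true polyps, falsy for false positives.
    """
    pos = [s for s, y in zip(scores, labels) if y]
    neg = [s for s, y in zip(scores, labels) if not y]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative")

    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # true polyp ranked above false positive
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Hypothetical scores for two true polyps and two false positives.
    print(auc([0.9, 0.2, 0.8, 0.1], [1, 1, 0, 0]))
```

The pairwise form is quadratic in the number of candidates, which is acceptable for a few hundred detections; a rank-based formulation would be preferable for much larger sets.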

There was no significant difference in performance between the distributed human intelligence model and the CAD algorithm for polyps 6 mm or larger, Nguyen and colleagues wrote.

"More important, distributed human intelligence was able to achieve this level of performance with substantially fewer training cases than CAD," they wrote. Knowledge workers "were only shown 11 polyp candidates for training, whereas CAD was trained by using ground truth data for 2,374 polyp candidates generated from 24 patients."

The detection-level area under the curve for the novice readers was 0.845 ± 0.045 in trial 1 and 0.855 ± 0.044 in trial 2. The results were not significantly different from the AUC for CAD, which was 0.859 ± 0.043, Nguyen and colleagues reported.

When polyp candidates were stratified by difficulty, the knowledge workers performed better than CAD on easy detections; AUCs were 0.951 ± 0.032 in trial 1 and 0.966 ± 0.027 in trial 2, compared with 0.877 ± 0.048 for CAD (p = 0.039 for trial 2).

Comparison of receiver operating characteristic curves for CAD, novice readers in trial 1, and novice readers in trial 2. All three classifiers show similar performance, with AUCs of 0.859, 0.845, and 0.855, respectively. Image republished with permission of the RSNA from Radiology (January 24, 2012).

The performance advantage of the novice readers over CAD for easy polyp candidates suggests that certain features associated with easy polyp candidates, such as shape, geometry, or location in the context of the local environment, enable humans to easily classify polyps, the authors stated.

"In contrast, the CAD algorithm, which is strongly based on surface curvature, may not have evaluated the polyp candidates by using these same features, thus making it more difficult for CAD to distinguish between true and false polyps," they wrote.

In addition, the novice readers who participated in both trials showed significant improvement in performance going from trial 1 to trial 2. For this group of readers, AUCs were 0.759 ± 0.052 in trial 1 and 0.839 ± 0.046 in trial 2 (p = 0.041).

"Even though the [knowledge workers] were not given feedback on their performance at any point, they were still able to develop an intuition in regard to the features associated with true polyps and false polyps," the authors wrote.

Accurate in the aggregate

The study results indicate that distributed human intelligence is reliable for high-quality classification of colorectal polyps, the authors concluded. The use of distributed human intelligence may also provide insights that guide future CAD development.

A proposed fusion classifier that integrates different features the novice readers used to classify polyp candidates has already shown promise for improving overall responses, according to the group.

"We have preliminary data ... [indicating] that we can use crowdsourcing to identify interventions (better training, better image display) that lead to better performance by human observers when they use computer-aided polyp detection," Summers wrote in his email. "The same interventions could potentially be adapted to improve performance by experts (like radiologists)."