Using midlevel image features in computer-aided detection (CAD) algorithms represents a step back from the latest deep machine-learning techniques, but such features produce a more transparent process with potentially more accurate results, according to new research from the U.S. National Institutes of Health (NIH).

Incorporated into a CAD scheme used to detect enlarged lymph nodes, the midlevel image features yielded better results in most cases than either low-level features based on a histogram of oriented gradients (HOG) technique or high-level machine-learning features based on a convolutional neural network (CNN), the NIH team reported.

The system involving the so-called midlevel cue maps "outperformed the system that only used the raw intensity and the HOG features, and it outperformed the latest CNN system," said Dr. Ronald Summers, PhD, in a presentation at a CAD session at RSNA 2015. Summers is a senior investigator and chief of image processing at the NIH Clinical Center.

Dr. Ronald Summers, PhD, from the NIH.

Detecting enlarged lymph nodes (those ≥ 10 mm) is critical for cancer staging and assessment of treatment response, but lymph nodes are hard to see on CT because they share similar CT attenuation values with surrounding tissues and are not enhanced with contrast. Manual delineation of lymph nodes is time-intensive and variable, so better, automated ways are needed to visualize the nodes reliably, Summers said.

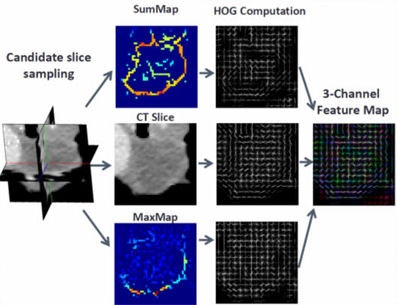

"Our core hypothesis is that by leveraging midlevel, semantic boundary cues of lymph nodes, we can develop enhanced input for HOG computation," he said.

The enhanced input maps are learned from manual annotations produced by radiologists on a training set of CT scans of lymph nodes. The process produces a three-channel feature map containing higher-level semantic information derived from lymph node images. These features are used to train a support vector machine (SVM) for binary classification of 2D views as "either containing a lymph node or not," Summers said. The SVM scores for views sampled from the same volume of interest are averaged to obtain the final candidate-level predictions.

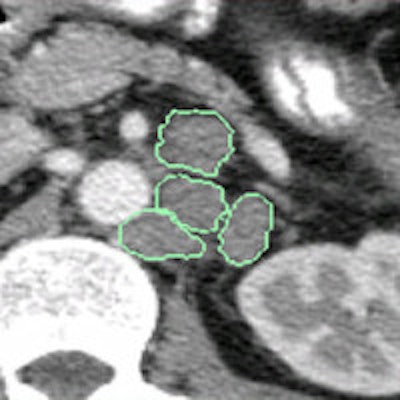

In their study, Summers and co-investigators Ari Seff and Le Lu, PhD, used CT data from 176 patients to create an automated system for detecting enlarged mediastinal and abdominal lymph nodes on CT scans. Their approach makes a low-level machine-learning method "deeper" by adding midlevel cues.

Better alternatives needed

Low-level features alone can't reliably distinguish lymph nodes at CT, Summers told AuntMinnie.com. But the use of midlevel features enables a higher level of abstraction -- "similar to the way our brain takes low-level features like edges and constructs objects and faces in our minds," he said.

The addition of midlevel boundary cues to the low-level data provided by the radiologists' manual tracings "provided the little bit extra that was needed to boost the performance of the low-level systems," he said.

The retrospective study included two lymph node CT datasets: 90 patients with 388 mediastinal lymph nodes and 86 patients with 595 abdominal lymph nodes. All CT images were acquired in the portal venous phase with a slice thickness of 1 mm to 1.25 mm.

A radiologist manually examined each scan, segmenting the enlarged lymph nodes and sampling 2D views of voxels for each lymph node candidate in three planes. From these, the group extracted 15 x 15-pixel squares of the lymph node boundaries and used a technique called sketch tokens to extract shapes within these regions using k-means clustering with 150 representative lymph node contour classes.

The sketch tokens "look like little binary images showing the various types of shapes that occur on the boundaries of lymph nodes," Summers said.
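The clustering step can be illustrated with a small example. The following is a minimal sketch, not the NIH group's implementation: it assumes the clustering runs directly on flattened binary patches (the presentation did not specify the representation), it uses two clusters rather than 150, and the helper names `contour_patch` and `kmeans` are hypothetical.

```python
SIZE = 15  # patch side length, matching the 15 x 15-pixel squares in the study


def contour_patch(orientation, noise_pixel):
    """Toy binary patch: one straight contour through the center plus one stray pixel."""
    patch = [[0] * SIZE for _ in range(SIZE)]
    mid = SIZE // 2
    for i in range(SIZE):
        if orientation == "vertical":
            patch[i][mid] = 1
        else:
            patch[mid][i] = 1
    row, col = noise_pixel
    patch[row][col] = 1 - patch[row][col]  # flip one pixel to vary the shape
    return [value for line in patch for value in line]  # flatten to 225 values


def sq_dist(a, b):
    """Squared Euclidean distance between two flattened patches."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(patches, k, iters=20):
    """Plain k-means; farthest-point initialization keeps the toy run deterministic."""
    centers = [list(patches[0])]
    while len(centers) < k:
        farthest = max(patches, key=lambda p: min(sq_dist(p, c) for c in centers))
        centers.append(list(farthest))
    labels = [0] * len(patches)
    for _ in range(iters):
        # Assignment step: each patch joins its nearest center.
        labels = [min(range(k), key=lambda j: sq_dist(p, centers[j])) for p in patches]
        # Update step: each center becomes the pixel-wise mean of its members.
        for j in range(k):
            members = [p for p, lab in zip(patches, labels) if lab == j]
            if members:
                centers[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centers


# Hypothetical data: three near-vertical and three near-horizontal contours.
patches = [contour_patch("vertical", px) for px in [(0, 0), (1, 3), (2, 12)]]
patches += [contour_patch("horizontal", px) for px in [(0, 0), (3, 1), (12, 2)]]

labels, centers = kmeans(patches, k=2)
# Rounding each center gives a binary "token" image for that contour class.
tokens = [[round(v) for v in center] for center in centers]
```

Each rounded cluster center plays the role of one token here: a small binary image of a representative boundary shape. With real annotations, the number of clusters would be set to the 150 contour classes the group described.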

An image transformation scheme was designed to produce enhanced input for low-level HOG features to make them "deeper." The scheme would learn lymph-node-selective visual responses from manual radiologist annotations. All images courtesy of Dr. Ronald Summers, PhD.

Enhanced three-channel feature map. Contained within the enhanced map is higher-level semantic information derived from the preprocessed lymph-node-selective visual responses. These features are then used to train an SVM for binary classification of 2D views as either containing a lymph node or not. The SVM scores for views sampled from the same volume of interest are averaged to obtain the final candidate-level predictions.

The patch-level gradient channels were used to train a random forest classifier for contour classification.

"Using the small patches from the CT images, the random forest classifier figures out which sketch most closely approximates these particular patches," he said.

Midlevel boundary cues look like nothing more than squiggly lines, Summers allowed, but they "eliminate lots of shapes that don't look like lymph nodes," he said. "There are a lot of other shapes in those parts of the body that are more linear than curved, and might have more jagged edges rather than smooth, so some of these things like smoothness and rate of curvature are what characterize lymph nodes. Things like bones, blood, and bowel get excluded."

Two sets of boundary cue maps were created, one for the abdomen and one for the mediastinum, to be used as additional inputs for HOG computation. The three feature sets were also used to train a linear support vector machine that could distinguish views containing lymph nodes from those that did not. The mean of the resulting view-level scores served as the lymph node candidate score.
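The final scoring step, averaging view-level classifier scores into one score per candidate, can be sketched in a few lines. This is an illustration only: the candidate identifiers and score values are made up, and `candidate_scores` is a hypothetical helper name rather than anything from the study.

```python
from collections import defaultdict
from statistics import mean


def candidate_scores(view_scores):
    """Average per-view classifier scores for each lymph node candidate.

    view_scores: iterable of (candidate_id, score) pairs, one pair per 2D view.
    """
    grouped = defaultdict(list)
    for candidate_id, score in view_scores:
        grouped[candidate_id].append(score)
    return {candidate_id: mean(scores) for candidate_id, scores in grouped.items()}


# Hypothetical SVM outputs for views sampled from two candidates.
views = [
    ("cand_a", 1.0), ("cand_a", 0.5), ("cand_a", 0.0),
    ("cand_b", -1.0), ("cand_b", -0.5),
]
scores = candidate_scores(views)
```

Averaging over several views means that a single misleading 2D slice is less likely to decide the fate of a candidate on its own.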

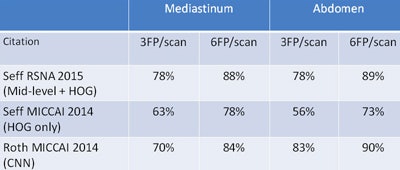

As for the results, a sixfold cross-validation process showed that the enhanced feature maps produced 15% to 23% greater recall than the baseline HOG (78% sensitivity versus 56% at three false positives per scan in the abdomen; 78% sensitivity versus 63% at three false positives per scan in the mediastinum).
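For readers unfamiliar with the metric, sensitivity at a fixed number of false positives per scan can be computed roughly as follows. This is a sketch under stated assumptions, not the evaluation code used in the study: it assumes each true-positive candidate corresponds to a distinct lymph node, ignores tied scores, and uses invented scores; `sensitivity_at_fp_rate` is a hypothetical name.

```python
def sensitivity_at_fp_rate(candidates, n_scans, n_true_nodes, max_fp_per_scan=3.0):
    """Highest sensitivity reachable without exceeding the false-positive budget.

    candidates: list of (score, is_true_node) pairs pooled over all scans.
    n_true_nodes: total annotated nodes, including any the candidates missed.
    """
    ranked = sorted(candidates, key=lambda c: c[0], reverse=True)
    true_pos = false_pos = 0
    best = 0.0
    # Lower the decision threshold one candidate at a time.
    for _score, is_true_node in ranked:
        if is_true_node:
            true_pos += 1
        else:
            false_pos += 1
        if false_pos / n_scans > max_fp_per_scan:
            break  # accepting this candidate would exceed the budget
        best = true_pos / n_true_nodes
    return best


# Hypothetical candidates from a single scan containing four true nodes.
toy = [
    (0.9, True), (0.8, False), (0.7, True), (0.6, False),
    (0.5, False), (0.4, True), (0.3, False), (0.2, True),
]
result = sensitivity_at_fp_rate(toy, n_scans=1, n_true_nodes=4)
```

In the toy case the threshold can drop until three false positives have been accepted, at which point three of the four true nodes have been found.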

The midlevel feature scheme (top) outperformed both the low-level HOG computations and the high-level CNN scheme.

Paired sample t-tests showed the improvement to be statistically significant over the low-level method (p < 0.01). The midlevel scheme even outperformed the state-of-the-art deep-learning system in the mediastinum (but not the abdomen), with 78% sensitivity versus 70% at three false positives per scan.
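A paired-sample t-test compares two methods on the same cases by testing whether the mean of the per-case differences is zero. The sketch below computes only the test statistic and degrees of freedom. The article does not say what quantities were paired, so the numbers are arbitrary placeholders rather than study data, and `paired_t_statistic` is a hypothetical helper.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t_statistic(a, b):
    """Return (t, degrees_of_freedom) for paired samples a and b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    standard_error = stdev(diffs) / sqrt(n)  # stdev uses the n - 1 denominator
    return mean(diffs) / standard_error, n - 1


# Arbitrary placeholder measurements for two methods on the same four cases.
method_a = [5, 7, 6, 8]
method_b = [4, 5, 5, 5]
t_stat, dof = paired_t_statistic(method_a, method_b)
```

Turning the statistic into a p-value requires the t distribution with `dof` degrees of freedom; in practice a routine such as SciPy's `scipy.stats.ttest_rel` handles both steps.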

"We propose a novel method for automated lymph node detection which leverages hybrid image feature maps based on object boundary cues," Summers concluded. "These learned maps can be used in place of or in addition to raw CT intensity images for HOG feature computation."

The evaluation "demonstrates that the midlevel information supplied by the new representations leads to an enhanced feature set for this complex object recognition task."