A prospective study in a live radiology clinical practice illustrates the potential for radiologist and generative AI collaboration to improve the delivery of clinical care, according to an article published June 5 in JAMA Network Open.

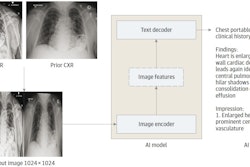

In the study, a generative AI model that drafts x-ray reports was integrated into the clinical workflow and was associated with a 15.5% gain in documentation efficiency, with no decrease in clinical accuracy. The model also flagged cases of clinically significant, unexpected pneumothorax, noted lead author Jonathan Huang, PhD, of Northwestern University in Chicago, and colleagues.

“Our results provide initial evidence for benefits of draft reporting using generative AI tools and a framework by which clinician-AI collaboration may effectively integrate into and improve existing clinical workflows,” the group wrote.

Considering increasing demand for radiological services on top of radiologist shortages worldwide, improving efficiency with the use of generative AI is of great interest, the researchers wrote. While retrospective studies using adapted and bespoke vision-language models have shown promise, prospective clinical evaluations of these models remain unpublished, they noted.

Thus, the group performed a clinical evaluation of a generative AI model capable of producing draft radiology reports for all plain x-rays, which was implemented within the live clinical workflow at their institution between November 2023 and April 2024.

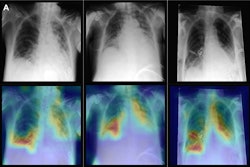

The researchers evaluated the association between the use of the generative model and radiologist documentation efficiency for x-rays documented with model assistance compared with a baseline set of radiographs without model use, matched by study type. Peer review was performed on model-assisted interpretations, and the authors also prospectively evaluated the accuracy of model-generated reports for flagging clinically significant, unexpected pneumothoraxes requiring physician intervention.

The datasets generated over the study period were derived from 122,411 x-ray studies: 23,960 studies from 14,460 unique patients were used to document the efficiency impact; 800 studies from 800 unique patients were used for peer review; and 97,651 studies from 73,881 unique patients were used to evaluate the model’s ability to flag pneumothorax.

According to the analysis, interpretations with model assistance (mean, 159.8 seconds) were faster than the baseline set of those without assistance (mean, 189.2 seconds), representing a 15.5% documentation efficiency increase, the authors reported.
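The reported percentage can be reproduced from the two mean times. The short sketch below assumes the 15.5% figure is a simple relative reduction in mean documentation time; the authors may have derived it from a more detailed statistical model, and the function name `percent_time_reduction` is purely illustrative.

```python
# Mean documentation times in seconds, as reported in the article.
baseline_seconds = 189.2   # interpretations without model assistance
assisted_seconds = 159.8   # interpretations with model assistance


def percent_time_reduction(baseline: float, assisted: float) -> float:
    """Relative reduction in mean time, as a percentage of the baseline.

    Assumption: this simple ratio is an illustration, not necessarily
    the method used in the study.
    """
    return (baseline - assisted) / baseline * 100


reduction = percent_time_reduction(baseline_seconds, assisted_seconds)
print(f"{reduction:.1f}% less documentation time per study")
```

Under that assumption, the 29.4-second difference works out to about 15.5% of the baseline mean.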

Second, peer review of the 800 studies showed no difference in clinical accuracy (chi-squared = 0.68; p = 0.41) or textual quality (chi-squared = 3.62; p = 0.06) between model-assisted interpretations and those made without the model.

Finally, the analysis found that the model flagged 78 studies in real time that contained a clinically significant, unexpected pneumothorax, with a sensitivity of 72.7% and a specificity of 99.9% among the 97,651 studies screened.
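For readers less familiar with these screening metrics, the sketch below shows how sensitivity and specificity are computed from confusion-matrix counts. The counts are toy values chosen only for illustration; the article does not report the study's full breakdown, so nothing here reproduces the authors' data, and the function names are hypothetical.

```python
def sensitivity(true_pos: int, false_neg: int) -> float:
    """Share of actual positive cases that the model flagged."""
    return true_pos / (true_pos + false_neg)


def specificity(true_neg: int, false_pos: int) -> float:
    """Share of actual negative cases that the model left unflagged."""
    return true_neg / (true_neg + false_pos)


# Toy counts for illustration only (NOT taken from the study).
toy_true_pos, toy_false_neg = 9, 1
toy_true_neg, toy_false_pos = 95, 5

toy_sensitivity = sensitivity(toy_true_pos, toy_false_neg)
toy_specificity = specificity(toy_true_neg, toy_false_pos)
print(f"sensitivity: {toy_sensitivity:.1%}, specificity: {toy_specificity:.1%}")
```

A very high specificity, as reported in the study, means that few studies without the finding are flagged, which helps keep false alarms low when nearly all screened studies are negative.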

“This study described, for the first time to our knowledge, prospective evaluation of a generative AI model for imaging interpretation in a live radiology clinical practice setting,” the group wrote.

The time saved amounts to a net savings of more than 63 documentation hours over the study period, or a reduction from roughly 79 to 67 radiologist shifts required to provide coverage, the authors noted.

Because the study was nonrandomized, further experimental evidence is needed to build on these preliminary findings and establish generalizable results regarding AI draft reporting, they added.

“The findings suggest the potential for radiologist and generative AI collaboration to improve clinical care delivery,” the researchers concluded.

The full study is available here.