CHICAGO - An artificial intelligence (AI)-assisted software tool can decrease PET/CT reporting time in lymphoma staging without adversely affecting report quality, according to research presented November 28 at RSNA 2022.

A group at Leeds Teaching Hospital in the U.K. evaluated the effect of an integrated prototype segmentation algorithm, implemented in diagnostic PET/CT reading software (XD, Mirada Medical), on the speed and quality of reporting by readers with various levels of experience. The AI tool decreased reporting time without adversely affecting report quality, they found.

"An integrated AI-assisted reporting workflow has the potential to increase PET/CT reporting efficiency, which could reduce costs and report turnaround times," Dr. Andrew Scarsbrook told attendees.

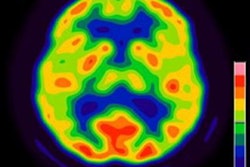

F-18 FDG-PET/CT is widely used for staging of high-grade lymphoma. Evaluation of scans can be time-consuming, depending on complexity and disease extent, taking up to an hour to analyze and report, Scarsbrook said.

In this study, Scarsbrook and colleagues hypothesized that an algorithm they developed over the past two years could help reduce reporting times when integrated with the automatic PET/CT image segmentation software.

Nine readers (three trainees, three junior consultant radiologists, and three senior consultant radiologists) from three imaging centers participated. Fifteen real-world clinical lymphoma staging PET/CT images were evaluated twice: initially using a standard PET/CT reporting workflow, and again after a six-week gap using the AI-assisted workflow.

The read duration for each case was calculated using screen recording software and file logs, while report quality was independently assessed by two radiologists with more than 15 years of experience using a five-point audit score, with five being perfect and one indicating major error.

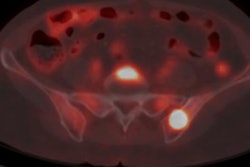

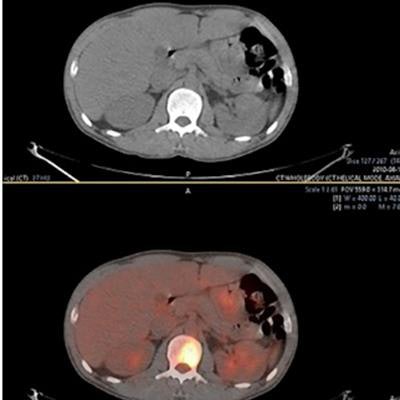

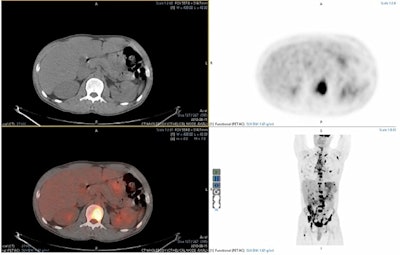

Image demonstrating the viewing panel from Mirada XD. Image courtesy of Dr. Andrew Scarsbrook.

Overall, there was a significant decrease in reading time between the non-AI and AI-assisted reads (a median of 15 minutes versus 13.3 minutes), according to the findings. A subanalysis by reporter experience showed that this held true for junior consultants (14.5 minutes versus 12.7 minutes, p = 0.03) and senior consultants (15.1 minutes versus 12.2 minutes, p = 0.03), although no significant improvement was seen in trainees.

Importantly, there was no significant difference in report quality, Scarsbrook noted. The mode score was five (perfect) for both AI-assisted and non-AI-assisted reads (p = 0.07). This also held true when splitting the data into trainee (p = 0.7), junior (p = 0.7), and senior reporters (p = 0.06), the researchers found.

Limitations of the study included the small number of patients and possible fatigue on the part of the readers, as they were required to complete a survey after each read, Scarsbrook noted. Nonetheless, the AI-assisted software tool looks promising and appears scalable, and the researchers plan to deploy the algorithm, he said.

"An AI-assisted tool has the potential to decrease reporting time without adversely affecting report quality," Scarsbrook concluded.