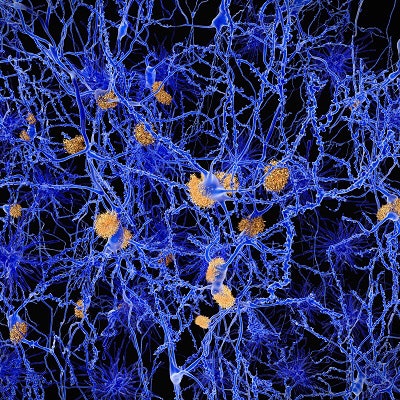

With the help of artificial intelligence (AI), researchers have developed a way to generate diagnostic-quality images from PET/MR scans to detect amyloid deposits with considerably less radiotracer dose. Their findings were published online December 11 in Radiology.

A deep-learning convolutional neural network (CNN) was trained to generate amyloid PET images that resemble those from a full dose of florbetaben (Neuraceq, Life Molecular Imaging), starting from PET/MR data at just 1% of the radiotracer dose. Florbetaben is designed to bind to amyloid plaque in the brain, which has been associated with Alzheimer's disease.

"Our results can potentially increase the utility of amyloid PET scanning at lower radiotracer dose ... and can inform future Alzheimer's disease diagnosis workflows and large-scale clinical trials for amyloid-targeting pharmaceuticals," wrote the group led by Kevin Chen, PhD, from Stanford University.

Previous studies have shown that CNNs can produce diagnostic-quality images from PET scans acquired with low doses of FDG in patients with glioblastoma. The authors hypothesized that the same approach would also work with other radiotracers relevant to Alzheimer's disease populations, such as florbetaben.

To test their theory, Chen and colleagues selected 40 separate datasets from 39 patients (mean age, 67 years), each of whom received an intravenous injection of 330 ± 30 MBq of florbetaben. PET and MRI data were acquired simultaneously on an integrated scanner with time-of-flight capabilities (Signa PET/MR, GE Healthcare). PET data were acquired 90 to 110 minutes after radiotracer injection. To simulate an ultralow-dose (1%) scan, one-hundredth of the events in the raw list-mode PET data was randomly selected.
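Randomly keeping a fraction of the recorded list-mode events is a common way to emulate a lower injected dose from a full-dose acquisition, since a smaller dose mainly means fewer detected coincidences. The sketch below assumes the events are held in a NumPy array with one row per event; the function name `subsample_list_mode` is hypothetical, and the article does not describe the study's exact sampling procedure or file format.

```python
from typing import Optional

import numpy as np


def subsample_list_mode(events: np.ndarray, fraction: float,
                        seed: Optional[int] = None) -> np.ndarray:
    """Return a random subset of list-mode events to mimic a reduced-dose scan.

    Hypothetical helper: `events` is assumed to be an array with one row per
    detected coincidence event; real scanner list-mode formats are not modeled.
    """
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    rng = np.random.default_rng(seed)
    n_keep = int(round(fraction * len(events)))
    # Draw event indices without replacement, then sort them so the
    # retained events stay in their original (time) order.
    idx = np.sort(rng.choice(len(events), size=n_keep, replace=False))
    return events[idx]


# Example: keep 1% of one million placeholder events.
full_dose_events = np.arange(1_000_000)
low_dose_events = subsample_list_mode(full_dose_events, 0.01, seed=0)
print(len(low_dose_events))
```

The retained events would then be reconstructed in the usual way to produce the simulated low-dose image.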

To synthesize full-dose-quality PET images, the researchers applied CNNs either to the low-dose PET data alone (PET-only model) or to the low-dose PET data together with multiple MR images (PET-plus-MR model). For further comparison, they also reconstructed the 1% dose data without any deep-learning processing. Two physicians certified to read amyloid PET rated the images on a five-point scale, from 1 ("uninterpretable") to 5 ("excellent").
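The two CNN variants are distinguished by their inputs. One simple way to give a CNN several co-registered images at once is to stack them as input channels, sketched below with NumPy. This is an illustrative assumption rather than the study's code: the function `build_model_input` is hypothetical, the choice of MR contrasts is left to the caller, and registration, intensity normalization, and the network itself are omitted.

```python
from typing import Optional, Sequence

import numpy as np


def build_model_input(low_dose_pet: np.ndarray,
                      mr_contrasts: Optional[Sequence[np.ndarray]] = None) -> np.ndarray:
    """Stack co-registered volumes as CNN input channels (hypothetical helper).

    PET-only model:     a single channel holding the low-dose PET volume.
    PET-plus-MR model:  low-dose PET plus one extra channel per MR contrast.
    """
    channels = [low_dose_pet]
    if mr_contrasts is not None:
        for mr in mr_contrasts:
            # Channel stacking only makes sense if every volume sits on the same voxel grid.
            if mr.shape != low_dose_pet.shape:
                raise ValueError("MR and PET volumes must share the same grid")
            channels.append(mr)
    # Resulting shape: (channels, depth, height, width)
    return np.stack(channels, axis=0)


pet = np.zeros((4, 8, 8))
mr_images = [np.ones((4, 8, 8)), np.ones((4, 8, 8))]
print(build_model_input(pet).shape)             # PET-only input
print(build_model_input(pet, mr_images).shape)  # PET-plus-MR input
```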

All of the PET-plus-MR CNN images were rated as adequate, with a score of at least 3. The PET-only model produced fewer images of good or better quality than the PET-plus-MR model. Interestingly, a comparable proportion of the PET-plus-MR images and the original full-dose PET images were rated at least 4. As expected, the 1% dose images reconstructed without a CNN were inadequate: 56 ratings (70%) placed them in the uninterpretable category.

Overall, the PET-plus-MR model outperformed the PET-only CNN in sensitivity, specificity, and accuracy, the researchers found.

Performance of ultralow-dose PET after CNN image processing

| Measure | PET-only model | PET-plus-MR model |
|---|---|---|
| Sensitivity | 87% | 96% |
| Specificity | 81% | 86% |
| Accuracy | 83% | 89% |
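Sensitivity, specificity, and accuracy follow standard definitions based on how many amyloid-positive and amyloid-negative scans are read correctly. The article does not say which reference standard the reads were compared against, and the counts below are hypothetical, chosen only to show the arithmetic. Because accuracy is a prevalence-weighted average of sensitivity and specificity, it always falls between the two, as it does for both models in the table.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    tp: int  # amyloid-positive scans read as positive
    fn: int  # amyloid-positive scans read as negative
    tn: int  # amyloid-negative scans read as negative
    fp: int  # amyloid-negative scans read as positive


def diagnostic_metrics(c: ConfusionCounts) -> dict:
    """Standard sensitivity, specificity, and accuracy from confusion counts."""
    sensitivity = c.tp / (c.tp + c.fn)  # fraction of true positives detected
    specificity = c.tn / (c.tn + c.fp)  # fraction of true negatives cleared
    accuracy = (c.tp + c.tn) / (c.tp + c.fn + c.tn + c.fp)  # all correct reads
    return {"sensitivity": sensitivity,
            "specificity": specificity,
            "accuracy": accuracy}


# Hypothetical counts, not data from the study.
print(diagnostic_metrics(ConfusionCounts(tp=9, fn=1, tn=8, fp=2)))
```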

"We have shown that high-quality amyloid PET images can be generated using deep-learning methods starting from simultaneously acquired MR images and ultralow-dose PET data," the researchers concluded. "The CNN can reduce image noise from low-dose reconstructions while the addition of multicontrast MR as inputs can recover the uptake patterns of the tracer."
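Part of why the unprocessed 1% reconstructions look so poor, and why denoising matters, is counting statistics: detected counts are approximately Poisson-distributed, so relative noise scales as $1/\sqrt{N}$, and keeping 1% of the counts raises it by roughly a factor of $\sqrt{100} = 10$. The sketch below checks this numerically. The per-voxel count levels are hypothetical, and real reconstructed images have spatially correlated noise that this simple model ignores.

```python
import numpy as np


def relative_noise(mean_counts: float, n_trials: int = 200_000, seed: int = 0) -> float:
    """Empirical coefficient of variation (std / mean) of Poisson counts."""
    rng = np.random.default_rng(seed)
    samples = rng.poisson(mean_counts, size=n_trials)
    return samples.std() / samples.mean()


full = relative_noise(10_000)  # hypothetical counts per voxel at full dose
low = relative_noise(100)      # 1% of those counts
# The ratio should come out close to 10, i.e. 1 / sqrt(0.01).
print(f"full dose: {full:.4f}  1% dose: {low:.4f}  ratio: {low / full:.1f}")
```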

Study disclosures

Piramal Imaging (now Life Molecular Imaging) provided support for the radiotracer and GE Healthcare provided research funding support. One study co-author is an employee of GE. The other co-authors controlled the inclusion of any data that might represent a conflict of interest.