Researchers from Memorial Sloan Kettering Cancer Center in New York City have developed a deep-learning network called DeepPET to generate high-quality PET images more than 100 times faster than currently possible, according to a study published in the May issue of Medical Image Analysis.

DeepPET is an image reconstruction method that uses a deep neural network to quickly turn PET data into diagnostic-quality images. In a head-to-head comparison, DeepPET reconstructed images more than 100 times faster than ordered subset expectation maximization (OSEM), the conventional reconstruction method for PET.
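The article does not describe OSEM itself, but a toy version shows why it is slow. OSEM is iterative: every sub-iteration forward-projects the current image estimate and back-projects a measured-to-estimated count ratio. The pure-Python sketch below rests on several assumptions: the tiny system matrix `A`, the noiseless data, the interleaved subset split, and the names `osem` and `forward_project` are all hypothetical. It illustrates the textbook update and is not the study's or any scanner vendor's implementation.

```python
def forward_project(A, x, rows):
    """Expected counts in the selected sinogram bins for image x."""
    return [sum(A[i][j] * x[j] for j in range(len(x))) for i in rows]


def osem(A, y, n_subsets=2, n_iters=10):
    """Toy OSEM: multiplicative EM updates applied one subset of bins at a time.

    A: hypothetical system matrix, A[i][j] = probability that an emission in
       pixel j is detected in sinogram bin i.
    y: measured counts per sinogram bin.
    """
    n_bins, n_pix = len(A), len(A[0])
    x = [1.0] * n_pix  # uniform, strictly positive starting image
    # Interleaved subsets: bins 0, n_subsets, 2*n_subsets, ... form subset 0, etc.
    subsets = [list(range(s, n_bins, n_subsets)) for s in range(n_subsets)]
    for _ in range(n_iters):
        for rows in subsets:
            proj = forward_project(A, x, rows)
            # Measured-to-estimated count ratio for each bin in this subset.
            ratio = [y[i] / p if p > 0 else 0.0 for i, p in zip(rows, proj)]
            # Back-project the ratios and normalize by subset sensitivity.
            new_x = list(x)
            for j in range(n_pix):
                sens = sum(A[i][j] for i in rows)
                if sens > 0:
                    back = sum(A[i][j] * r for i, r in zip(rows, ratio))
                    new_x[j] = x[j] * back / sens
            x = new_x
    return x


if __name__ == "__main__":
    A = [[1, 0], [0, 1], [1, 1], [1, 2]]  # 4 sinogram bins, 2 pixels
    y = [2, 3, 5, 8]                      # noiseless projections of x = [2, 3]
    print(osem(A, y, n_subsets=2, n_iters=200))  # approaches [2.0, 3.0]
```

Splitting the bins into subsets lets the image be updated several times per pass over the data, which is how OSEM speeds up plain MLEM. It remains a loop of repeated projections, whereas a trained network maps a sinogram to an image in a single forward pass.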

"The purpose of this research was to implement a deep learning network to overcome two of the major bottlenecks in improved image reconstruction for clinical PET," wrote the researchers led by Ida Häggström, PhD, from the center's department of medical physics (Med Image Anal, May 2019, pp. 253-262). "These are the lack of an automated means for the optimization of advanced image reconstruction algorithms and the computational expense associated with these state-of-the-art methods."

PET is a mainstay in nuclear medicine and radiology for assessing a variety of conditions, primarily cancer. While the modality is quite effective, reconstructing PET images can take a long time, and the resulting images are not always crystal clear. To train DeepPET, Häggström and colleagues simulated PET scans and generated realistic PET data with the open-source PET simulation software PET Simulator of Tracers via Emission Projection (PETSTEP).
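PETSTEP's physics models are far more detailed than anything shown here. The sketch below only illustrates the core idea behind simulated PET data: measured sinogram values are Poisson-distributed counts around a forward-projected activity image. The functions `simulate_sinogram` and `poisson`, the toy system matrix, and the count scale are hypothetical illustrations, not PETSTEP's interface. To stay dependency-free, the sampler uses Knuth's method; in practice `numpy.random.Generator.poisson` would be the usual choice.

```python
import math
import random


def poisson(lam, rng):
    """Draw one Poisson sample with Knuth's method (adequate for small means)."""
    limit = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def simulate_sinogram(A, image, counts_scale, seed=0):
    """Forward-project an image, scale to a count level, then add Poisson noise.

    A: hypothetical system matrix (rows = sinogram bins, columns = pixels).
    counts_scale: hypothetical factor setting the overall count level.
    Returns (noise-free expected counts, noisy integer counts) per bin.
    """
    rng = random.Random(seed)
    clean = [counts_scale * sum(a * v for a, v in zip(row, image)) for row in A]
    noisy = [poisson(m, rng) for m in clean]
    return clean, noisy


if __name__ == "__main__":
    clean, noisy = simulate_sinogram([[1, 0], [0, 1], [1, 1]], [2.0, 3.0], 10.0)
    print(clean, noisy)  # noisy counts scatter around the clean values
```

Lowering `counts_scale` mimics a lower-dose or shorter scan: the relative noise in each bin grows, which is one reason simulated datasets can cover a wide range of image qualities.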

The researchers tested DeepPET by randomly dividing their collection of more than 291,000 2D PET datasets into three parts: 203,305 datasets for training DeepPET, 43,449 for validating the deep-learning model, and 44,256 for testing it.
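The three subset sizes add up to 291,010, consistent with "more than 291,000." A random three-way split like the one described can be sketched as a shuffle of dataset indices followed by slicing. The article does not say how the split was implemented (for example, whether slices from the same patient were kept together), so the function name `split_indices` and the fixed seed are illustrative assumptions.

```python
import random


def split_indices(n_total, n_train, n_val, seed=0):
    """Randomly partition range(n_total) into train/validation/test index lists."""
    idx = list(range(n_total))
    random.Random(seed).shuffle(idx)  # seeded so the split is reproducible
    train = idx[:n_train]
    val = idx[n_train:n_train + n_val]
    test = idx[n_train + n_val:]      # whatever remains goes to testing
    return train, val, test


N_TRAIN, N_VAL, N_TEST = 203_305, 43_449, 44_256
train, val, test = split_indices(N_TRAIN + N_VAL + N_TEST, N_TRAIN, N_VAL)
print(len(train), len(val), len(test), len(train) + len(val) + len(test))
# 203305 43449 44256 291010
```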

Most importantly, the final qualitative test involved real clinical data from two separate PET/CT scanners (Discovery 710 and Discovery 690, GE Healthcare). The clinical 3D PET images were converted into 2D slice sinograms, which were reconstructed with filtered back projection (FBP) and OSEM and were also fed into DeepPET. Among the criteria used to evaluate image quality was the structural similarity index (SSIM), a metric designed to reflect perceived visual similarity.
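SSIM scores an image against a reference image by combining luminance (means), contrast (variances), and structure (covariance) terms; a value of 1 means the two are identical. The standard metric computes this statistic over local windows and averages the results (scikit-image's `skimage.metrics.structural_similarity` is one windowed implementation). The sketch below is a simplified single-window version computed over the whole image, using the commonly cited constants K1 = 0.01 and K2 = 0.03. It is meant only to show what the number measures, not to reproduce the study's evaluation.

```python
def ssim_global(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM between two equally sized images given as flat lists.

    Simplification: standard SSIM averages this statistic over local windows;
    here it is computed once over all pixels.
    data_range: span of possible pixel values (1.0 for images scaled to [0, 1]).
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("images must have the same size and at least 2 pixels")
    c1 = (k1 * data_range) ** 2  # stabilizes the luminance term
    c2 = (k2 * data_range) ** 2  # stabilizes the contrast/structure term
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / (n - 1)
    vy = sum((b - my) ** 2 for b in y) / (n - 1)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)
    return ((2 * mx * my + c1) * (2 * cov + c2)) / (
        (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    )


if __name__ == "__main__":
    ref = [0.1, 0.5, 0.9, 0.3]
    print(ssim_global(ref, ref))                   # identical images: 1.0 (up to rounding)
    print(ssim_global(ref, [0.9, 0.5, 0.1, 0.7]))  # inverted structure: negative
```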

So, how did DeepPET perform?

| Performance of DeepPET for PET image reconstruction | FBP | OSEM | DeepPET |
|---|---|---|---|
| Reconstruction time per image | 19.9 msec (± 0.2) | 697 msec (± 0.2) | 6.47 msec (± 0.01) |
| Image quality (SSIM) | 0.8729 | 0.9667 | 0.9796 |
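The "more than 100 times faster" claim follows directly from the table's mean reconstruction times. The short calculation below divides the baseline times by DeepPET's time. It uses only the table's mean values, ignores the reported spreads, and says nothing about the hardware each method ran on, which the article does not specify.

```python
# Mean reconstruction times per image, in milliseconds, from the table.
times_ms = {"FBP": 19.9, "OSEM": 697.0, "DeepPET": 6.47}


def speedup(baseline, method="DeepPET"):
    """How many times faster `method` is than `baseline` (ratio of mean times)."""
    return times_ms[baseline] / times_ms[method]


for name in ("OSEM", "FBP"):
    print(f"DeepPET vs {name}: {speedup(name):.1f}x faster")
# DeepPET vs OSEM: 107.7x faster
# DeepPET vs FBP: 3.1x faster
```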

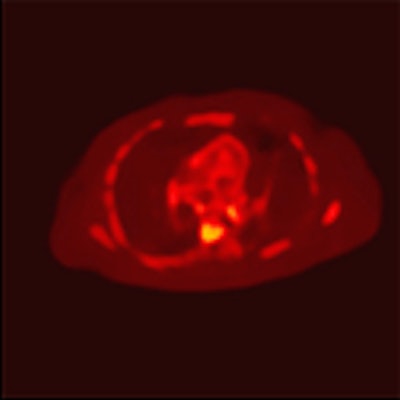

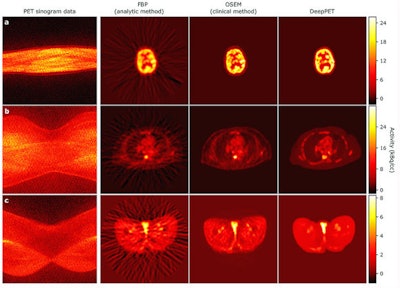

The series of PET images includes cross sections of a brain (top), abdomen (middle), and hip (bottom). Each row shows the network input before reconstruction (sinogram), followed by reconstructions from the older FBP method, the current standard OSEM method, and DeepPET, which produces smoother images with sharper detail than the other methods. Images courtesy of Häggström et al.

"By combining [radiologist] expertise with the state-of-the-art computational resources that are available here, we have a great opportunity to have a direct clinical impact," Häggström added in a statement. "The gain we've seen in reconstruction speed and image quality should lead to more efficient image evaluation, and more reliable diagnoses and treatment decisions, ultimately leading to improved care for our patients."