Augmented reality (AR) can provide an alternative to traditional MR console software for controlling the scanner and viewing images, according to research presented at this week's virtual conference and exhibition of the International Society for Magnetic Resonance in Medicine (ISMRM).

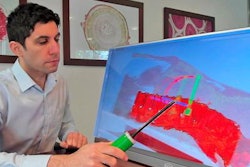

Designed to replace the conventional MR scanner console, the gesture-based AR interface built by a team of researchers from Case Western Reserve University lets operators control the scanner wirelessly and visualize images in 3D while keeping patients in direct sight. After testing the platform with a Microsoft HoloLens headset and a 3-tesla clinical scanner, the researchers found it to be reliable and easy for technologists and physicians to use.

"While the interface is significantly pared down compared to the scanner console, this encourages interaction with the image data rather than [looking] at the [console] controls," said first author and presenter Andrew Dupuis in a digital poster presentation. "The streamlined image acquisition requires minimal understanding of the scanner's user interface, allowing physicians to take a more active role during interventional imaging."

Dimensional disconnect

Although MRI acquisitions are inherently three-dimensional, users traditionally control scanners and review images in two dimensions, Dupuis said.

"This dimensional disconnect continues after reconstruction," he said. "Slices are displayed out of context, both from each other and from the patient."

In addition, scanner control software has always been designed for highly granular control, emphasizing flexibility over intuitiveness, according to Dupuis. This creates room for human error, as well as barriers to use for nontechnicians, especially physicians without MRI expertise, he said.

An intuitive replacement

In their project, the Case Western researchers sought to replace the conventional MRI scanner interface with an AR alternative that would let users control the scanner with gestures and explore the acquired MRI data in 3D.

"By leveraging recently developed protocols such as magnetic resonance fingerprinting that require less manual configuration for each patient, we aimed to develop a three-dimensional AR interface that intuitively replaces and enhances the capabilities of a scan console," he said.

They built the interface and visualization system in the Unity game engine, using Unity coroutines to handle communication and input. As a result, the platform can run on most AR and virtual reality headsets, desktops, laptops, Web Graphics Library (WebGL)-enabled browsers, and mobile phones, according to Dupuis.

Next, the researchers set up bidirectional communication between the platform and their institution's Skyra 3-tesla clinical MR scanner (Siemens Healthineers) using the company's Access-I software development kit, which enables remote control of certain scanner operations through RESTful hypertext transfer protocol (HTTP) requests.
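The source does not describe Access-I's actual endpoints or payloads, so the following Python sketch only illustrates the general shape of a RESTful control request. The base path `/api/v1`, the command name `start`, the JSON body, the host name, and the bearer-token header are hypothetical placeholders, not the Access-I API. The request is built with the standard library's `urllib.request` but is not sent.

```python
import json
import urllib.request
from typing import Optional

# HYPOTHETICAL path prefix; the real Access-I routes are not given in the source.
HYPOTHETICAL_API_ROOT = "/api/v1"


def build_scan_command(
    base_url: str, command: str, params: dict, token: Optional[str] = None
) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for a hypothetical scanner command."""
    url = f"{base_url.rstrip('/')}{HYPOTHETICAL_API_ROOT}/{command}"
    headers = {"Content-Type": "application/json"}
    if token is not None:
        # Assumption: the registration step returns a token the client echoes back.
        headers["Authorization"] = f"Bearer {token}"
    body = json.dumps(params).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


req = build_scan_command("http://scanner.example:8080", "start", {"protocol": "demo"}, token="abc")
print(req.get_method(), req.full_url)  # prints: POST http://scanner.example:8080/api/v1/start
```

Passing `req` to `urllib.request.urlopen` would actually send it. A Unity client would typically issue the equivalent request with `UnityWebRequest` from inside a coroutine, so the render loop is not blocked while waiting for the scanner.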

They then tested their approach using a HoloLens AR headset (Microsoft). A gesture system was implemented to capture the user's hand motions for interaction with the scanner.

Gesture-based input

After a user dons an AR headset such as the HoloLens, a local origin is established in the user's world space, either with an optical marker recognition system such as Vuforia or with another type of spatial anchor, he said.

"This origin is then mapped to the scanner's coordinate system and a virtual scan console and visualization display region are instantiated around the user," he said. "This simplified console allows for gesture and gaze-based input in situ with incoming scan data."
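Mapping the headset's local origin to the scanner's coordinate system can be expressed as a composition of two rigid transforms: the marker's pose in the headset's world space (reported by the tracker) and the marker's pose in scanner coordinates (assumed here to be measured once, for example by mounting the marker at a known position). The Python sketch below is illustrative only. The class and function names, the yaw-only example pose, and the assumption that both frames use the same units are not details from the study.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]
Mat3 = tuple[Vec3, Vec3, Vec3]


@dataclass(frozen=True)
class RigidTransform:
    """Rotation followed by translation: p' = R p + t."""
    rotation: Mat3      # row-major 3x3 rotation matrix R
    translation: Vec3   # translation vector t

    def apply(self, p: Vec3) -> Vec3:
        return tuple(
            sum(self.rotation[r][c] * p[c] for c in range(3)) + self.translation[r]
            for r in range(3)
        )

    def inverse(self) -> "RigidTransform":
        # For a pure rotation R^-1 = R^T, so the inverse maps p' to R^T (p' - t).
        rt = tuple(tuple(self.rotation[c][r] for c in range(3)) for r in range(3))
        t = tuple(-sum(rt[r][c] * self.translation[c] for c in range(3)) for r in range(3))
        return RigidTransform(rt, t)


def compose(outer: RigidTransform, inner: RigidTransform) -> RigidTransform:
    """Return the transform that applies `inner` first, then `outer`."""
    rot = tuple(
        tuple(sum(outer.rotation[r][k] * inner.rotation[k][c] for k in range(3)) for c in range(3))
        for r in range(3)
    )
    return RigidTransform(rot, outer.apply(inner.translation))


def yaw(angle_rad: float, translation: Vec3) -> RigidTransform:
    """Rotation about the vertical (y) axis plus a translation (hypothetical marker pose)."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return RigidTransform(((c, 0.0, s), (0.0, 1.0, 0.0), (-s, 0.0, c)), translation)


def register_world_to_scanner(world_from_marker, scanner_from_marker) -> RigidTransform:
    """Headset world -> marker -> scanner."""
    return compose(scanner_from_marker, world_from_marker.inverse())


world_from_marker = yaw(math.pi / 2, (1.0, 0.0, 2.0))  # pose reported by the tracker
scanner_from_marker = RigidTransform(
    ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)), (0.0, -0.5, 1.2)
)  # assumed to be surveyed once
scanner_from_world = register_world_to_scanner(world_from_marker, scanner_from_marker)
print(scanner_from_world.apply((1.0, 0.0, 2.0)))
```

Applying the result to the marker's own world position returns, up to floating-point rounding, the marker's surveyed scanner position (0.0, -0.5, 1.2). The inverse of the same transform would carry scanner-space data back into the headset's world space for display.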

Bidirectional communication with the scanner is then set up over HTTP through a registration handshake that grants the client permission to control the MR system directly. Once the client is authenticated, WebSockets are used to transfer data directly between the scanner and the client in real time, Dupuis said. Users can then use gestures to control scan parameters and to review acquired images in 3D.
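The handshake-then-stream sequence can be modeled as a small client-side state machine. In this hypothetical Python sketch no network I/O is performed: `complete_handshake` stands in for handling the HTTP registration response, `open_stream` for opening the WebSocket, and the permission names are invented for illustration. None of these names come from Access-I.

```python
from enum import Enum, auto


class SessionState(Enum):
    DISCONNECTED = auto()
    REGISTERED = auto()  # HTTP handshake done; control permissions granted
    STREAMING = auto()   # WebSocket open; images and status flow in real time


class ScannerSession:
    """Hypothetical client-side session model (not the Access-I API)."""

    def __init__(self) -> None:
        self.state = SessionState.DISCONNECTED
        self.permissions: frozenset = frozenset()
        self.frames: list = []

    def complete_handshake(self, granted: list) -> None:
        """Record the permissions returned by the (simulated) HTTP registration."""
        if self.state is not SessionState.DISCONNECTED:
            raise RuntimeError("already registered")
        if not granted:
            raise PermissionError("scanner refused registration")
        self.permissions = frozenset(granted)
        self.state = SessionState.REGISTERED

    def open_stream(self) -> None:
        """Simulate opening the WebSocket; only allowed after registration."""
        if self.state is not SessionState.REGISTERED:
            raise RuntimeError("register before opening the stream")
        self.state = SessionState.STREAMING

    def send_command(self, permission: str) -> bool:
        """Check that a control command is permitted; a real client would send it here."""
        if self.state is SessionState.DISCONNECTED:
            raise RuntimeError("not registered")
        if permission not in self.permissions:
            raise PermissionError(f"no permission for {permission!r}")
        return True

    def on_frame(self, frame: bytes) -> None:
        """Accept an incoming image frame only while the stream is open."""
        if self.state is not SessionState.STREAMING:
            raise RuntimeError("frame received before stream was opened")
        self.frames.append(frame)


session = ScannerSession()
session.complete_handshake(["acquire", "set_slice_position"])
session.open_stream()
session.send_command("acquire")
session.on_frame(b"\x00" * 16)
```

Client-side checks like these only make the interface fail early; the scanner side would still have to enforce the permissions it granted during registration.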

Reliable real-time communication

In testing, the researchers found that bidirectional communication between the scanner and the HoloLens was reliable over a wireless local area network (LAN) connection. The average latency between image acquisition and visualization inside the HoloLens was 0.27 seconds for a 256 × 256 matrix acquisition, including the time needed for online reconstruction, according to Dupuis.

The system streamlines the acquisition and visualization process, while retaining the 3D nature of an MRI scan, Dupuis said.

"This allows for clinicians and technologists to work in native 3D throughout the acquisition and reading," he said. "This type of control of scan targets and acquisition parameters is possible and reconstructed data can be immediately co-registered and rendered without input from the user."
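Rendering reconstructed data in 3D without user input requires knowing where each pixel sits in scanner coordinates. One common approach, similar in spirit to DICOM's Image Position and Image Orientation attributes, offsets a slice origin by the pixel indices along two in-plane unit vectors. The Python sketch below assumes the scanner reports the slice origin (the centre of pixel (0, 0)), the two direction vectors, and the pixel spacing; the source does not say whether the Case Western system works this way, and the function names are hypothetical.

```python
Vec3 = tuple[float, float, float]


def pixel_to_scanner(
    row: int, col: int, origin: Vec3, x_axis: Vec3, y_axis: Vec3, dx: float, dy: float
) -> Vec3:
    """Map pixel (row, col) of a 2D slice to 3D scanner coordinates.

    origin: scanner position of the centre of pixel (0, 0)
    x_axis: unit vector along which the column index increases
    y_axis: unit vector along which the row index increases
    dx, dy: pixel spacing along x_axis and y_axis (e.g. in mm)
    """
    return tuple(origin[k] + col * dx * x_axis[k] + row * dy * y_axis[k] for k in range(3))


def slice_corners(n_rows, n_cols, origin, x_axis, y_axis, dx, dy):
    """Centres of the four corner pixels, enough to position a textured quad."""
    corners = [(0, 0), (0, n_cols - 1), (n_rows - 1, n_cols - 1), (n_rows - 1, 0)]
    return [pixel_to_scanner(r, c, origin, x_axis, y_axis, dx, dy) for r, c in corners]


# A hypothetical 256 x 256 axial slice with 1 mm pixels, centred on the z axis at z = 10 mm.
corners = slice_corners(
    256, 256, (-127.5, -127.5, 10.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), 1.0, 1.0
)
print(corners)
```

The resulting scanner-space corners would still need to be mapped into the headset's world space using the origin-to-scanner mapping described earlier before the slice can be drawn in place.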

Better user engagement

Furthermore, the bidirectional communication enables users to access most scanner functions without needing to disengage from the data or lose sight of the patient, he said.

Dupuis noted, however, that AR is not ideal for making detailed scan modifications. These adjustments should be configured before switching to AR for image acquisition.

In future work, the researchers plan to add voice control for data input to further reduce gestural complexity.

"We also aim to increase support for interactive feedback, as well as scanner control during acquisitions," Dupuis said.