Radiation oncologists may favor radiation therapy plans produced by a machine-learning algorithm in simulations, but trusting those plans for clinical use may be a different matter, according to research published online June 3 in Nature Medicine.

Researchers from Princess Margaret Cancer Centre in Toronto found that radiation oncologists retrospectively preferred radiation therapy plans generated by a machine-learning algorithm over plans produced by radiation therapy planning specialists in over 80% of prostate cancer treatments.

However, that percentage dropped significantly when the model was prospectively deployed in clinical practice.

"Once you put [machine learning]-generated treatments in the hands of people who are relying upon it to make real clinical decisions about their patients, that preference towards [machine learning] may drop," said senior author Thomas Purdie, PhD, in a statement from Princess Margaret Cancer Centre. "There can be a disconnect between what's happening in a lab-type of setting and a clinical one."

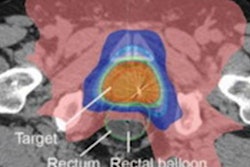

Although artificial intelligence (AI) algorithms have produced strong results in a wide range of clinical applications, most analyses have taken place in a simulated or lab environment. To assess AI's performance in real-world clinical practice, the researchers performed a blinded, head-to-head study that compared plans developed with a random-forest AI algorithm with plans created by radiation therapy planning specialists. Radiation oncologists graded the acceptability of each plan and selected the one they would choose for treatment.

In the feasibility phase of the study, the researchers performed a retrospective simulation of machine learning-based planning for 50 cases. The second phase of the study compared AI plans and human plans in a prospective deployment as part of the clinical workflow (the clinical deployment phase).

While the radiation oncologists selected 83% of the AI-generated plans for treatment in the feasibility phase, that figure dropped by more than 20 percentage points, to 61%, in the clinical deployment phase.

Machine-learning algorithm's performance for producing radiation therapy plans

| | Feasibility phase | Clinical deployment phase | Overall |
|---|---|---|---|
| AI plans selected for treatment | 83% | 61% | 72% |
| AI plans rated as clinically acceptable | 92% | 86% | 89% |

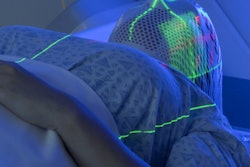

The machine learning-based planning process took a median of 47 hours, about 60% less time than the 118 hours needed for the conventional planning process.
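The 60% figure can be checked directly from the two reported planning times. The short Python sketch below does that arithmetic; the function name `percent_reduction` is a hypothetical helper introduced here for illustration and is not part of the study.

```python
def percent_reduction(baseline: float, new: float) -> float:
    """Return the relative reduction from baseline to new, as a percentage."""
    return (baseline - new) / baseline * 100.0


# Planning times as reported in the article, in hours.
conventional_hours = 118  # conventional planning process
ml_hours = 47             # machine learning-based planning process (median)

reduction = percent_reduction(conventional_hours, ml_hours)
print(f"Time saved: {conventional_hours - ml_hours} hours ({reduction:.1f}% reduction)")
# prints "Time saved: 71 hours (60.2% reduction)"
```

Note that the result is a reduction in elapsed planning time (71 of 118 hours), which is the sense in which the process is roughly 60% faster.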

Although there's been a lot of excitement about AI algorithm performance in the lab, the study results show that researchers need to pay attention to the clinical setting, according to Purdie.

"Taken together, our analyses suggest that change in the perception and preference of the treating physician towards [machine learning] was the primary cause of the observed decline in [machine-learning radiation therapy] plan selection during the prospective deployment phase," the authors wrote. "We conclude that clinical judgment and treatment decision-making in a real-world prospective setting where patient care is directly impacted may not be effectively captured in studies conducted in retrospective or simulated settings."

As a result of the study, machine learning-generated treatments are now used in treating the majority of prostate cancer patients at Princess Margaret Cancer Centre. That implementation was made possible by careful planning, stepwise integration into the clinical environment, and involvement of many stakeholders, according to co-author Leigh Conroy, PhD.

The researchers are now exploring the use of the algorithm for other cancers, including lung and breast cancers. The goal is to reduce cardiotoxicity from treatments, they said.