Artificial intelligence (AI) in medicine is a hot topic, as demonstrated by the number of conferences, articles, discussions, new ventures, and investments in this space. Discussions on the topic naturally spark debate about the future of several medical professions.

In the realm of radiology, some are quick to state that radiologists will be replaced by computers. Others argue that radiologists would greatly benefit from embracing and adopting AI because the technology will only make them more efficient and better at their jobs, rather than replacing them.

Parallel lanes

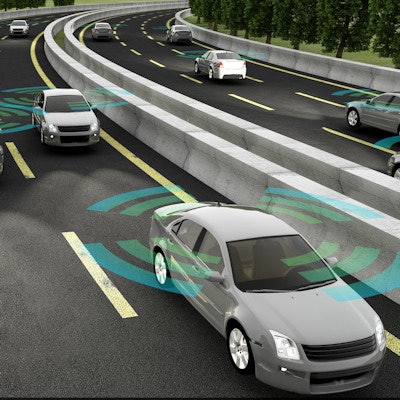

To assess how AI will affect radiology, it can be useful to observe parallel developments of technologies in other industries, especially those that are further along on the technology-adoption curve. Drawing these parallels and creating analogies can prove insightful, or at the very least add another perspective.

Eran Rubens from Philips Healthcare.

One interesting parallel is that of self-driving cars. Self-driving technology is required to meet very high standards of accuracy, because the outcome of a mistake can be severe and lead to property damage, injury, or even death. Medicine is similar, in that a wrong decision could have equally severe consequences for the patient.

No one seriously doubts that self-driving cars will eventually become a reality -- the only question is how long it will take to get there. The technology still has a ways to go, but I find there are interesting things we can learn from the automotive industry about the adoption of AI in medicine.

One of the more interesting talks I've encountered on the subject was given at the Consumer Electronics Show (CES) earlier this year. Gill Pratt, PhD, CEO of Toyota Research Institute, gave a great presentation covering psychological questions around society's acceptance of the technology.

Pratt started by asking whether or not we would accept self-driving cars that would be as safe as a human driver. He then asked if we would accept a machine that was twice as safe, leading to the ultimate question of "How safe is safe enough?" As a society, we have little tolerance for machines to be inaccurate or flawed, while we are all aware and accepting of the fact that humans sometimes make mistakes.

The same question immediately translates to the domain of medicine. How accurate is accurate enough? How would our society accept medical decisions made by computers and AI if they sometimes got it wrong?

Intervention by standards authorities

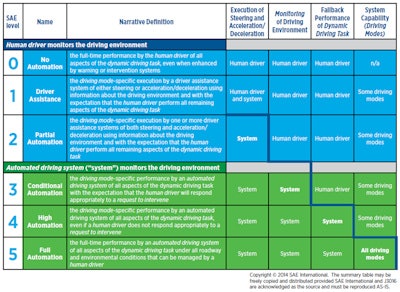

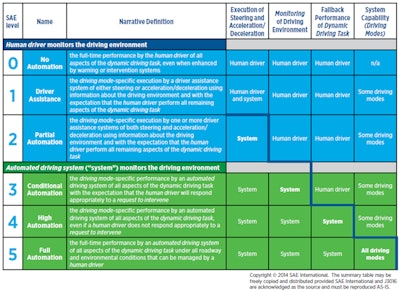

SAE International introduced the J3016 standard, which sets forth a taxonomy and a set of expectations for self-driving technology. It defines SAE levels ranging from no automation through simple driver-assist capabilities such as enhanced cruise control or parking aids, all the way to fully autonomous driving.

This standard clearly defines levels of autonomous driving that require driver supervision. Before reaching full autonomy, levels 3 and 4 constrain the environment (driving modes) in which autonomous driving is allowed to operate. Level 3 includes the need to alert the driver and hand off control of the vehicle in a timely manner when needed, which presents a major challenge in its own right.

SAE International levels of autonomous driving.

Interesting parallels can be drawn here, and we can potentially devise a similar model for AI in medicine. For example, "driving modes" could be restrictions of the application of AI to specific types of exams, patient conditions, or patient interactions and interventions.

The type of AI intervention itself can also be a factor in the model. Driver supervision can be mapped to the type of review and approval required from the user, and the AI's ability to explain its reasoning to the user can be another key factor of supervision in medicine.

Another key aspect would be the impact of the automation on clinical outcomes. For example, using AI for risk stratification is certainly different from having AI prescribe a treatment course. Developing such a model for medicine could define standardized levels of automation, potentially opening different regulatory paths as well.
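To make the idea a little more tangible, here is a minimal sketch of how such a model might be written down in software. Everything in it is hypothetical: the names `Supervision`, `ClinicalImpact`, `AutomationPolicy`, and `may_run`, the categories, and the example exam types are illustrative assumptions, not part of J3016 or of any existing medical standard.

```python
from dataclasses import dataclass
from enum import IntEnum


class Supervision(IntEnum):
    """Hypothetical review requirement, loosely analogous to driver supervision."""
    CLINICIAN_DECIDES = 0   # AI output is advisory only
    CLINICIAN_APPROVES = 1  # AI proposes, clinician must sign off
    HANDOFF_ON_ALERT = 2    # AI acts, but must hand control back when unsure
    UNSUPERVISED = 3        # AI acts without review


class ClinicalImpact(IntEnum):
    """Hypothetical ranking of how directly an AI output affects care."""
    RISK_STRATIFICATION = 1
    DIAGNOSIS = 2
    TREATMENT = 3


@dataclass(frozen=True)
class AutomationPolicy:
    """One hypothetical 'level' of automation for AI in medicine."""
    allowed_exams: frozenset    # the analogue of constrained "driving modes"
    supervision: Supervision    # who reviews the AI output, and how
    max_impact: ClinicalImpact  # most consequential task the AI may perform
    must_explain: bool          # whether the AI must show its reasoning


def may_run(policy: AutomationPolicy, exam_type: str, impact: ClinicalImpact) -> bool:
    """Return True if the AI may operate on this exam type at this impact level."""
    return exam_type in policy.allowed_exams and impact <= policy.max_impact


# Example: an assistive policy restricted to one (made-up) exam type.
assistive = AutomationPolicy(
    allowed_exams=frozenset({"chest_xray"}),
    supervision=Supervision.CLINICIAN_APPROVES,
    max_impact=ClinicalImpact.RISK_STRATIFICATION,
    must_explain=True,
)
```

Under this sketch, `may_run(assistive, "chest_xray", ClinicalImpact.TREATMENT)` is rejected because the task exceeds the policy's permitted impact, just as a constrained driving mode would keep an autonomous car off roads it is not cleared for.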

Can we trust the drivers?

Another interesting point raised in Pratt's talk is whether we can trust the human driver to stay alert and focused. The issue here is psychological and has to do with our cognitive biases. A few positive experiences with autonomous driving may well create a false sense that the technology can be trusted despite its inherent limitations.

This kind of excess trust can lead to catastrophic outcomes. The recent report of facts collected by the U.S. National Transportation Safety Board (NTSB) on the fatal accident involving a Tesla Model S reveals that the driver ignored warnings from the autopilot system prompting him to keep his hands on the steering wheel.

"For the vast majority of the trip, the Autopilot hands-on state remained at 'hands required, not detected,' " the report states. A final conclusion by the NTSB on the accident is still pending.

In medicine, we know that having too many false positives could lead to alert fatigue, which potentially leads to ignoring true positives. Could the opposite experience also introduce the bias that leads to having too much trust in the technology?
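The false-positive side of that question can be illustrated with simple arithmetic. The sketch below uses made-up numbers (1% prevalence, 90% sensitivity, 90% specificity) that are assumptions for illustration only and are not taken from any study or product; the function name `positive_predictive_value` is likewise just a label for the standard Bayes' rule calculation.

```python
def positive_predictive_value(prevalence: float, sensitivity: float, specificity: float) -> float:
    """Fraction of alerts that correspond to a real finding (Bayes' rule)."""
    true_pos = prevalence * sensitivity                    # sick and flagged
    false_pos = (1.0 - prevalence) * (1.0 - specificity)   # healthy but flagged
    return true_pos / (true_pos + false_pos)


# Hypothetical numbers, chosen only to illustrate the effect.
ppv = positive_predictive_value(prevalence=0.01, sensitivity=0.90, specificity=0.90)
print(f"Share of alerts that are real findings: {ppv:.1%}")
```

With these assumed inputs, only about 1 alert in 12 points to a real finding, which shows how quickly a seemingly accurate tool could train its users to dismiss alerts.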

AI itself is not enough

AI technology is surely transformative, and it will no doubt change many industries, including medicine. While much focus is being placed on the development of the AI components themselves, I believe it is just as important to focus on the integration of AI into the clinical workflow.

Much thought is required to find the correct approach from a human interaction perspective to enable the combination of human and AI to be better than either of them could be separately. One of the key elements is the interaction model between the end user and AI inputs and outputs. Another is the form of visualization and interaction with results coming from AI. An ongoing feedback loop is yet another aspect to consider.

Without attention to these workflow-integration challenges, AI may run into barriers and introduce cognitive biases that limit its adoption and success. Conversely, an approach to workflow integration that addresses those challenges could facilitate and accelerate the adoption of AI in medicine.

Eran Rubens is chief technology officer for enterprise imaging at Philips Healthcare. A software professional with 20 years of experience, Eran spent the last 14 years at Philips driving software innovations in medical imaging and radiology informatics.

The comments and observations expressed herein do not necessarily reflect the opinions of AuntMinnie.com, nor should they be construed as an endorsement or admonishment of any particular vendor, analyst, industry consultant, or consulting group.