ChatGPT-4 received a score of 58% on an exam by the American College of Radiology (ACR) used to assess the skills of diagnostic and interventional radiology residents, according to a study published April 22 in Academic Radiology.

A team at Stony Brook University in Stony Brook, NY, prompted ChatGPT-4 to answer 106 questions on the ACR’s Diagnostic Radiology In-Training (DXIT) exam, with its performance underscoring both the chatbot’s potential and risks as a diagnostic tool, noted lead author David Payne, MD, and colleagues.

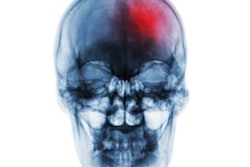

“While minimally prompted GPT-4 was seen to make many impressive observations and diagnoses, it was also shown to miss a variety of fatal pathologies such as ruptured aortic aneurysm while portraying a high level of confidence,” the group wrote.

Radiology is at the forefront of the medical field in the development, implementation, and validation of AI tools, the authors wrote. For instance, studies have demonstrated that ChatGPT shows impressive results on questions simulating U.K. and American radiology board examinations. Yet most of these previous studies using ChatGPT have been based solely on unimodal, or text-only, prompts, the authors noted.

Thus, in this study, the researchers put the large language model (LLM) to work answering image-rich diagnostic radiology questions culled from the DXIT exam. The DXIT exam is a yearly standardized test prepared by the ACR that covers a wide breadth of topics and has been shown to be predictive of performance on the American Board of Radiology Core Examination, the authors noted.

Questions were sequentially input into ChatGPT-4 with a standardized prompt. Each answer was recorded, and the model’s overall accuracy was calculated, as was its accuracy on image-based questions. The model was benchmarked against national averages of diagnostic radiology residents at various postgraduate year (PGY) levels.

According to the results, ChatGPT-4 achieved 58.5% overall accuracy, lower than the PGY-3 average (61.9%) but higher than the PGY-2 average (52.8%). ChatGPT-4 reported significantly higher (p = 0.012) confidence for correct answers (87.1%) than for incorrect answers (84%).

The model’s performance on image-based questions was significantly poorer (p < 0.001) at 45.4% compared with text-only questions (80%), with an adjusted accuracy for image-based questions of 36.4%, the researchers reported.
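For readers who want to see how a gap like the one between image-based and text-only accuracy can be tested, the following is a minimal sketch in Python. The article does not give the number of questions in each group, and it does not say which statistical test the authors used. The counts below (66 image-based and 40 text-only questions) are assumptions back-calculated from the reported percentages and the 106-question total, and Fisher's exact test is chosen here only for illustration.

```python
from math import comb

# ASSUMED counts, back-calculated from the reported percentages and the
# 106-question total; the article does not state them.
IMAGE_CORRECT, IMAGE_TOTAL = 30, 66   # about 45% accuracy
TEXT_CORRECT, TEXT_TOTAL = 32, 40     # 80% accuracy


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    total_tables = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability of x in the top-left cell.
        return comb(row1, x) * comb(row2, col1 - x) / total_tables

    p_observed = prob(a)
    lowest, highest = max(0, col1 - row2), min(row1, col1)
    # Sum the probabilities of all tables no more likely than the observed one.
    return sum(
        p for p in (prob(x) for x in range(lowest, highest + 1))
        if p <= p_observed * (1 + 1e-9)
    )


overall_accuracy = (IMAGE_CORRECT + TEXT_CORRECT) / (IMAGE_TOTAL + TEXT_TOTAL)
p_value = fisher_exact_two_sided(
    IMAGE_CORRECT, IMAGE_TOTAL - IMAGE_CORRECT,
    TEXT_CORRECT, TEXT_TOTAL - TEXT_CORRECT,
)
print(f"overall accuracy: {overall_accuracy:.1%}")
print(f"Fisher exact p-value: {p_value:.4f}")
```

Under these assumed counts, the overall accuracy comes out close to the reported 58.5%. The p-value from this sketch need not match the study's, since the actual counts and test may differ.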

In addition, fine-tuning ChatGPT-4 -- prefeeding it the answers and explanations for each of the DXIT questions -- did not improve the model’s accuracy on a second run. When the questions were repeated, GPT-4 chose a different answer 25.5% of the time, the authors noted.

“It is clear that there are limitations in GPT-4’s image interpretation abilities as well as the reliability of its answers,” the group wrote.

Ultimately, many other applications of ChatGPT and similar models, including report and impression generation, administrative tasks, and patient communication, could have an enormous impact on the field of radiology, the group noted.

“This study underscores the potentials and risks of using minimally prompted large multimodal models in interpreting radiologic images and answering a variety of radiology questions,” the researchers concluded.
