Peer review of prior readings is a time-consuming and politically charged process that radiologists might prefer to ignore. But they can't -- the Joint Commission on Accreditation of Healthcare Organizations (JCAHO) requires random review as a condition of accreditation, adding countless hours to U.S. radiologists' already heavy workloads.

Yet the University of Texas MD Anderson Cancer Center in Houston has hit on a novel solution by incorporating peer review into an automated clinical review workstation. A single mouse click now completes the process while new reports are being dictated, enabling the department to easily meet JCAHO requirements in a fraction of the time it took on paper.

"Peer review is something radiologists love to hate," said Dr. Kevin McEnery of MD Anderson, speaking earlier this month at the Symposium for Computer Applications in Radiology in Philadelphia. "If you ask radiologists in the audience whether they like to be peer-reviewed, I'd suspect there's not a single hand that goes up."

Peer review is nonetheless considered essential for clinical quality control. That is why the JCAHO, which certifies more than 19,500 healthcare organizations in the U.S., requires random peer review of at least 5% of cases as a condition of accreditation. In a nutshell, random peer review requires a radiologist who is dictating a new report to either agree or disagree with the report from a prior imaging study of the same patient.

In an institution as large as MD Anderson, however, with 64 staff radiologists and an estimated 260,000 reports this year, that 5% works out to about 13,000 peer review cases a year.

The information required for confirming peer review -- including patient ID, accession number, and dictating radiologist -- can be collected in a variety of ways. The American College of Radiology, for example, provides a sample form that can be filled out fairly quickly. And database applications such as ACT! or Microsoft Access can increase the speed and functionality of data collection, McEnery said, but not nearly enough.

"If we assumed that each of those [entries] took one minute, that would be 218 hours of radiology productivity that would be devoted to peer review" this year, he said. "In our department, quite frankly, we don't have 218 hours of productivity to spare."
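The arithmetic behind these figures can be checked with a short sketch. It simply reuses the numbers quoted above (an estimated 260,000 reports, the 5% minimum review rate, and McEnery's one-minute-per-entry assumption); the function name is illustrative only. At exactly 260,000 reports it gives 13,000 cases and roughly 217 hours, in line with the quoted 218 hours, which would correspond to slightly more than 13,000 cases.

```python
# Back-of-the-envelope check of the manual peer-review workload, using only
# the figures quoted in the article. The function name is hypothetical.
ANNUAL_REPORTS = 260_000   # estimated reports this year
REVIEW_RATE = 0.05         # JCAHO minimum random-review rate
MINUTES_PER_ENTRY = 1.0    # McEnery's assumed time per paper/database entry


def peer_review_workload(reports: int, rate: float, minutes_per_entry: float) -> tuple[int, float]:
    """Return (number of review cases, hours spent recording them by hand)."""
    cases = round(reports * rate)
    hours = cases * minutes_per_entry / 60
    return cases, hours


cases, hours = peer_review_workload(ANNUAL_REPORTS, REVIEW_RATE, MINUTES_PER_ENTRY)
print(f"{cases:,} cases, about {hours:.0f} hours")  # prints "13,000 cases, about 217 hours"
```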

So the center built the process into radStation, a hybrid clinical review workstation it developed in-house. The system connects a Dictaphone Boomerang microphone to a PC running the radStation application, all tied to the hospital's radiology information system (RIS) through Windows NT-based servers.

The radStation product displays a wealth of patient data, including pathology reports and patient schedules, although images have not yet been integrated into the system. With all of the patient information on the radStation display, McEnery said, "This is the perfect time to determine whether the prior report is correct or not."

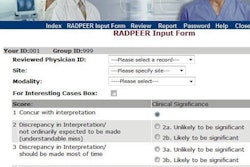

To agree with the prior report, the radiologist simply clicks on the "Agree" button, a process that takes less than one second. Disagreement takes about six seconds: the radiologist clicks on "Disagree," then fills in a dialogue box with the reason for the discordance, such as "missed metastasis."
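The two-button workflow can be pictured as a small record type in which "Agree" needs nothing further and "Disagree" must carry a reason. This is a minimal sketch under stated assumptions, not radStation's actual data model: the field names, the `Verdict` enum, and the `agree`/`disagree` helpers are hypothetical, loosely based on the data items the article lists (patient ID, accession number, dictating radiologist).

```python
from dataclasses import dataclass, field
from datetime import datetime
from enum import Enum
from typing import Optional


class Verdict(Enum):
    AGREE = "agree"
    DISAGREE = "disagree"


@dataclass(frozen=True)
class PeerReview:
    """One peer-review entry. All field names here are hypothetical."""
    patient_id: str
    accession_number: str          # accession number of the prior study
    original_reader: str           # radiologist who dictated the prior report
    reviewer: str                  # radiologist dictating the new report
    verdict: Verdict
    reason: Optional[str] = None   # free text, e.g. "missed metastasis"
    reviewed_at: datetime = field(default_factory=datetime.now)

    def __post_init__(self) -> None:
        # A disagreement is only accepted with a non-blank reason;
        # an agreement needs no further input.
        if self.verdict is Verdict.DISAGREE and not (self.reason and self.reason.strip()):
            raise ValueError("a reason is required when disagreeing with the prior report")


def agree(patient_id: str, accession_number: str, original_reader: str, reviewer: str) -> PeerReview:
    """Counterpart of the one-click "Agree" button."""
    return PeerReview(patient_id, accession_number, original_reader, reviewer, Verdict.AGREE)


def disagree(patient_id: str, accession_number: str, original_reader: str,
             reviewer: str, reason: str) -> PeerReview:
    """Counterpart of "Disagree": the reason typed into the dialog box is mandatory."""
    return PeerReview(patient_id, accession_number, original_reader, reviewer,
                      Verdict.DISAGREE, reason)
```

For example, `disagree("P002", "A456", "Dr. A", "Dr. B", "missed metastasis")` produces a discordant entry, while the same call with a blank reason raises `ValueError`. Putting the check in the record itself keeps the fast path (agreement) to a single call.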

Both kinds of responses are stored in the quality-assurance database, which is separate from the hospital's RIS. Each discordant review generates an e-mail to the original reader one week later, a delay intended to make the process more anonymous. Each month, quality-assurance reports of discordant cases are generated from this SQL database for review by the section chief, McEnery said.
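The storage side described above (a separate SQL database, a one-week delay before the discordance e-mail, and a monthly report of discordant cases) might be sketched as follows. This is an illustrative assumption, not MD Anderson's schema: it uses Python's built-in `sqlite3` with an in-memory database, the table, column, and function names are hypothetical, and actually sending e-mail (and marking it as sent) is left out.

```python
import sqlite3
from datetime import date, timedelta
from typing import Optional

NOTIFY_DELAY = timedelta(weeks=1)  # discordance e-mail goes out one week later


def open_qa_db() -> sqlite3.Connection:
    """Create a throwaway QA database, kept separate from any RIS."""
    conn = sqlite3.connect(":memory:")
    conn.execute("""
        CREATE TABLE peer_review (
            id INTEGER PRIMARY KEY,
            accession_number TEXT NOT NULL,
            original_reader TEXT NOT NULL,
            reviewer TEXT NOT NULL,
            agree INTEGER NOT NULL,   -- 1 = agree, 0 = disagree
            reason TEXT,
            reviewed_on TEXT NOT NULL, -- ISO date, e.g. '2000-05-03'
            notify_on TEXT             -- ISO date; NULL for concordant reviews
        )""")
    return conn


def record_review(conn: sqlite3.Connection, accession: str, original_reader: str,
                  reviewer: str, agree: bool, reviewed_on: date,
                  reason: Optional[str] = None) -> None:
    """Store one review; discordant ones get a notification date a week out."""
    notify_on = None if agree else (reviewed_on + NOTIFY_DELAY).isoformat()
    conn.execute(
        "INSERT INTO peer_review (accession_number, original_reader, reviewer,"
        " agree, reason, reviewed_on, notify_on) VALUES (?, ?, ?, ?, ?, ?, ?)",
        (accession, original_reader, reviewer, int(agree), reason,
         reviewed_on.isoformat(), notify_on),
    )


def notifications_due(conn: sqlite3.Connection, today: date) -> list:
    """Discordant reviews whose one-week delay has elapsed by `today`."""
    # ISO-format date strings sort chronologically, so a string comparison works.
    return conn.execute(
        "SELECT original_reader, accession_number, reason FROM peer_review"
        " WHERE agree = 0 AND notify_on <= ? ORDER BY id",
        (today.isoformat(),),
    ).fetchall()


def monthly_discordant_report(conn: sqlite3.Connection, year: int, month: int) -> list:
    """All discordant reviews from one calendar month, for the section chief."""
    return conn.execute(
        "SELECT reviewed_on, accession_number, original_reader, reviewer, reason"
        " FROM peer_review WHERE agree = 0 AND reviewed_on LIKE ?"
        " ORDER BY reviewed_on",
        (f"{year:04d}-{month:02d}-%",),
    ).fetchall()
```

A discordant review recorded on May 3 would first appear in `notifications_due` on May 10 and would be listed in the May report from `monthly_discordant_report(conn, 2000, 5)`.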

The results have been impressive. In May 2000 the department generated 1,000 random peer reviews, six of them discordant. Although submitting a peer review is optional, radiologists completed one in more than 50% of their cases, saving more than 17 hours of radiologists' time for the month compared with a paper-based system, McEnery said.

According to the study authors, the current radStation system is limited in that it focuses on the interpretation of exams and does not address other quality-assurance measures, such as adherence to technical standards and the appropriateness of the exams ordered. An improved version might notify the radiologist when technical factors need comment, they wrote.

Even so, the system, which the hospital has accepted as meeting the department's peer review requirements, has saved an enormous amount of time compared with the hospital's previous paper-based process, McEnery said.

"RadStation incorporates peer review into the dictation process. It's an efficient method of producing quality-assurance documentation, and [is] clearly a process that can be easily mastered," he said. "The ability to have [radStation] documentation at my fingertips makes me a faster radiologist, and the QA piece is basically a slam-dunk to increase our productivity."

By Eric Barnes

AuntMinnie.com staff writer

June 20, 2000


Copyright © 2000 AuntMinnie.com