Monday, November 27 | 12:15 p.m.-12:45 p.m. | PH217-SD-MOA6 | Lakeside, PH Community, Station 6

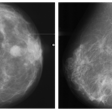

Illinois Institute of Technology researchers will present their work on a virtual dual-energy tool that enables studies to be produced from conventional digital x-ray exams without requiring double exposures.

Dual-energy imaging can be a useful tool in digital x-ray to distinguish between bone and soft tissue, particularly in cases where there are overlapping bone structures, such as in chest studies. But dual-energy exams require specialized x-ray equipment and also two exposures of the patient, with double the radiation dose, according to Kenji Suzuki, PhD.

The Illinois Institute of Technology group theorized that a deep-learning algorithm could learn from dual-energy chest imaging how to separate bones from soft tissue on conventional chest radiographs. The algorithm is based on anatomy-specific orientation-frequency-specific (ASOFS) deep neural network convolution.

The algorithm was trained using four chest x-ray exams with nodules, and after training, it was able to provide a virtual dual-energy bone image in which soft tissue was substantially attenuated while bony structures were preserved. This image was then subtracted from the corresponding chest x-ray to provide a virtual dual-energy (VDE) soft-tissue image with bones removed.
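The subtraction step described above can be illustrated with a minimal sketch. It is not the researchers' implementation: the function name `subtract_bone_image`, the toy pixel values, and the clipping of negative results to a floor value are all assumptions made for illustration, and the bone image is hard-coded here rather than produced by a trained network.

```python
def subtract_bone_image(chest, bone, floor=0.0):
    """Subtract a bone image from a chest image, pixel by pixel.

    Both images are lists of rows of floats with the same shape.
    Clipping at `floor` is an assumption of this sketch, not a
    detail taken from the presentation.
    """
    same_rows = len(chest) == len(bone)
    if not same_rows or any(len(c) != len(b) for c, b in zip(chest, bone)):
        raise ValueError("chest and bone images must have the same shape")
    return [
        [max(c - b, floor) for c, b in zip(chest_row, bone_row)]
        for chest_row, bone_row in zip(chest, bone)
    ]


# Toy 2x3 "radiograph": the middle column stands in for a rib.
chest = [[10.0, 50.0, 12.0],
         [11.0, 55.0, 30.0]]

# Hypothetical bone image: soft tissue suppressed, rib preserved.
bone = [[0.0, 40.0, 0.0],
        [0.0, 44.0, 0.0]]

soft_tissue = subtract_bone_image(chest, bone)
```

In this toy example the rib column drops to roughly the level of its neighbors, while the brighter pixel in the bottom-right corner, standing in for a nodule, is left unchanged.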

The researchers tested the algorithm on 118 chest x-rays, producing bone-like and soft-tissue-like images. The VDE images were judged to be closer to the gold standard of conventional dual-energy images.

The group has additional plans for VDE beyond chest imaging, according to Suzuki.

"We plan to extend our virtual dual-energy imaging to include areas other than the chest, such as bone density measurement and assessment, and we plan to implement our virtual dual-energy chest radiography in temporal subtraction of chest radiographs to reduce bone-induced artifacts in the temporal subtraction," Suzuki said. "By doing so, we expect to solve the major issue of bone-induced artifacts with temporal subtraction."