The verdict is in: radiologists shouldn't waste their time measuring lung nodules manually when computer algorithms can do it faster and far more accurately.

"If we find ourselves competing with computers, and realize we do not do so good a job compared to computers, maybe we should do something else," said Dr. Patrick Rogalla, who laid out the case against humans at Stanford University's 2007 International Symposium on Multidetector-Row CT in San Francisco.

Measuring solitary pulmonary nodules (SPN) isn't a job that can be avoided. Size matters in so many ways -- for disease staging, follow-up to assess growth, and treatment response. Larger SPN are more clinically significant than smaller ones, as they are more likely to represent lung cancer. Clinical management of disease is based on tumor load. And imaging results are critical in the lungs because biopsy is invasive and risky.

"There is not a single study from hundreds of studies in which radiology was not forced to measure SPN sizes and metastases," said Rogalla, a radiologist at Charité Hospital in Berlin.

Follow-up assessments are based on SPN volume change, he said. It has been reported that a volume change smaller than a 30% decrease or a 20% increase represents no change, a decrease of 30% or more represents tumor regression, and an increase of 20% or more represents growth.
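
The three-way classification described above can be written as a small function. This is a minimal sketch that assumes the thresholds exactly as quoted (a decrease of 30% or more counts as regression, an increase of 20% or more as growth); the function names and the sample volumes are hypothetical and are not taken from any clinical software.

```python
def percent_change(baseline: float, follow_up: float) -> float:
    """Relative change from baseline to follow-up, in percent."""
    if baseline <= 0:
        raise ValueError("baseline measurement must be positive")
    return (follow_up - baseline) / baseline * 100.0


def classify_change(change_pct: float) -> str:
    """Apply the thresholds quoted in the article (hypothetical helper).

    <= -30%   -> "regression"
    >= +20%   -> "growth"
    otherwise -> "no change"
    """
    if change_pct <= -30.0:
        return "regression"
    if change_pct >= 20.0:
        return "growth"
    return "no change"


# Hypothetical nodule volumes in mm^3: (baseline, follow-up).
for baseline, follow_up in [(500.0, 650.0), (500.0, 300.0), (500.0, 550.0)]:
    change = percent_change(baseline, follow_up)
    print(f"{baseline} -> {follow_up}: {change:+.1f}% ({classify_change(change)})")
```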

The exception is the Response Evaluation Criteria in Solid Tumors (RECIST), which record the largest diameter of the SPN, generally on axial slices. But this method opens the door to false results when lesions are irregularly shaped, Rogalla said.

"Even if the nodules maintained a round shape, you would need +73% or -66% (change) in volume before we could provide an accurate measurement," he said. "So there is a big, big problem in variability of these measurements, even though (RECIST) is the basis for almost all clinical trials."
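
Rogalla's figures follow from simple geometry if, as he says, the nodule stays perfectly round, and if the reference points are RECIST's +20% and -30% diameter thresholds. The article does not spell out that link, so treat it as an inference. Because a sphere's volume scales with the cube of its diameter, a relative diameter change of x% corresponds to a volume change of (1 + x/100)^3 - 1:

```python
def volume_change_from_diameter_change(diameter_change_pct: float) -> float:
    """Volume change (%) implied by a diameter change (%) for a sphere.

    V = pi * d**3 / 6, so V_new / V_old = (d_new / d_old) ** 3.
    Assumes the nodule keeps a perfectly spherical shape.
    """
    ratio = 1.0 + diameter_change_pct / 100.0
    return (ratio ** 3 - 1.0) * 100.0


for diameter_change in (20.0, -30.0):
    volume_change = volume_change_from_diameter_change(diameter_change)
    print(f"diameter {diameter_change:+.0f}% -> volume {volume_change:+.1f}%")
# diameter +20% -> volume +72.8%
# diameter -30% -> volume -65.7%
```

Rounded to whole percentages, these give the +73% and -66% volume changes in the quote.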

A study of non-small cell lung tumors by Erasmus et al showed significant variability in reported lesion sizes post-treatment, measured using RECIST criteria. In 33 patients with tumors 1.5 cm or larger, tumors were erroneously classified as "progressive" in 9.5% (intraobserver) and 30% (interobserver) of the cases, Rogalla said.

"Measurements of lung tumor size on CT scans are often inconsistent and can lead to an incorrect interpretation of tumor response," Erasmus and colleagues wrote, adding that "consistency can be improved if the same reader performs serial measurements for any one patient" (Journal of Clinical Oncology, July 2003, Vol. 21:13, pp. 2574-2582).

On the other hand, software engineers have spent hundreds of hours developing algorithms to measure nodules accurately and they "are smarter than all of us," he quipped.

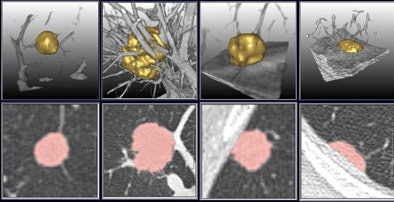

Colleagues from MeVis Technology of Bremen, Germany, Rogalla recounted, have told him that it's not just detection that the software has perfected. "There is a subtraction process, a dilatation process, a shrinking process," and "in the end, the computer comes up with something that looks almost like we have done it manually," Rogalla said. The firm's investigational nodule segmentation algorithm performed accurately in a recent study (RadioGraphics, March-April 2005, Vol. 25:3, pp. 525-536).
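
The "dilatation" and "shrinking" steps correspond to the standard morphological operations of dilation and erosion. The sketch below is a generic textbook illustration, not MeVis' proprietary algorithm. It assumes a tiny hypothetical 2D binary mask in which a nodule touches a one-pixel-wide vessel. It applies an opening (erosion followed by dilation over a 3x3 neighborhood) to detach the vessel, then subtracts the result from the original mask to show what was removed.

```python
# Generic binary morphology on a tiny hypothetical 2D mask.
# Illustration only: NOT MeVis' segmentation algorithm.
Grid = list[list[int]]


def neighborhood(mask: Grid, r: int, c: int) -> list[int]:
    """Values in the 3x3 window centred on (r, c); pixels outside the grid count as 0."""
    rows, cols = len(mask), len(mask[0])
    return [
        mask[r + dr][c + dc] if 0 <= r + dr < rows and 0 <= c + dc < cols else 0
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
    ]


def erode(mask: Grid) -> Grid:
    """'Shrinking': keep a pixel only if its entire 3x3 window is foreground."""
    return [[int(all(neighborhood(mask, r, c))) for c in range(len(mask[0]))]
            for r in range(len(mask))]


def dilate(mask: Grid) -> Grid:
    """'Dilatation': set a pixel if any pixel in its 3x3 window is foreground."""
    return [[int(any(neighborhood(mask, r, c))) for c in range(len(mask[0]))]
            for r in range(len(mask))]


def subtract(a: Grid, b: Grid) -> Grid:
    """Pixels that are set in a but not in b."""
    return [[int(x and not y) for x, y in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]


# 3x3 "nodule" in rows 1-3, columns 1-3, with a one-pixel-wide "vessel" leaving to the right.
mask = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0, 0, 0, 0],
    [0, 1, 1, 1, 1, 1, 1, 1],
    [0, 1, 1, 1, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
]

cleaned = dilate(erode(mask))      # opening: nodule kept, thin vessel dropped
removed = subtract(mask, cleaned)  # what the opening stripped away (the vessel)

for row in cleaned:
    print("".join("#" if px else "." for px in row))
```

Structures narrower than the 3x3 neighborhood do not survive the erosion, so the opened mask keeps the nodule and loses the attached vessel. Practical segmentation tools typically work in 3D and add further steps, such as thresholding and region growing, that this sketch omits.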

A multistep segmentation process yields accurate volumetric measurements for solitary pulmonary nodules. Images courtesy of Lars Bornemann and Dr. Heinz-Otto Peitgen, MeVis Technology.

Testing the method

Rogalla and his team at Charité sought to compare automated and manual measurements in the same group of patients. In the retrospective study, they reviewed a total of 202 pulmonary metastases in 20 patients. CT data had been acquired twice on the same day, once at 5 mAs and once at 50 mAs, using 1-mm slice thickness on either a 16- or 64-detector-row scanner (Aquilion 16 or 64, Toshiba Medical Systems, Tokyo).

"We had a total of 1,600 segmentations, by two readers using RECIST criteria," Rogalla said. MeVis' OncoTREAT software package was used to evaluate the same data to provide a true volumetric measurement, in both the high- and low-dose versions of the images. "The automatic volume measurement ... calculates the ideal diameter of the nodule to make the comparison possible," Rogalla said.
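
The article does not say how OncoTREAT derives its "ideal diameter." This sketch assumes a common convention: the diameter of a sphere whose volume equals the segmented nodule volume. The function names are hypothetical.

```python
import math


def equivalent_sphere_diameter(volume_mm3: float) -> float:
    """Diameter (mm) of a sphere with the given volume (mm^3).

    Inverts V = pi * d**3 / 6, giving d = (6 * V / pi) ** (1/3).
    """
    if volume_mm3 < 0:
        raise ValueError("volume must be non-negative")
    return (6.0 * volume_mm3 / math.pi) ** (1.0 / 3.0)


def sphere_volume(diameter_mm: float) -> float:
    """Volume (mm^3) of a sphere with the given diameter (mm)."""
    return math.pi * diameter_mm ** 3 / 6.0


# Round trip: an 8-mm sphere has a volume of about 268 mm^3.
volume = sphere_volume(8.0)
print(f"{volume:.1f} mm^3 -> {equivalent_sphere_diameter(volume):.2f} mm")
```

Converting a volume back to a diameter in this way permits a like-for-like comparison with RECIST diameters. For an irregular nodule, however, the equivalent diameter will generally differ from the longest axial diameter a reader would record.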

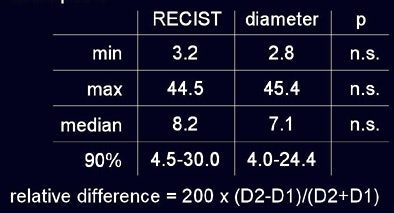

No nodules were excluded, though 12% were not segmented by the software, he said. Comparing the radiologists' RECIST measurements with the automated diameter measurements, the minimum tumor size was 3.2 mm versus 2.8 mm, the maximum 44.5 mm versus 45.4 mm, and the median 8.2 mm versus 7.1 mm. With RECIST measurements, 90% of lesions ranged from 4.5 to 30 mm in size, versus 4.0 to 24.4 mm with automated measurements; none of these differences were statistically significant.

"You can see that overall there was no difference in sizing, which makes you believe we are accurate," he said. But the problem lay elsewhere -- in measurement variability, where the results of the two methods were worlds apart.

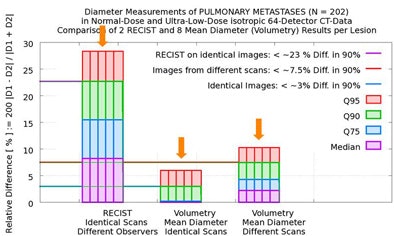

When the algorithm measured each nodule twice on the initial scan, the two measurements differed by less than 3% in 90% of cases. Even when it measured the same nodule on the two different scans of the same patient, the difference remained below 7.5% in 90% of cases, Rogalla said.

Human readers, who each measured twice using RECIST criteria, showed far more variation. In 90% of cases, the difference between their two measurements reached as much as 23%. That maximum-diameter difference exceeds the threshold for classifying tumor growth as "progressive" and could therefore lead to lesions being misclassified, Rogalla said. Across all cases, the difference between the two measurements reached 43%.
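
Figures such as "less than 3% in 90% of cases" describe a percentile of the paired differences, while the 43% figure is the maximum. The sketch below shows one way to compute both. It assumes that the difference is expressed relative to the mean of the two measurements and that a nearest-rank percentile is used; the article specifies neither. The diameters are invented values, not study data.

```python
import math


def percent_difference(first: float, second: float) -> float:
    """Absolute difference of two repeated measurements, as % of their mean."""
    mean = (first + second) / 2.0
    return abs(first - second) / mean * 100.0


def nearest_rank_percentile(values: list[float], q: float) -> float:
    """Smallest value such that at least q% of the values are <= it."""
    if not values or not 0 < q <= 100:
        raise ValueError("need non-empty values and 0 < q <= 100")
    ordered = sorted(values)
    rank = math.ceil(q / 100.0 * len(ordered))
    return ordered[rank - 1]


# Increase quoted in the article as the threshold for "growth."
PROGRESSION_THRESHOLD_PCT = 20.0

# Hypothetical repeated diameter readings in mm: (first read, second read).
pairs = [(10.0, 10.0), (8.0, 8.4), (12.0, 11.0), (6.0, 7.0), (20.0, 19.0),
         (9.0, 9.9), (15.0, 15.3), (5.0, 6.5), (7.0, 7.1), (11.0, 13.0)]
diffs = [percent_difference(a, b) for a, b in pairs]

p90 = nearest_rank_percentile(diffs, 90)
worst = max(diffs)
above = sum(d >= PROGRESSION_THRESHOLD_PCT for d in diffs)
print(f"90th percentile: {p90:.1f}%  maximum: {worst:.1f}%  "
      f">= {PROGRESSION_THRESHOLD_PCT:.0f}%: {above} of {len(diffs)}")
```

As in the study, the 90th percentile can sit below the progression threshold while the single worst pair exceeds it. This is why the article reports both numbers.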

In a study of 20 patients with 202 non-small cell lung metastases, the overall diameter differences as measured by radiologists using RECIST criteria and investigational volumetry software were not statistically significant (above). However, manual measurements demonstrated variability as large as 23% in 90% of the cases (below), sufficient to misclassify lesion progression.

A 2006 Dutch study performed on data from the Nelson lung screening trial showed similarly wide variances, Rogalla said.

In the study, Dr. Hester Gietema and colleagues from University Medical Center Utrecht in the Netherlands had two radiologists evaluate a total of 430 nodules from the multicenter Nelson lung screening trial (Radiology, October 2006, Vol. 241:1, pp. 251-257).

Discrepant results were reported for 47 nodules (10.9%); in 16 cases (3.7%), the discrepancy was larger than 10%. The most frequent cause of variability was incomplete segmentation due to an irregular shape or irregular margins, they reported.

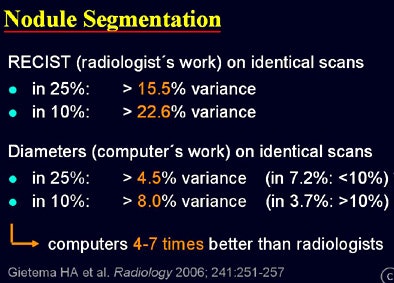

Rogalla's analysis of Gietema and colleagues' results found wide discrepancies. Two radiologists measuring each nodule manually using RECIST criteria produced 15.5% diameter variation in 15.5% of cases, and 22.6% variance in 10% of cases.

The computer software, measuring the identical lesions, produced 4.5% variance in 25% of the scans and a maximum of 8% variance in 10% of the scans, results Rogalla characterized as four to seven times better than those of the radiologists for the same lesions.

Results from 450 nodules measured by radiologists using RECIST criteria and automated volumetry show far greater variability in manual measurements: as much as 22.6% variance in 10% of cases, versus 8% in the same group of automated measurements.

"There is much lower variance when (software measurements) are applied twice to the same dataset," Rogalla said. "That is quite comparable to results in the literature, although the numbers are presented in a different way, they are pretty much alike. We can conclude that computers perform four to seven times better than radiologists. Radiologists should not waste their time sizing ... in this (task); they are superfluous."

Scanner brands consistent

A just-published study by Dr. Marco Das, Gietema, and colleagues from Aachen University in Germany took the question of automated volumetry a step further, comparing automated measurements made on different scanner models in a phantom. The phantom contained nodules 3 mm to 10 mm in diameter in five categories (intraparenchymal, around a vessel, vessel attached, pleural, and attached to the pleura) (European Radiology, August 2007, Vol. 17:8, pp. 1979-1984).

They scanned the phantom using four different 16-slice MDCT scanners (Siemens Medical Solutions, Erlangen, Germany; GE Healthcare, Chalfont St. Giles, U.K.; Philips Medical Systems, Andover, MA; and Toshiba Medical Systems), measured nodule volume automatically, and calculated the absolute percentage volume error (APE) for each machine.

"The mean APE (absolute percentage volume errors) for all nodules was 8.4% (± 7.7%) for data acquired with the 16-slice Siemens scanner, 14.3% (± 11.1%) for the GE scanner, 9.7% (± 9.6%) for the Philips scanner and 7.5% (± 7.2%) for the Toshiba scanner, respectively," the group wrote.

In results that could help validate the significance of follow-up studies performed on different scanners, the group concluded that automated "nodule volumetry is accurate with a reasonable volume error in data from different scanner vendors."
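
The absolute percentage error compares each automated volume with the phantom nodule's known volume and expresses the deviation as a percentage of the known volume; the study then reports the mean and spread across nodules. This is a minimal sketch that assumes the ± values are sample standard deviations. The measurements are invented and do not come from the study.

```python
import math
import statistics


def absolute_percentage_errors(measured: list[float], reference: list[float]) -> list[float]:
    """APE per nodule: |measured - reference| / reference * 100."""
    if len(measured) != len(reference):
        raise ValueError("measured and reference lists must have the same length")
    return [abs(m - r) / r * 100.0 for m, r in zip(measured, reference)]


def summarize(errors: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation of the errors (needs >= 2 values)."""
    return statistics.mean(errors), statistics.stdev(errors)


# Reference volumes (mm^3) of hypothetical spherical phantom nodules, 3-10 mm across.
diameters_mm = [3.0, 5.0, 8.0, 10.0]
reference = [math.pi * d ** 3 / 6.0 for d in diameters_mm]
# Invented automated measurements for one scanner (15%, 8%, 5%, 3% off).
measured = [v * f for v, f in zip(reference, [1.15, 0.92, 1.05, 0.97])]

mean_ape, sd_ape = summarize(absolute_percentage_errors(measured, reference))
print(f"mean APE {mean_ape:.1f}% (± {sd_ape:.1f}%)")
```

Because the error is taken as an absolute value, over- and underestimates do not cancel out. A scanner's mean APE therefore reflects the typical size of the error rather than any systematic bias.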

By Eric Barnes

AuntMinnie.com staff writer

August 31, 2007

Copyright © 2007 AuntMinnie.com