A group of mostly medical students had no trouble accurately reading virtual colonoscopy (VC or CT colonography) studies after comprehensive training, according to a new study from the University of Chicago.

Several previous studies have examined the relationship between performance and training, but in all of them sensitivity and specificity for the detection of colon polyps and cancer have been highly variable among readers. There was one other thread linking these earlier studies, explained study author Dr. Abraham Dachman and colleagues in the online version of Radiology.

"In all studies published to date, a study design involving negligible or either minimal informal or semiformal individual training of the readers has been used," they wrote. "In contrast, according to American College of Radiology practice guidelines and American Gastroenterological Association recommendations, a relatively large number of cases should be reviewed before clinical participation in CT colonographic data interpretation" (Radiology, October 2008, Vol. 248:1, pp. 167-177).

Dachman, along with Dr. Katherine Kelly, Michael Zintsmaster, Dr. Rich Rana, and colleagues, implemented a "highly regimented and consistent" training program for novice readers, followed by performance evaluation in a polyp-rich cohort from a previous trial of colonoscopy-confirmed cases.

"The study design also required ongoing incremental learning during the formative evaluation phase," which included incremental unblinding of the results compared to colonoscopy for each study read, they noted.

The study tested its novice readers on 60 virtual colonoscopy cases from a previously published trial. There were 10 normal and 50 abnormal cases containing a total of 93 polyps: 61 lesions 6-9 mm in diameter and 32 polyps 10 mm or larger.

An expert reader selected the cases carefully to represent a range of subjective difficulty ratings. The researchers aimed to select a balance of easy, moderate, and difficult cases in terms of polyp conspicuity and size, morphology, surrounding folds, retained fluid, colon distension, and other factors. Twenty percent of the cases had been determined to be false-negative detections in the original trial, the authors noted. Within each reading difficulty category, the cases were selected without regard to polyp size, excluding only inconclusive or technically inadequate cases.

The seven readers included one abdominal imaging fellow and six medical students (in years one through four of training) who had expressed an interest in radiology. None had prior VC experience.

The multipart training program consisted of a one-day course that included software training, introductory lectures on performing VC, interpretation in 2D and 3D, reporting findings, and 3.5 hours of reading unknown cases.

Reading assignments included an atlas and a packet of key articles on diagnostic accuracy, interpretation and reporting pitfalls, and training issues.

The observers then spent approximately 1.8 hours working with a training laptop computer that had selected portions of 61 cases with examples of typical polyps (sessile, pedunculated, flat, and annular) and common sources of false-positive interpretations, including residual stool, complex folds, etc., Dachman and his team wrote.

Next, the readers were given two types of reading software (Vitrea 2, Vital Images, Minnetonka, MN; Advantage Windows Workstation, GE Healthcare, Chalfont St. Giles, U.K.) for practicing on 10 unknown cases and completing case reporting forms. The 60 study cases were read in groups of 20 cases at a time, using a primary 2D interpretation method with 3D problem solving and correlation of findings.

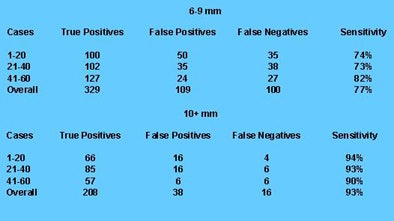

The results showed that the lowest per-patient sensitivity for polyps 6 mm and larger was 86% with 90% specificity. On a per-patient basis, four of the readers had 100% sensitivity for polyps 10 mm and larger, with specificity ranging from 82% to 97%. The mean sensitivity and specificity for polyps 10 mm and larger were 98% and 92%, respectively. On a per-polyp basis, overall observer sensitivity ranged from 64% to 87% (mean 77%) for 6-9-mm polyps, and from 91% to 97% (mean 93%) for polyps 10 mm and larger.
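As a rough illustration of how the per-patient figures above are derived, the following sketch computes sensitivity and specificity from the study's case mix of 50 abnormal and 10 normal cases. The reader tallies (`detected_abnormal`, `cleared_normal`) are hypothetical values chosen only to be consistent with the lowest reported per-patient result; they are not taken from the paper.

```python
def sensitivity(true_positives: int, false_negatives: int) -> float:
    """Fraction of abnormal cases that the reader correctly called abnormal."""
    return true_positives / (true_positives + false_negatives)


def specificity(true_negatives: int, false_positives: int) -> float:
    """Fraction of normal cases that the reader correctly called normal."""
    return true_negatives / (true_negatives + false_positives)


# Case mix reported in the article: 50 abnormal and 10 normal cases.
ABNORMAL_CASES = 50
NORMAL_CASES = 10

# Hypothetical tallies for one reader (illustrative assumption, not study data).
detected_abnormal = 43  # abnormal cases the reader flagged
cleared_normal = 9      # normal cases the reader called normal

reader_sensitivity = sensitivity(detected_abnormal, ABNORMAL_CASES - detected_abnormal)
reader_specificity = specificity(cleared_normal, NORMAL_CASES - cleared_normal)

print(f"sensitivity {reader_sensitivity:.0%}, specificity {reader_specificity:.0%}")
```

With only 10 normal cases in the cohort, each false-positive patient shifts specificity by 10 percentage points, which is one reason results from a polyp-enriched cohort may not carry over directly to a screening population.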

Combined by-polyp results in 429 polyps 6-9 mm and 224 polyps ≥ 10 mm. All images courtesy of Dr. Abraham Dachman, from an earlier presentation of the study data.

"The readers' detection of 6-9-mm polyps tended to improve as they gained more experience, but this trend was not significant (p > 0.05)," the authors wrote. "Conversely, the number of false-positive findings detected in each 20-case group decreased significantly with experience (p = 0.04)." False positives decreased from a mean 9.43 in the first 20 cases to a mean 4.29 in the last 20 cases.

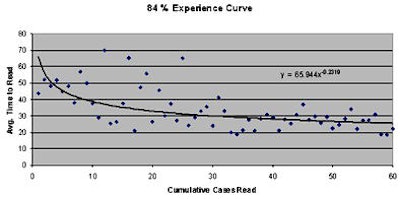

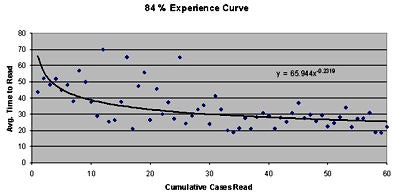

Reading time also improved significantly with experience, from a mean 43 minutes for the first 20 cases to a mean 25 minutes for the last 20 cases (p = 0.001). "As the trial proceeded, more cases were interpreted in 15 minutes or less, such that 18 of the last 20 cases were interpreted in 15 minutes or less by at least one observer: eight by one observer, three by two observers, and seven by three or more observers," the group wrote.

Eighty-four percent experience curve means that for the 60 cases interpreted by each reader, the average time to read a case decreased by 16% for every doubling of experience. Average reading time is in minutes.
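The 84% experience curve described in the caption can be sketched with the standard power-law learning-curve form, in which the average time falls to 84% of its previous value each time the number of cases read doubles. This is a minimal sketch under that assumption; the article does not state the exact model the authors fitted, and the starting value `first_case_minutes = 40.0` is a hypothetical placeholder rather than a figure from the study.

```python
import math


def experience_curve_minutes(first_case_minutes: float,
                             cases_read: int,
                             learning_rate: float = 0.84) -> float:
    """Average reading time after `cases_read` cases under a power-law curve.

    Each doubling of `cases_read` multiplies the average time by
    `learning_rate` (0.84 corresponds to a 16% decrease per doubling).
    """
    if cases_read < 1:
        raise ValueError("cases_read must be at least 1")
    exponent = math.log2(learning_rate)  # negative for learning_rate < 1
    return first_case_minutes * cases_read ** exponent


# Hypothetical starting point (assumption for illustration only).
first_case_minutes = 40.0

for cases_read in (1, 2, 4, 8, 16, 32, 60):
    minutes = experience_curve_minutes(first_case_minutes, cases_read)
    print(f"after {cases_read:2d} cases: {minutes:5.1f} min on average")
```

Because the decrease is tied to doublings rather than to a fixed number of cases, most of the time savings under this model come early, and gains flatten as the reader approaches the 60th case.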

Interobserver variability was low, as demonstrated by areas under the receiver operating characteristic (ROC) curve that ranged from 0.86 to 0.95 across readers.

"Reader performances in a screening setting are expected to differ owing to the nature of the sample and laboratory effects such as restricted reading time, but our results indicate that a two-arm evaluation or pre- versus post-training evaluation performed on an expensive and large scale could be justified," Dachman and his team wrote.

Multiple studies have described the highly variable performance of novice readers, but these studies have not accounted for the wide range of training components required for successful reading, they wrote.

"For example, because there are well-known interpretation pitfalls at CT colonography, the selection of illustrative cases that demonstrate these pitfalls is imperative," the authors wrote. "Moreover, the number of cases does not convey observers' familiarity and comfort with interactive review at a computer workstation. Other challenges are related to polyp measurement, and proper point-to-point comparisons of 2D views versus 3D images and supine versus prone images. Therefore, studies of reader training in CT colonography should be highly specific in terms of the content and methods of the training and not merely describe the number of proved cases interpreted and/or the amount of time spent with an expert observer."

The methods applied to the training of these novice readers could be used for training, testing, and certifying radiologists to interpret VC data, and indeed some of them will likely be applied to future American College of Radiology (ACR) training courses.

"Baker et al recently demonstrated that the routine use of computer-assisted detection as a second reader improved the performance of inexperienced radiologists," the authors wrote. "In the same vein, experienced nonradiologists could be used as second readers to train radiologists or as standard second readers for clinical cases."

The main limitations of the study were its polyp-enriched cohort and the absence of any restriction on reading time, both of which might have boosted the results, the authors noted. In addition, the study design did not allow the investigators to determine which training components were most effective. Finally, the readers will need substantial additional training in the details of patient preparation, CT colonography data acquisition, and the detection of extracolonic abnormalities.

"In a polyp-enriched formative evaluation cohort, novice CT colonographic data readers can achieve high sensitivity and good specificity while undergoing a tailored but comprehensive training program," they concluded.

By Eric Barnes

AuntMinnie.com staff writer

September 18, 2008

Copyright © 2008 AuntMinnie.com