ChatGPT-4 demonstrated high accuracy in analyzing and interpreting thyroid and renal ultrasound images in a small study published March 19 in Radiology Advances.

Researchers led by Laith Sultan, MD, from Children’s Hospital of Philadelphia reported that the large language model could accurately perform image segmentation and classify lesions on ultrasound as normal or abnormal.

“These functionalities indicate the tool's potential to improve radiological workflows by pre-screening and categorizing ultrasound images,” Sultan told AuntMinnie.com. “This technology, if implemented in clinical practice, will have great potential in enhancing medical image interpretation and healthcare outcomes.”

Ultrasound is a versatile modality, and with the advent of point-of-care ultrasound (POCUS) it is now performed in a wide range of settings both inside and outside of radiology. However, it remains highly user-dependent.

AI algorithms, meanwhile, aim to help radiologists and other practitioners who use ultrasound interpret images and to lessen their workloads. ChatGPT and other large language models continue to be explored for their clinical utility, and the researchers highlighted ChatGPT's potential and user-friendliness.

Sultan and colleagues tested how well ChatGPT-4, the latest iteration of ChatGPT, analyzed thyroid and renal ultrasound images.

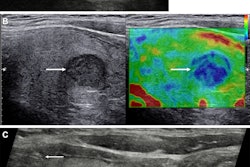

In one test, the team used the large language model to analyze a thyroid nodule on an ultrasound image. The researchers asked ChatGPT-4 to find and mark the lesion on the image and to provide a differential diagnosis. ChatGPT-4 successfully placed a box around the lesion and listed differential diagnoses for the outlined nodule, the team reported.

The researchers also tasked ChatGPT-4 with outlining the thyroid gland and the lesion specifically, and the model performed this segmentation "with high accuracy."

In another test, the team instructed ChatGPT-4 to separate images with normal findings from those with abnormalities. The team reported that the model classified cases with high accuracy and described the findings and diagnoses in the images.

Sultan said that while the results are promising, there are key factors to consider for future improvements.

“Having a manual tool for image segmentation could greatly enhance utility. Additionally, potential technical challenges such as ChatGPT user load, internet connectivity, and limited memory capacity might arise,” he told AuntMinnie.com. “On the other hand, the strengths lie in its user-friendliness and accessibility, making ChatGPT-4 a potentially valuable asset in streamlining radiology workflows in the future.”

Sultan added that the researchers are now conducting a more extensive study that uses a comprehensive dataset for image analysis.

“The outcomes of this study will be benchmarked against an established software,” he said. “Additionally, we have initiated a project focused on assessing the efficacy of GPT-4 in enhancing radiology report quality.”

However, the study has drawn criticism over its small sample size and over ChatGPT-4's actual classification abilities.

Woojin Kim, MD, a musculoskeletal radiologist and chief medical information officer at Rad AI, posted on X (formerly Twitter) that ChatGPT-4 has shortcomings in outlining regions of interest and identifying abnormalities on thyroid and renal ultrasound images. Among them, he noted, is the need to prompt the large language model multiple times.

The full study is available in Radiology Advances.