The U.S. National Institutes of Health (NIH) has highlighted a study that showed OpenAI’s multimodal AI model GPT-4 Vision (GPT-4V) can solve medical quiz questions based on clinical images and a brief text summary, but it was prone to mistakes.

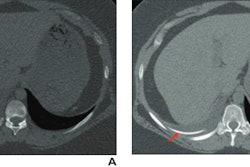

NIH researchers tested the AI model and nine nonradiologist physicians from different specialties on 207 questions from the New England Journal of Medicine’s “Image Challenge.” Both GPT-4V and the physicians scored highly on the multiple-choice questions, yet GPT-4V often made mistakes when describing the image and explaining the reasoning behind its diagnosis, even when it made the correct final choice.

“As this study shows, AI is not advanced enough yet to replace human experience,” noted National Library of Medicine (NLM) Acting Director Stephen Sherry, PhD, in a news release.

The New England Journal of Medicine’s “Image Challenge” is an online quiz that provides real clinical images and a short text description that includes details about a patient’s symptoms and presentation. It asks users to choose the correct diagnosis from multiple-choice answers. The quiz includes CT, X-ray, pathology, MRI, and macroscopic clinical images.

In the study, published July 23 in npj Digital Medicine, nine physicians and GPT-4V answered their assigned questions first in a “closed-book” setting (without referring to any external materials such as online resources) and then in an “open-book” setting (using external resources).

The researchers then provided the physicians with the correct answer, along with the AI model’s answer and corresponding rationale. Finally, the physicians were asked to score the AI model’s ability to describe the image, summarize relevant medical knowledge, and provide its step-by-step reasoning.

According to the findings, both the AI model and the physicians scored highly in selecting the correct diagnosis. The AI model selected the correct diagnosis more often than physicians in the closed-book setting (81.6% to 77.%), while physicians with open-book tools outperformed the AI, reaching an accuracy of 95.2%. Notably, GPT-4V correctly answered 7 of the 10 questions that physicians answered incorrectly in the open-book setting.

“This suggests that GPT-4V holds potential in decision support for physicians,” the authors wrote.

However, based on physician evaluations, the AI model often made mistakes when describing the medical image and explaining its reasoning.

In one example, the AI model was provided with a photo of a patient’s arm with two lesions, which physicians would easily recognize as being caused by the same condition. Yet the lesions were presented at different angles, which caused the illusion of different colors and shapes, and GPT-4V failed to recognize that they could be related to the same diagnosis, NIH said.

Ultimately, the researchers argue that these findings underscore the importance of evaluating multimodal AI technology further before introducing it into the clinical setting.

“Understanding the risks and limitations of this technology is essential to harnessing its potential in medicine,” added NLM Senior Investigator and corresponding author Zhiyong Lu, PhD.
