ChatGPT is on a par with medical students when it comes to passing free-response clinical reasoning exams that include radiology-related questions, according to research published on July 17 in JAMA Internal Medicine.

A team led by Eric Strong, MD, from Stanford University in California reported that GPT-4, the fourth-generation model behind ChatGPT, scored slightly higher than the students and had a comparable passing rate on the exams.

"Our findings underscore challenges and opportunities for medical training and practice and suggest likelihood of more future dramatic advances," Strong and colleagues wrote.

ChatGPT has been buzzing in radiology circles, with the technology showing it could have a role in patient-facing communications and in supporting diagnostic imaging decisions. However, it has also shown that it still has a long way to go in the field, with the large language model struggling to provide simplified answers to medical questions and to produce factually accurate articles.

In March, parent company OpenAI released GPT-4 as an upgrade to the original system, GPT-3.5, saying the upgrade provides safer and more useful responses. A study published in March 2023 showed that GPT-4 passed a simulated multiple-choice U.S. Medical Licensing Examination.

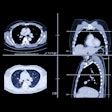

Strong and co-authors wanted to find out how well the chatbot could respond to free-response, multiphase, case-based questions. They used clinical reasoning final exams given to first- and second-year students at Stanford University. Exam prompts included clinical data such as chest x-rays and head CT exams. The team compared the performance of the students with that of GPT-4 and GPT-3.5.

The researchers reported that GPT-4 scored an average of 4.2 points higher than the students (p = 0.02). They also found that passing rates were comparable: 93% for GPT-4 and 85% for the students (p = 0.40). Additionally, the team reported that GPT-4 scored 18 points higher than GPT-3.5 (p < 0.001) and had a higher passing rate than its predecessor, 93% versus 43% (p < 0.001).

The researchers also tested the models on a high-complexity case, which was run 20 times to evaluate variation in chatbot responses. They found that GPT-4 had higher scores and lower variation than GPT-3.5, with a passing rate of 100% versus 35% (p < 0.001).

Finally, the team reported that GPT-4 outperformed the students by 16 points in creating a problem list for clinical reasoning (p < 0.001). However, differences in all other skills assessed were not statistically significant.

Based on the results, the study authors suggested that medicine should integrate AI-related topics into clinical training and continuing medical education.

"As the medical community had to learn online resources and electronic medical records, the next challenge is learning judicious use of generative AI to improve patient care," they wrote.


In an accompanying editorial, Cary Gross, MD, from the Yale School of Medicine and Eric Ward, MD, from the University of California, San Francisco, wrote that future studies should be designed to evaluate the performance of ChatGPT and other chatbots as they are updated and as new iterations are developed. They added that evidence is needed to guide patient-centered adoption of these chatbots in healthcare and medical education.

"A failure to appreciate the unique aspects of the technology could lead to incorrect or unreproducible evaluations of its performance and premature dissemination into clinical care," they wrote.