ChatGPT-4 (GPT-4) shows promise for improving disease diagnosis in complex cases by analyzing medical information such as radiology reports, according to findings published August 14 in JAMA Network Open.

In a study of six patients with a delayed diagnosis across different clinical scenarios, researchers led by Yat-Fung Shea, MBBS, from Queen Mary Hospital in Hong Kong found that GPT-4 was accurate in two-thirds of primary diagnoses and over 80% of differential diagnoses, outperforming clinicians alone.

"Overall, GPT-4 has potential clinical use in older patients without a definitive clinical diagnosis after one month, but requires comprehensive entry of demographic and clinical, including radiological and pharmacological, information," Shea and co-authors wrote.

The use of AI in diagnosing diseases and conditions, often from imaging data, continues to grow. AI could also help in low-income countries, where specialists may be few and far between.

OpenAI launched GPT-4 in 2023, improving on the large language model underlying the original ChatGPT. In radiology, GPT-4 has performed well in decision-making that follows criteria from the American College of Radiology (ACR) and has passed case-based imaging quizzes. The researchers noted that GPT-4 allows clinical history from daily practice to be analyzed.

Shea and colleagues wanted to find out whether GPT-4 could help clinicians more accurately supply the most probable diagnosis or suggest differential diagnoses in complex cases.

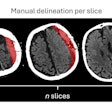

The team tested GPT-4, clinicians, and the Isabel DDx Companion diagnostic decision-support system (Isabel Healthcare) in diagnosing six patients aged 65 years and older based on their medical histories, including radiological and pharmacological data. The patients had experienced a delay of more than one month in reaching a definitive diagnosis in 2022, and their cases were retrieved after resolution.

The researchers found that GPT-4 outperformed the clinicians and Isabel DDx Companion in making both primary and differential diagnoses.

Performance of GPT-4, clinicians, and decision support system

| Accuracy | Isabel DDx Companion | Clinicians | GPT-4 |
| --- | --- | --- | --- |
| Primary diagnoses | 0% | 33.3% | 66.7% |
| Differential diagnoses | 33.3% | 50% | 83.3% |
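
Because the study included only six patients, the reported percentages can be read as case counts. The short Python sketch below makes that arithmetic explicit. It assumes every percentage in the table is a share of all six patients; the article does not state the denominators, and the names `reported` and `implied_count` are illustrative only.

```python
N_PATIENTS = 6  # cohort size reported in the article

# Accuracy percentages as reported in the table above.
reported = {
    "Isabel DDx Companion": {"primary": 0.0, "differential": 33.3},
    "Clinicians": {"primary": 33.3, "differential": 50.0},
    "GPT-4": {"primary": 66.7, "differential": 83.3},
}


def implied_count(percent, n=N_PATIENTS):
    """Return the whole number of cases out of n closest to a reported percentage.

    Assumption: the percentage was computed over all n patients.
    """
    return round(percent / 100 * n)


# Convert every reported percentage into an implied number of correct cases.
implied = {
    rater: {kind: implied_count(pct) for kind, pct in scores.items()}
    for rater, scores in reported.items()
}

for rater, counts in implied.items():
    print(
        f"{rater}: primary {counts['primary']}/{N_PATIENTS}, "
        f"differential {counts['differential']}/{N_PATIENTS}"
    )
```

Under that assumption, each patient accounts for roughly 16.7 percentage points of accuracy.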

The researchers also studied changes in GPT-4's responses and found that certain keywords are needed for the model to make appropriate clinical responses. These included the following: abdominal aortic aneurysm (patient 1), proximal stiffness (patient 2), acid-fast bacilli in urine (patient 3), metronidazole (patient 4), and retroperitoneal lymphadenopathy (patient 6).

The team also reported that GPT-4 could suggest diagnoses that clinicians did not consider before definitive investigations. These included the following: mycotic aneurysm for patient 1 after CT imaging showed an abdominal aortic aneurysm; a drug cause of seizure in patient 5; and the presence of necrotic lymph nodes from a previous CT scan, which should have led to the diagnosis of lymphoma, in patient 6.

The study authors suggested that, based on their results, GPT-4 could help increase clinicians' confidence in their diagnoses. They also wrote that it could make suggestions similar to those of specialists and could be useful in low-income countries lacking resources for specialist care.

The full report can be found here.