Researchers have developed an augmented reality (AR) technique to visualize 3D virtual guidance lines directly on a patient during fluoroscopy-guided orthopedic surgery, reducing procedure time and radiation exposure, according to a study published in the April issue of the Journal of Medical Imaging.

Mathias Unberath, PhD, from Johns Hopkins University.


"[Using a] marker that is visible by the x-ray system and the head-mounted display, we can immerse the surgeon in an augmented reality environment in unprepared operating rooms," co-author Mathias Unberath, PhD, told AuntMinnie.com. "From a clinical perspective, our hope is that such less-intrusive systems will find broader application since their benefit is not outweighed by notable disruption of the surgical workflow."

Assessing spatial relations

Fluoroscopic C-arms are the standard intraoperative modality for image-guided orthopedic surgeries. Though useful, the 2D nature of the images necessitates the acquisition of many x-ray scans from multiple angles for the surgeon to be able to estimate the 3D position of structures within the field-of-view.

For more continuous and 3D visualization during surgery, clinicians have turned to several different strategies that often involve attaching optical markers to patients and using infrared cameras to view previously acquired CT or MRI datasets on a monitor, the authors noted. Most of these methods come at a high cost and also require additional steps that increase procedure time.

Unlike these methods, augmented reality integrates image visualization into the surgical workflow and enables surgeons to visualize annotations to medical images directly on a patient, Unberath said. Prior studies using AR have relied on external navigation devices and pre- or intraoperative CT scanning only available on high-end C-arm machines.

A HoloLens screen capture in one-view mode of the surgical suite showing virtual lines pinpointing a targeted area on a marker. All images courtesy of Mathias Unberath, PhD.

"Our recent research on medical augmented reality has focused on enabling this technology in the operating room without substantial changes to the clinical workflow," he said. "We wanted to provide virtual content designated for procedural guidance at the surgical site to help hand-eye coordination in image-guided procedures, suggesting that we needed to somehow calibrate the AR display to the patient or images of the patient."

AR and C-arm calibration

To achieve this goal, Unberath and colleagues developed a technique combining AR and C-arm fluoroscopy. They started by creating a marker consisting of lead on one side and a paper printout of a conventional marker available in the open-source software ARToolKit on the other side. This multimodality marker was detectable by both AR devices and C-arms.

Next, they used a C-arm (Arcadis Orbic, Siemens Healthineers) to acquire fluoroscopy images of the marker and then wirelessly transferred these images to an optical see-through Microsoft HoloLens headset using a frame grabber (Epiphan Systems). They followed a series of steps to calibrate the C-arm machine to the "world coordinate system," or spatial map, associated with the HoloLens headset, including the following:

- Positioning the C-arm over a targeted region

- Situating the multimodality marker so that it was visible with the HoloLens in the C-arm field-of-view

- Using voice commands to acquire x-ray images

- Annotating landmarks around a targeted area on the x-ray images

They reported an average positional error of 21.4 mm and an average rotational error of 0.9° between the fluoroscopy images acquired with the C-arm and those seen with the HoloLens headset.
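The calibration idea can be illustrated with a little geometry: if both devices estimate the pose of the same marker, chaining those two poses relates the C-arm to the HoloLens world coordinate system, and the result can be compared against a reference pose to get positional and rotational errors. The following is a minimal sketch under that assumption, not the authors' implementation; the function names and all pose values are hypothetical.

```python
import math

def matmul(a, b):
    """Multiply two square matrices given as nested lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def invert_rigid(t):
    """Invert a 4x4 rigid transform [R | p] as [R^T | -R^T p]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    p_inv = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[i] + [p_inv[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]

def rot_z(deg, tx=0.0, ty=0.0, tz=0.0):
    """Build a 4x4 pose: rotation about z by `deg` degrees plus a translation in mm."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0, tx], [s, c, 0.0, ty],
            [0.0, 0.0, 1.0, tz], [0.0, 0.0, 0.0, 1.0]]

def pose_error(t_a, t_b):
    """Return (translation distance in mm, rotation angle in degrees) between two poses."""
    dist = math.dist([t_a[i][3] for i in range(3)], [t_b[i][3] for i in range(3)])
    rel = matmul(invert_rigid(t_a), t_b)
    trace = rel[0][0] + rel[1][1] + rel[2][2]
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))  # clamp against rounding
    return dist, math.degrees(math.acos(cos_angle))

# Hypothetical marker poses as estimated by each device (mm, degrees).
world_from_marker = rot_z(30.0, 100.0, 50.0, 0.0)  # marker seen by the headset
carm_from_marker = rot_z(10.0, 0.0, 0.0, 400.0)    # marker seen in the x-ray image

# Chain the two poses through the shared marker.
world_from_carm = matmul(world_from_marker, invert_rigid(carm_from_marker))

# Compare against a hypothetical reference pose to get the two error figures.
reference = rot_z(21.0, 103.0, 54.0, -400.0)
position_error_mm, rotation_error_deg = pose_error(world_from_carm, reference)
```

The translation error is simply the distance between the two origins, while the rotation error is the angle of the relative rotation, which is why the two figures are reported in different units.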

After calibration, they made point and line annotations on the x-ray images, which appeared as corresponding virtual lines and planes when viewed through the HoloLens. These virtual guides can help surgeons intraoperatively locate entry points on the body for orthopedic procedures such as fracture repair, the authors noted.
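Geometrically, a point marked on a 2D x-ray image corresponds to a 3D line: the ray running from the x-ray source through that spot on the detector. The sketch below shows this under a simplified pinhole model with the source at the origin and the detector perpendicular to the z-axis. The function name, parameters, and all numeric values are hypothetical and are not taken from the study.

```python
import math

def backproject_pixel(u, v, cx, cy, pixel_mm, source_detector_mm):
    """Return (origin, unit direction) of the ray from the source through pixel (u, v).

    Assumes an idealized geometry: x-ray source at the origin, detector plane
    at z = source_detector_mm, principal point at pixel (cx, cy).
    """
    x = (u - cx) * pixel_mm
    y = (v - cy) * pixel_mm
    z = source_detector_mm
    norm = math.sqrt(x * x + y * y + z * z)
    return (0.0, 0.0, 0.0), (x / norm, y / norm, z / norm)

# Hypothetical annotation: 300 pixels to the right of the image center.
origin, direction = backproject_pixel(
    u=812, v=512, cx=512, cy=512, pixel_mm=0.5, source_detector_mm=200.0
)
```

A line drawn on the image back-projects in the same way to a plane, spanned by the rays through its two endpoints.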

To assess the accuracy and precision of the virtual 3D lines, they constructed a phantom with metal beads attached to several different targeted areas, acquired fluoroscopy images of the phantom, and annotated the images. They found an average difference of 9.84 mm between the lines drawn on the fluoroscopy images and the virtual 3D lines in relation to the targeted areas.
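One common way to quantify how far a 3D line misses a target is the perpendicular distance from the target point to the line. The article does not spell out the exact metric the researchers used, so the following is only an illustrative sketch with a hypothetical function name and made-up coordinates.

```python
import math

def point_to_line_distance(point, origin, direction):
    """Perpendicular distance from `point` to the line origin + t * direction."""
    # Vector from a point on the line to the target.
    w = [point[i] - origin[i] for i in range(3)]
    # The cross product magnitude equals |w| * |direction| * sin(angle).
    cross = [
        w[1] * direction[2] - w[2] * direction[1],
        w[2] * direction[0] - w[0] * direction[2],
        w[0] * direction[1] - w[1] * direction[0],
    ]
    return math.hypot(*cross) / math.hypot(*direction)

# Hypothetical bead position (mm) and a virtual line along the z-axis.
bead = (3.0, 4.0, 10.0)
distance_mm = point_to_line_distance(bead, (0.0, 0.0, 0.0), (0.0, 0.0, 2.0))
```

Dividing by the length of the direction vector means the line does not need to be given as a unit vector.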

Integration with surgical workflow

Finally, the researchers evaluated the effectiveness of using their augmented reality technique for identifying the entry point in the implantation of surgical nails in patients with a femoral fracture. They built a semianthropomorphic femur phantom to resemble that of an obese patient and used the phantom in a surgical simulation.

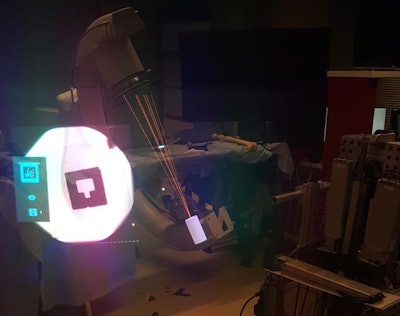

A fluoroscopy monitor contains x-ray images of a marker (left) that is surrounded by 3D virtual guidance lines on an operating table (center).

For the simulation, two orthopedic surgeons navigated a Kirschner wire, or K-wire, onto the target point, first using the conventional approach of looking at fluoroscopic images on a monitor and then with the assistance of virtual 3D lines visible with the HoloLens headset.

On average, entry point localization using the AR technique was 5.2 mm away from the target, compared with 4.6 mm away with the conventional method. However, the AR technique reduced procedure time by nearly 10% and required only 10 x-ray acquisitions, compared with 96 for the traditional method.
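To put the trade-off in relative terms, the reported figures can be turned into a percentage reduction in x-ray acquisitions and an absolute accuracy gap. This is simple arithmetic on the numbers quoted above; the variable names are illustrative.

```python
# Figures as reported in the article.
xrays_conventional = 96
xrays_ar = 10
error_conventional_mm = 4.6
error_ar_mm = 5.2

# Relative reduction in x-ray acquisitions with the AR technique.
xray_reduction_pct = 100.0 * (xrays_conventional - xrays_ar) / xrays_conventional

# How much farther from the target the AR entry point was, on average.
accuracy_gap_mm = error_ar_mm - error_conventional_mm

print(f"X-ray acquisitions reduced by {xray_reduction_pct:.1f}%")
print(f"AR entry point was {accuracy_gap_mm:.1f} mm farther from the target")
```

In other words, the AR technique used roughly 90% fewer x-ray acquisitions at the cost of about 0.6 mm in average entry-point accuracy.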

Despite its slightly lower accuracy, the AR technique can provide intuitive in situ visualization of medical images in 3D and directly at the surgical site, which can simplify hand-eye coordination demands for the orthopedic surgeon, Unberath said.

As it stands, the technique is best suited for general guidance during surgery, such as bringing surgical instruments closer to anatomical landmarks, he said. Its current accuracy would limit its clinical use for procedures demanding precise measurements.

"We have since worked on the next generation of camera-augmented C-arms that are equipped with an inside-out tracker and calibrated to the surgeon," he said. "This system allows for volume rendering of pre- or intraoperative 3D images on the patient and, at the same time, continuously tracks the C-arm position -- yielding a dynamic AR environment ideally suitable for achieving down-the-beam visualization of anatomy, e.g., for trocar placement in vertebroplasty."