A group in Seoul, South Korea, has developed an AI model that could help reduce radiology workloads by identifying “no changes” in follow-up chest x-rays of patients in critical care, according to a study published October 24 in Radiology.

In a test set of 533 pairs of patient x-rays (baseline and follow-up), the algorithm was highly accurate when interpreting those that showed no changes, wrote first authors Jihye Yun, PhD, and Yura Ahn, MD, of the University of Ulsan, and colleagues.

“Radiologists routinely compare the current and previous chest radiographs during interpretation to enhance the sensitivity for change detection and provide information for differential diagnosis. However, this results in a large workload that could delay the timely reporting of significant findings,” the group wrote.

Moreover, most AI models developed to interpret chest x-rays so far are restricted to an image at a single time point. Thus, in this study, the group aimed to validate a deep-learning algorithm using thoracic cage registration and subtraction to triage pairs of chest radiographs showing no change.

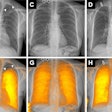

Example of triage of no change in a pair of chest radiographs in the emergency department. (A) Baseline posteroanterior chest radiograph in a 63-year-old female patient shows a small amount of left (L) pleural effusion and partial atelectasis of the right middle lobe. (B) Follow-up posteroanterior chest radiograph obtained in the same patient 1 day later shows no significant change. (C) Gradient-weighted class activation map shows the algorithm determined no change in the image pair, without relevant highlighting, as the highlighted area is located over the gastroesophageal junction. Image courtesy of Radiology.

The researchers included 3.3 million chest x-rays acquired from 329,036 patients at their hospital between 2011 and 2018 to train and validate the model. The test set included 533 pairs of x-rays from emergency department (ED) patients (265 with no changes and 268 with changes) and 600 pairs from intensive care unit (ICU) patients (310 with no changes and 290 with changes).

Two thoracic radiologists reviewed the x-rays to establish the ground truth, including normal or abnormal status, while the algorithm’s performance was evaluated using area under the receiver operating characteristic curve (AUC) analysis.

The algorithm achieved an AUC of 0.77 when identifying no changes versus changes in the validation dataset and AUCs of 0.8 in the ED and ICU test sets, according to the findings.
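For readers less familiar with the metric, an AUC can be read as the probability that a randomly chosen “no change” pair receives a higher score than a randomly chosen “change” pair. The sketch below computes it that way in plain Python. The function name, the labels, and the scores are all hypothetical toy values chosen for illustration; they are not data from the study, and this is not the authors' evaluation code.

```python
from typing import Sequence


def pairwise_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    labels: 1 for the positive class (here, hypothetically, "no change"),
            0 for the negative class ("change").
    scores: model scores, higher meaning more likely positive.
    Ties between a positive and a negative score count as half a win.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")

    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical toy data, NOT taken from the study.
toy_labels = [1, 1, 1, 0, 0, 0]
toy_scores = [0.9, 0.7, 0.4, 0.6, 0.3, 0.2]
toy_auc = pairwise_auc(toy_labels, toy_scores)
print(f"Toy AUC: {toy_auc:.2f}")
```

On this reading, an AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation of the two classes.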

In addition, at a triage threshold of 40% (that is, with the algorithm’s “confidence” cutoff set at 40%), the algorithm achieved a specificity of 88.4% (correct in 237 of 268 pairs) in the ED dataset and 90.0% (261 of 290 pairs) in the ICU set. With a 40% triage threshold for urgent findings (consolidation, pleural effusion, and pneumothorax), the model’s specificity was 78.6% to 100% in the ED images and 85.5% to 93.9% in the ICU images.
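The overall specificity figures can be checked directly from the reported counts. The short sketch below does that arithmetic. It assumes, based on the denominators matching the number of ED and ICU pairs with changes (268 and 290), that specificity here means the share of “change” pairs the algorithm correctly did not triage as “no change”. The function name is hypothetical.

```python
def specificity(correct_change_pairs: int, total_change_pairs: int) -> float:
    """Share of pairs with changes that were correctly NOT triaged as 'no change'.

    Assumption: 'change' pairs are the negative class, so specificity is
    correctly handled change pairs divided by all change pairs.
    """
    if total_change_pairs <= 0:
        raise ValueError("total_change_pairs must be positive")
    if not 0 <= correct_change_pairs <= total_change_pairs:
        raise ValueError("correct_change_pairs must be between 0 and the total")
    return correct_change_pairs / total_change_pairs


# Counts as reported in the article for the 40% triage threshold.
ed_specificity = specificity(237, 268)
icu_specificity = specificity(261, 290)

print(f"ED specificity:  {ed_specificity:.1%}")
print(f"ICU specificity: {icu_specificity:.1%}")
```

Both results round to the percentages quoted above, 88.4% for the ED set and 90.0% for the ICU set.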

“The deep-learning algorithm could triage pairs of chest radiographs showing no change while detecting urgent interval changes during longitudinal follow-up,” the authors wrote.

However, additional validation and refinement are required, they added.

In an accompanying editorial, Julianna Czum, MD, of Johns Hopkins University in Baltimore, MD, echoed the need for additional studies and noted that AUCs of 0.7 to 0.8 are considered to indicate good performance in machine learning.

“But excellent performance, which is what is expected of radiologists, and perfect performance, which is what radiologists strive for, were not achieved by the model,” she wrote.

Nonetheless, AI models that can help reduce work volumes may be important for burnout prevention, Czum wrote.

“So, do we just keep pressing forward with the status quo of trying to interpret ever more images with the tools we have, or do we turn our hopes to AI, which has its own controversies? That is, do we want change or no change?” she concluded.
