Lung tumors are notoriously difficult to treat effectively with radiotherapy -- that is, with treatment that minimizes harm to surrounding healthy tissue -- particularly in an age when image-guided radiation therapy systems can "lock on" to targets and deliver a precise dose only when the target is correctly positioned.

Motion is the biggest problem. Breathing can cause a tumor to move between 1 and 3 cm, making the painstakingly precise pretreatment plan less precise during beam-on time. And lung tumors are essentially indistinguishable from normal lung tissue on real-time images obtained with fluoroscopic imaging.

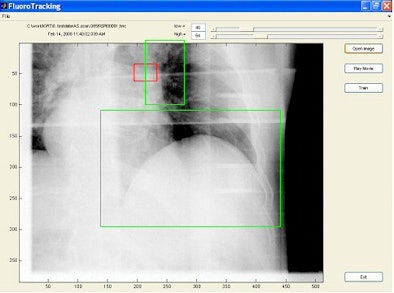

Working from the premise that other, more visible anatomic features move in concert with the tumors, researchers at the University of California, San Diego (UCSD) have developed a computer algorithm that uses these visible features as tumor stand-ins. In a feasibility study, the method localized the tumor position with mean errors of less than 1 mm for most patients and under 2.5 mm in the worst case, according to Tong Lin, Ph.D., a researcher with the department of radiation oncology at UCSD, who presented the findings at the recent American Association of Physicists in Medicine (AAPM) meeting.

The researchers retrospectively analyzed patient-specific motion in 12 individuals. Adding to the variability, Lin observed that "as the exhale occurs we get less and less similar images; during inhale they come closer together." For each patient, 15 seconds of fluoroscopic images were taken before treatment. Anatomic features that expert observers believed correlated with tumor motion were manually selected and marked in the first image frame.

The researchers obtained correlations between the tumor position and the anatomic regions of interest using an algorithm based on principal component analysis and regression analysis.
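As a rough illustration of how principal component analysis and regression can be chained, the following Python sketch reduces a set of surrogate measurements to a few principal components and then fits a least-squares line from those components to tumor position. It is not the UCSD team's implementation: the function names, the synthetic breathing signal, the 10-frames-per-second rate, the 20 surrogate features, and the choice of two components are all assumptions made for the example.

```python
import numpy as np


def fit_pca_regression(features, tumor_pos, n_components=2):
    """Fit PCA on surrogate features, then least-squares regression to tumor position.

    features:  (n_frames, n_features) array of surrogate-region measurements
    tumor_pos: (n_frames,) array of known tumor positions in mm (training only)
    """
    mean = features.mean(axis=0)
    centered = features - mean
    # Rows of vt are the principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    scores = centered @ components.T
    # Linear regression with an intercept column.
    design = np.column_stack([scores, np.ones(len(scores))])
    coef, *_ = np.linalg.lstsq(design, tumor_pos, rcond=None)
    return mean, components, coef


def predict_tumor_position(model, features):
    """Project new surrogate measurements onto the components and apply the fit."""
    mean, components, coef = model
    scores = (features - mean) @ components.T
    design = np.column_stack([scores, np.ones(len(scores))])
    return design @ coef


def synthetic_demo(seed=0):
    """Hypothetical data: 15 s at an assumed 10 frames/s, 4 s breathing period."""
    rng = np.random.default_rng(seed)
    t = np.linspace(0.0, 15.0, 150)
    breathing = np.sin(2.0 * np.pi * t / 4.0)
    tumor = 8.0 * breathing  # assumed tumor displacement in mm
    weights = rng.uniform(0.5, 1.5, size=20)  # 20 made-up surrogate features
    features = np.outer(breathing, weights) + 0.05 * rng.standard_normal((150, 20))
    # Train on the first 100 frames, evaluate on the remaining 50.
    model = fit_pca_regression(features[:100], tumor[:100])
    predicted = predict_tumor_position(model, features[100:])
    return float(np.mean(np.abs(predicted - tumor[100:])))


print(f"mean localization error on held-out frames: {synthetic_demo():.3f} mm")
```

The error printed here reflects only the synthetic data and says nothing about clinical accuracy.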

Four different regression methods -- first-degree and second-degree linear regression, artificial neural network (ANN), and support vector machine -- were analyzed for accuracy by comparing their predictions with the stand-in markers. Lin said ANN gave the best results, but mean localization errors were smaller than 1 mm for most patients and under 2.5 mm in the worst case with all four methods.
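A comparison of this kind can be sketched by fitting each model on some frames and scoring its mean localization error on held-out frames. The Python example below does this for the two polynomial models only, reading "one-degree" and "two-degree" as first- and second-degree polynomial fits, which is an assumption; the ANN and support vector machine are omitted. The single surrogate coordinate, the mildly curved surrogate-to-tumor relationship, and the noise level are invented for illustration.

```python
import numpy as np


def mean_localization_error(degree, x_train, y_train, x_test, y_test):
    """Fit a polynomial of the given degree and return mean absolute error in mm."""
    coeffs = np.polyfit(x_train, y_train, degree)
    predicted = np.polyval(coeffs, x_test)
    return float(np.mean(np.abs(predicted - y_test)))


def compare_degrees(seed=0):
    """Compare degree-1 and degree-2 fits on hypothetical surrogate/tumor data."""
    rng = np.random.default_rng(seed)
    t = np.linspace(0.0, 15.0, 150)  # assumed 15 s at 10 frames/s
    surrogate = 10.0 * np.sin(2.0 * np.pi * t / 4.0)  # surrogate position, mm
    # Assumed relationship: mostly linear with a small quadratic term plus noise.
    tumor = 0.8 * surrogate + 0.02 * surrogate**2 + 0.1 * rng.standard_normal(t.size)
    split = 100  # first 100 frames for fitting, the rest for evaluation
    return {
        degree: mean_localization_error(
            degree, surrogate[:split], tumor[:split], surrogate[split:], tumor[split:]
        )
        for degree in (1, 2)
    }


for degree, error in compare_degrees().items():
    print(f"degree {degree}: mean localization error {error:.3f} mm")
```

Because the synthetic relationship contains a quadratic term, the second-degree fit scores better here by construction; on real data the ranking would depend on how the surrogate and tumor actually move together.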

"The algorithm that we are developing will be able to automatically select surrogate anatomic features whose motions are correlated with tumor motion," commented senior author Steve Jiang, Ph.D., associate professor and director of research at UCSD's department of radiation oncology. "Thus, by tracking their motion we can derive the positions of the unseen tumors."

|

|

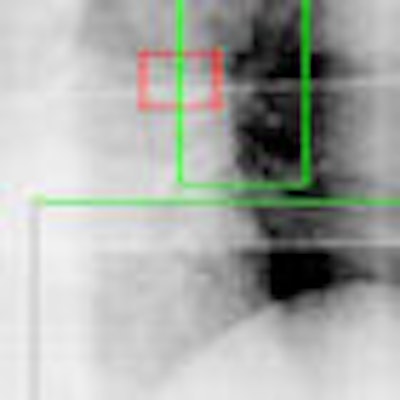

| Tumor is in red rectangular region and is invisible in the image. Two green regions are selected as surrogate windows, from which motion information is extracted using machine-learning techniques to derive the position of the invisible tumor. Image provided by Steve Jiang, Ph.D., University of California, San Diego. |

A separate team of researchers at Washington University in St. Louis is developing combined computer algorithms to follow tumor response over the course of lung tumor radiotherapy and determine the total dose delivered to tumors and surrounding tissues.

One algorithm maps tumor position and size over the course of treatment and another segments tumors from other lung tissue using CT images.

Issam El Naqa, Ph.D., an assistant professor of radiation oncology, and colleagues tested the combination algorithm in a study of four non-small cell lung cancer patients. In the preliminary analysis presented at the AAPM meeting, the estimated tumor volume reductions ranged from 3% to 46%, with a median of 8%, by midtreatment and from 26% to 51%, with a median of 34%, by the end of treatment.

By Kathlyn Stone

AuntMinnie.com contributing writer

August 8, 2008

Copyright © 2008 AuntMinnie.com