An artificial intelligence (AI) algorithm can characterize thyroid nodules on ultrasound with a high degree of accuracy, potentially helping to avoid unnecessary biopsies of indolent lesions, according to research published online in the Journal of Digital Imaging.

After fine-tuning an existing deep convolutional network, researchers from Canada found that their model had an accuracy rate of more than 95% for classifying thyroid nodules as malignant or benign. While more work is needed to improve the system, they believe it could ultimately be used to augment the work of radiologists and speed up interpretation times.

Incidental thyroid nodules

The widespread use of cross-sectional imaging has led to a significant rise in the incidental detection of thyroid nodules, according to Dr. Paul Babyn, head of the department of medical imaging at the University of Saskatchewan/Saskatoon Health Region.

"As more and more CTs and MRs are being done, and there is more detection of abnormalities within the thyroid, you need to employ a standardized approach to follow them up in the system and ensure you will not biopsy unnecessarily," Babyn said.

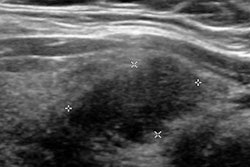

Ultrasound is typically used to follow up these incidentally detected nodules, most of which are benign or behave indolently. When reading these studies, radiologists are now encouraged to use the Thyroid Imaging Reporting and Data System (TI-RADS) to classify nodules by their likelihood of malignancy, using features that include hypoechogenicity, absence of a halo, microcalcifications, solidity, intranodular flow, and taller-than-wide shape, Babyn said. However, evaluating thyroid nodules with TI-RADS demands a substantial time investment from practicing radiologists.

"Like any system where the radiologist needs to spend more time and attention, it can be difficult to get their full participation," he said.

Deep learning

As a result, the researchers sought to apply a deep convolutional neural network to expedite the assessment of thyroid nodules using TI-RADS. They fine-tuned a GoogLeNet model that had been pretrained on data from the ImageNet database (JDI, July 11, 2017).

"The differences between those classes of objects [in the ImageNet database] are much more obvious compared to the subtleties in ultrasound images," Babyn said. "A deep-learning algorithm requires a lot of examples to train it. We trained the network so it can classify images in the dataset that we have, and we have some evidence that [the algorithm] will generalize."

The researchers used two datasets to familiarize the network with the range of thyroid nodules that are detected and to train it to categorize them by whether further action is needed, said senior author Mark Eramian, PhD, an associate professor in the department of computer science at the University of Saskatchewan. One was a publicly available database of 428 thyroid ultrasound images; the other was a Saskatoon health database of 164 thyroid ultrasound images. Separate image sets from the databases were used for training, validating, and testing the network.
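Keeping training, validation, and test images separate can be sketched as a seeded shuffle followed by a partition. The article does not give the study's split ratios or procedure, so the 70/15/15 default, the function name `split_dataset`, and the image ID strings below are all hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def split_dataset(
    image_ids: Sequence[str],
    val_frac: float = 0.15,
    test_frac: float = 0.15,
    seed: int = 0,
) -> Tuple[List[str], List[str], List[str]]:
    """Partition image IDs into disjoint training, validation, and test lists.

    The default fractions are illustrative assumptions, not the study's ratios.
    """
    if val_frac < 0 or test_frac < 0 or val_frac + test_frac >= 1:
        raise ValueError("fractions must be non-negative and sum to less than 1")
    ids = list(image_ids)
    random.Random(seed).shuffle(ids)  # seeded shuffle so the split is reproducible
    n_test = int(len(ids) * test_frac)
    n_val = int(len(ids) * val_frac)
    test = ids[:n_test]
    val = ids[n_test:n_test + n_val]
    train = ids[n_test + n_val:]  # everything left over is used for training
    return train, val, test


# Each database is split on its own, so no image appears in more than one set.
# The ID strings are hypothetical placeholders, not real file names.
public_ids = [f"public_{i:03d}" for i in range(428)]
saskatoon_ids = [f"saskatoon_{i:03d}" for i in range(164)]
public_split = split_dataset(public_ids)
saskatoon_split = split_dataset(saskatoon_ids)
```

If a database holds several images of the same nodule or patient, splitting by patient rather than by image would keep near-duplicate images out of the test set.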

In addition, the group applied preprocessing algorithms to enhance image quality and improve deep-learning results. A data augmentation scheme also was used in the training process. This fine-tuning process enabled the network to recognize images and change the features that it considers important to distinguish one class of images from another, Eramian said.
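The article does not say which preprocessing or augmentation methods the group used. The sketch below assumes two simple, common choices: min-max contrast stretching as the preprocessing step and left-right flipping as the augmentation. Images are plain nested lists of grayscale intensities, and the function names are hypothetical.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def min_max_normalize(img: Image) -> Image:
    """Linearly rescale intensities to [0, 1] (a simple contrast stretch)."""
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    if hi == lo:  # flat image: avoid division by zero
        return [[0.0 for _ in row] for row in img]
    return [[(p - lo) / (hi - lo) for p in row] for row in img]


def horizontal_flip(img: Image) -> Image:
    """Mirror the image left to right; height and width are unchanged."""
    return [list(reversed(row)) for row in img]


def preprocess_and_augment(img: Image) -> List[Image]:
    """Normalize once, then return the normalized image plus a flipped copy."""
    norm = min_max_normalize(img)
    return [norm, horizontal_flip(norm)]


samples = preprocess_and_augment([[10, 20], [30, 50]])
```

A left-right flip was chosen here because it keeps a nodule's height and width unchanged; a 90-degree rotation would not, and could turn a taller-than-wide nodule, one of the TI-RADS features described earlier, into a wider-than-tall one.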

The resulting model was highly accurate on testing cases from both datasets.

| Deep-learning model performance | Cases from publicly available database | Cases from Saskatoon health database |
|---|---|---|
| Accuracy | 99.1% | 96.3% |
| Sensitivity | 99.7% | 82.8% |
| Specificity | 95.8% | 99.3% |
| Area under the curve* | 0.997 | 0.992 |
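For readers less familiar with these measures, the sketch below shows how they are conventionally computed from a model's outputs, assuming malignant nodules are the positive class (label 1). The function names are hypothetical, and the toy labels and scores are made up for illustration; they are not the study's data.

```python
from typing import Dict, Sequence, Tuple


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[int, int, int, int]:
    """Return (true positives, true negatives, false positives, false negatives); 1 = malignant."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


def summary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one malignant and one benign case")
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),  # share of all cases classified correctly
        "sensitivity": tp / (tp + fn),  # share of malignant nodules called malignant
        "specificity": tn / (tn + fp),  # share of benign nodules called benign
    }


def auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """Area under the ROC curve in its pairwise (Mann-Whitney) form: the chance
    that a random malignant case scores higher than a random benign one,
    with ties counted as one half."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("need at least one malignant and one benign case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


print(summary_metrics([1, 1, 1, 0, 0, 0, 0, 0], [1, 1, 0, 0, 0, 0, 0, 1]))
# accuracy 0.75, sensitivity about 0.667, specificity 0.8
print(auc([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.1]))  # 0.75
```

Because accuracy equals sensitivity weighted by the share of malignant cases plus specificity weighted by the share of benign cases, it can stay high even when sensitivity is lower, as long as malignant cases are a minority of the test set.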

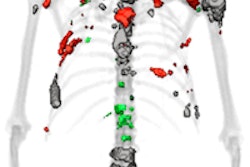

The researchers expect the deep neural network to play an adjunctive role in stratifying thyroid nodules by their risk of malignancy.

"We are hoping to obtain a similar grading in an automated fashion, so the radiologist could do their reading to ensure it is correct," Babyn said. "They do not have to fill it [radiology report] out de novo."

Room for improvement

The goal for the system is to potentially reduce patient harm and financial costs by decreasing the need for fine-needle biopsies, Eramian said.

"Our results clearly show promise, but the accuracy of the system needs to be improved a bit more, as well as the granularity of its predictions before it could be put into clinical practice," he said. "Right now, it can predict 'probably benign' and 'probably malignant.' I would like to get to the point where it can give recommendations such as 'definitely benign,' 'definitely suspicious,' and 'unsure.' "
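The difference between the current two-way output and the finer-grained output Eramian describes can be sketched as a choice of cutoffs on the network's estimated probability of malignancy. The cutoff values and function names below are hypothetical; in practice such cutoffs would have to be chosen and validated on held-out data, and the network's probabilities might first need calibration.

```python
def two_way_label(p_malignant: float, threshold: float = 0.5) -> str:
    """Current-style output: a single cutoff splits every nodule into two classes."""
    if not 0.0 <= p_malignant <= 1.0:
        raise ValueError("p_malignant must be a probability")
    return "probably malignant" if p_malignant >= threshold else "probably benign"


def three_way_label(p_malignant: float, low: float = 0.05, high: float = 0.95) -> str:
    """Finer-grained output: confident calls only at the extremes, 'unsure' in between.

    The default cutoffs are illustrative placeholders, not values from the study.
    """
    if not 0.0 <= p_malignant <= 1.0:
        raise ValueError("p_malignant must be a probability")
    if not 0.0 <= low < high <= 1.0:
        raise ValueError("need 0 <= low < high <= 1")
    if p_malignant <= low:
        return "definitely benign"
    if p_malignant >= high:
        return "definitely suspicious"
    return "unsure"


for p in (0.02, 0.40, 0.98):
    print(p, two_way_label(p), three_way_label(p))
```

The "unsure" band marks the cases where a radiologist's review would matter most; widening it means fewer confident automated calls but also fewer confident errors.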

Collecting more samples and more images with high-quality annotations would be a strategy to improve accuracy and granularity, Eramian said. A concerted effort to standardize image annotation is required to use deep neural networks on a more widespread basis, he said.

More annotated public datasets are needed, along with common data standards for annotations. For deep learning to succeed, hospitals have to get on board with those standards, he said.

"This will avoid expensively difficult and sometimes insurmountable obstacles that can occur when attempting to integrate metadata from different databases with different organizational schemes and disparate data contents from different institutions," Eramian said. "The metadata that are stored with the images needs to be standardized."