Approximately 90% of biopsies performed on thyroid nodules have benign findings. Deep learning may help many of these patients avoid unnecessary procedures, according to a research team from the Mayo Clinic in Rochester, MN, and Arizona State University (ASU) in Tempe, AZ.

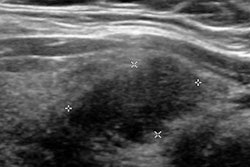

After training a deep-learning algorithm to differentiate thyroid nodules using texture measurements on B-mode ultrasound images, the researchers found that it could obviate the need for nearly half of these biopsies -- without sacrificing sensitivity.

"This preliminary study demonstrated that the number of [fine-needle aspiration] biopsies may be reduced by 45% without missing any patients with malignant thyroid nodules," said Zeynettin Akkus, PhD, from the Mayo Clinic. He presented the findings on behalf of co-author Zongwei Zhou of ASU at the recent Society for Imaging Informatics in Medicine's Conference on Machine Intelligence in Medical Imaging (C-MIMI 2017) in Baltimore.

Low malignancy rate

Zeynettin Akkus, PhD, from the Mayo Clinic.

"Therefore, there is a clinical need for a dramatic reduction of these fine-needle aspiration biopsies," Akkus said.

The Mayo Clinic and ASU research team performed a feasibility study to explore whether a deep-learning network based on ultrasound image features could differentiate benign from malignant nodules and reduce the number of FNA biopsies.

"There are some suspicious features on ultrasound that can kind of help [clinicians decide] to send a patient for a biopsy," he said. "And we are trying to predict this from the ultrasound images using deep learning."

Training the algorithm

The research team gathered 174 thyroid nodules that had exact matches between the ultrasound images and FNA biopsies. Of these 174 thyroid nodules, 119 were benign and 55 were malignant.

The dataset used to train the deep-learning algorithms consisted of 100 benign cases and 50 malignant cases; the remaining 19 benign and five malignant cases were set aside to serve as the testing dataset. Given the limited size of the training dataset, the researchers used data augmentation to artificially increase the number of cases available for training the algorithms. These augmentation methods -- including rotation, horizontal and vertical shifts, zooming, and vertical and horizontal flipping of the images -- expanded the size of the training dataset to 1,500 benign cases and 1,500 malignant cases, Akkus said.
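The augmentation step can be sketched roughly as follows. This is a minimal illustration, not the researchers' pipeline: the function names, the shift and zoom ranges, the restriction of rotation to multiples of 90 degrees, and the synthetic 32 x 32 patches are all assumptions. It also assumes the reported totals were reached by generating 15 copies of each benign image and 30 of each malignant image.

```python
import numpy as np

def zoom_center(image, factor, out_shape):
    """Crop the central 1/factor of the image, then resize (nearest neighbor)."""
    h, w = image.shape
    ch, cw = max(1, int(round(h / factor))), max(1, int(round(w / factor)))
    top, left = (h - ch) // 2, (w - cw) // 2
    crop = image[top:top + ch, left:left + cw]
    rows = np.arange(out_shape[0]) * ch // out_shape[0]
    cols = np.arange(out_shape[1]) * cw // out_shape[1]
    return crop[np.ix_(rows, cols)]

def augment(image, rng):
    """Return one randomly transformed copy of a 2D grayscale image."""
    # Rotation, limited here to multiples of 90 degrees (assumption).
    out = np.rot90(image, k=int(rng.integers(0, 4)))
    # Horizontal and vertical flips, each applied with probability 0.5.
    if rng.random() < 0.5:
        out = np.fliplr(out)
    if rng.random() < 0.5:
        out = np.flipud(out)
    # Shifts of up to 10% per axis (assumption); np.roll wraps at the edges.
    max_dy, max_dx = out.shape[0] // 10, out.shape[1] // 10
    dy = int(rng.integers(-max_dy, max_dy + 1))
    dx = int(rng.integers(-max_dx, max_dx + 1))
    out = np.roll(out, (dy, dx), axis=(0, 1))
    # Zoom in by up to 20% (assumption), resized back to the input shape.
    return zoom_center(out, rng.uniform(1.0, 1.2), image.shape)

def expand(images, target, rng):
    """Generate target // len(images) augmented copies of every image."""
    copies = target // len(images)
    return [augment(img, rng) for img in images for _ in range(copies)]

rng = np.random.default_rng(0)
# Synthetic stand-ins for the 100 benign and 50 malignant training images.
benign = [rng.random((32, 32)) for _ in range(100)]
malignant = [rng.random((32, 32)) for _ in range(50)]

aug_benign = expand(benign, 1500, rng)        # 15 copies per image
aug_malignant = expand(malignant, 1500, rng)  # 30 copies per image
print(len(aug_benign), len(aug_malignant))
```

Generating twice as many copies per malignant image is what balances the two classes in this sketch.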

For this project, the researchers used a deep-learning architecture based on residual networks (ResNets) that were adapted to meet their classification needs, he said. Fivefold cross-validation was used during the training stage for the networks.
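For readers unfamiliar with fivefold cross-validation, the sketch below shows one way the 150 training nodules could be divided into five folds, each serving once as the validation set. The stratified split (keeping the 2:1 benign-to-malignant ratio in every fold) and the function name are assumptions; the presentation did not describe how the folds were built.

```python
import numpy as np

def stratified_folds(labels, n_folds=5, seed=0):
    """Split sample indices into n_folds folds with similar class balance."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    folds = [[] for _ in range(n_folds)]
    for cls in np.unique(labels):
        # Shuffle the indices of this class and deal them out across folds.
        idx = rng.permutation(np.flatnonzero(labels == cls))
        for i, chunk in enumerate(np.array_split(idx, n_folds)):
            folds[i].extend(chunk.tolist())
    return [np.array(sorted(f)) for f in folds]

# 0 = benign, 1 = malignant, matching the 100/50 training split.
labels = np.array([0] * 100 + [1] * 50)
folds = stratified_folds(labels)

splits = []
for k, val_idx in enumerate(folds):
    # Train on the other four folds, validate on fold k.
    train_idx = np.concatenate([f for j, f in enumerate(folds) if j != k])
    splits.append((train_idx, val_idx))
    print(f"fold {k}: train={len(train_idx)} val={len(val_idx)}")
```

In a setup like this, the folds would normally be formed from the original nodules before augmentation, so that augmented copies of one nodule do not appear on both sides of a split.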

Texture analysis

After trying a number of approaches, the team found that the best-performing algorithm was a ResNet that used a random-forest classifier trained on nodule texture analysis data known as Laws' texture energy measures. These measures can be computed from 2D ultrasound images to help determine whether a thyroid nodule is malignant, Akkus said.
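As a rough illustration of how such texture features are computed, the sketch below applies the standard Laws 5-tap kernels (level, edge, spot, ripple, wave) to a grayscale patch and summarizes each filter response as a mean local energy. The window size, the reflect padding, the choice to keep all 24 non-L5L5 masks, and the function names are assumptions, and the team's actual feature extraction may differ.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# Standard 1D Laws kernels: level, edge, spot, ripple, wave.
KERNELS = {
    "L5": np.array([1, 4, 6, 4, 1], dtype=float),
    "E5": np.array([-1, -2, 0, 2, 1], dtype=float),
    "S5": np.array([-1, 0, 2, 0, -1], dtype=float),
    "R5": np.array([1, -4, 6, -4, 1], dtype=float),
    "W5": np.array([-1, 2, 0, -2, 1], dtype=float),
}

def filter2d(image, mask):
    """Same-size 2D correlation of image with mask, using reflect padding."""
    ph, pw = mask.shape[0] // 2, mask.shape[1] // 2
    padded = np.pad(image, ((ph, ph), (pw, pw)), mode="reflect")
    windows = sliding_window_view(padded, mask.shape)
    return np.einsum("ijkl,kl->ij", windows, mask)

def laws_energy_features(image, window=15):
    """Return a dict of mean texture energy per 5x5 Laws mask."""
    image = np.asarray(image, dtype=float)
    box = np.full((window, window), 1.0 / window**2)
    # Subtract the local mean to reduce the effect of overall brightness.
    image = image - filter2d(image, box)
    features = {}
    for name_a, vec_a in KERNELS.items():
        for name_b, vec_b in KERNELS.items():
            if name_a == "L5" and name_b == "L5":
                continue  # L5L5 only measures brightness, not texture
            mask = np.outer(vec_a, vec_b)  # 5x5 mask from two 1D kernels
            response = filter2d(image, mask)
            # Texture energy: local average of the absolute response.
            energy = filter2d(np.abs(response), box)
            features[name_a + name_b] = float(energy.mean())
    return features

rng = np.random.default_rng(0)
patch = rng.random((32, 32))  # synthetic stand-in for a nodule patch
feats = laws_energy_features(patch)
print(len(feats), "texture energy features")
```

A feature vector of this kind, one per nodule, is the sort of input a random-forest classifier could be trained on.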

Operating at a 100% sensitivity rate for malignant nodules, the ResNet with the trained random-forest classifier could decrease the number of FNA biopsies performed on indeterminate thyroid nodules by 45%, according to the researchers. The receiver operating characteristic (ROC) analysis also found that the ResNet's optimal operating point would yield 82.3% sensitivity, 76.2% specificity, and an area under the curve of 0.72362.
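The idea of operating at 100% sensitivity can be made concrete with a small worked example: pick the highest score threshold that still flags every malignant nodule, then count how many nodules fall below it and could skip biopsy. The scores below are invented for illustration and are not the study's data, and the function name is hypothetical.

```python
import numpy as np

def operating_point_full_sensitivity(scores, labels):
    """Threshold and metrics when no malignant nodule may be missed."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=int)  # 1 = malignant, 0 = benign
    # Highest threshold that still flags every malignant nodule.
    threshold = scores[labels == 1].min()
    flagged = scores >= threshold
    sensitivity = flagged[labels == 1].mean()
    specificity = (~flagged)[labels == 0].mean()
    avoided = (~flagged).mean()  # share of all nodules spared a biopsy
    return threshold, sensitivity, specificity, avoided

# Invented malignancy scores: 10 benign nodules, then 4 malignant ones.
scores = [0.05, 0.10, 0.15, 0.20, 0.25, 0.30, 0.45, 0.50, 0.60, 0.70,
          0.40, 0.55, 0.80, 0.90]
labels = [0] * 10 + [1] * 4

threshold, sensitivity, specificity, avoided = operating_point_full_sensitivity(
    scores, labels
)
print(f"threshold={threshold:.2f} sensitivity={sensitivity:.2f} "
      f"specificity={specificity:.2f} avoided={avoided:.2f}")
```

In this toy case, six of the 14 nodules score below the lowest malignant score, so roughly 43% of biopsies would be avoided without missing a cancer. The article does not state whether the reported 45% is measured against all nodules or only benign ones, so the sketch returns both figures.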

Akkus said he anticipates that this performance could be improved further with the inclusion of more training data.

"So we are getting more data and hopefully we can increase the [size of the] dataset to thousands of images, and hopefully we are going to have better prediction power," he said. "In future work, we also expect to include Doppler images and possibly shear-wave elastography images. Then we will have multimodality information that could improve the prediction power of this classification."