Patient characteristics influenced false-positive results on digital breast tomosynthesis (DBT) when analyzed by an AI algorithm approved by the U.S. Food and Drug Administration (FDA), a study published May 21 in Radiology found.

Researchers led by Derek Nguyen from Duke University in Durham, NC, found that the algorithm was significantly more likely to assign false-positive case scores to Black patients and older patients, and less likely to assign them to Asian patients and younger patients, compared with white patients and women ages 51 to 60, respectively.

“Radiologists should exercise caution when using these AI algorithms, as their effectiveness may not be consistent across all patient groups,” Nguyen told AuntMinnie.com.

Radiology departments remain interested in integrating AI into their workflows, where the technology is used to help manage radiologists' workloads. However, the researchers pointed out a lack of data on how patient characteristics affect AI performance.

Nguyen and colleagues explored the impact of patient characteristics such as race and ethnicity, age, and breast density on the performance of an AI algorithm interpreting negative screening DBT exams performed between 2016 and 2019.

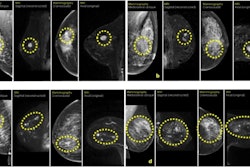

An example mammogram was assigned a false-positive case score of 96 in a 59-year-old Black patient with scattered fibroglandular breast density by an FDA-approved AI algorithm. (A) Left craniocaudal and (B) mediolateral oblique views demonstrate vascular calcifications in the upper outer quadrant at middle depth (box) that were singularly identified by the algorithm as a suspicious finding and assigned an individual lesion score of 90. This resulted in an overall case score assigned to the mammogram of 96. (Image: RSNA)

All exams had two years of follow-up without a diagnosis of atypia or breast malignancy, indicating that they were true-negative cases. The team also included a subset of unique patients that was randomly selected to provide a broad distribution of race and ethnicity.

The FDA-approved algorithm (ProFound AI 3.0, iCAD) generated case scores (malignancy certainty) and risk scores (one-year subsequent malignancy risk) for each mammogram.

The study included 4,855 women with a median age of 54 years. Of the total, 1,316 were white, 1,261 were Black, 1,351 were Asian, and 927 were Hispanic.

The algorithm was more likely to assign false-positive case and risk scores to Black women and less likely to assign false-positive case scores to Asian women, compared with white women. It was also more likely to assign false-positive case scores to older patients and less likely to do so for younger patients.

Likelihood of false-positive score assignment by AI algorithm

| Patient demographic | Suspicious case score: odds ratio | Suspicious case score: p-value | Suspicious risk score: odds ratio | Suspicious risk score: p-value |
|---|---|---|---|---|
| White | Reference | -- | Reference | -- |
| Black | 1.5 | <0.001 | 1.5 | 0.02 |
| Asian | 0.7 | 0.001 | 0.7 | 0.06 |
| Age 51-60 | Reference | -- | Reference | -- |
| Age 41-50 | 0.6 | <0.001 | 0.2 | <0.001 |
| Age 61-70 | 1.1 | 0.51 | 3.5 | <0.001 |
| Age 71-80 | 1.9 | <0.001 | 7.9 | <0.001 |

Additionally, women with extremely dense breasts were more likely to be assigned false-positive risk scores compared with women with fatty breasts (odds ratio [OR], 2.8; p = 0.008). The same trend held for women with scattered areas of fibroglandular density (OR, 2.0; p = 0.01) and women with heterogeneously dense breasts (OR, 2.0; p = 0.05).

The team also reported no significant differences in either risk or case scores between Hispanic women and white women.

The study authors called for the FDA to provide clear guidance on the demographic characteristics of patient samples used to develop algorithms, and for vendors to be transparent about the algorithm development process. They also called for diverse data sets to be used in training future AI algorithms.

Nguyen said that radiology departments should thoroughly investigate the patient population datasets on which the AI algorithms were trained.

“Ensuring that the distribution of the training dataset closely matches the demographics of the patient population they serve can help optimize the accuracy and utility of the AI in clinical workflows,” he told AuntMinnie.com.
