Radiologists who are confident in their mammography exam interpretations tend to be more accurate, according to a new study published in the July issue of the American Journal of Roentgenology.

In a study involving 119 community radiologists, those who rated their global interpretive ability as above average or as expert had a higher positive predictive value (PPV) for cancer in a set of 109 screening mammograms, compared with radiologists who rated their ability as average or below average. This suggests that radiologists' perceptions of their performance match their ability to recall patients for workup based on screening mammograms, according to a team led by Dr. Berta Geller of the University of Vermont.

"Among high-performing individuals, such as competitive athletes, performance is often linked to self-efficacy, which is a generalized belief in one's ability to succeed in a particular situation," the group wrote. "Our study shows that both global self-efficacy and confidence related to a radiologist's ability to correctly interpret an individual patient's mammographic examination are associated with increased PPV."

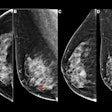

Even though screening mammography is currently the best way to detect early breast cancer, there's unexplained variability in radiologists' interpretation performance, according to Geller and colleagues (AJR, July 2012, Vol. 199:1, pp. W134-W141).

Previous studies on this issue have focused on radiologists' reading volume, years of experience, fellowship training, and even enjoyment of interpreting mammograms. But there has been no specific research that explores how radiologists' confidence when interpreting mammography contributes to reader performance, according to the researchers.

The study included 119 community radiologists who interpreted 109 screening mammography exams in test sets. The radiologists rated their confidence in their assessment for each case. Cases were chosen from analog screening mammography exams taken between 2000 and 2003 of women ages 40 to 69.

The overall cancer rate of the test sets was higher than that found in a general screened population. A panel of three expert radiologists reviewed and agreed on all the cases to be recalled. Geller's group used log-linear regression to examine the effect of self-reported ability and examination-level confidence on test-set PPV and negative predictive value (NPV).
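For readers less familiar with the two measures, here is a minimal sketch of how PPV and NPV can be computed from one reader's recall decisions. It is not the authors' code. The function name `ppv_npv` and the eight-case example are hypothetical, made up for illustration and not taken from the study.

```python
from typing import Sequence, Tuple


def ppv_npv(recalled: Sequence[bool], has_cancer: Sequence[bool]) -> Tuple[float, float]:
    """Return (PPV, NPV) for one reader's recall decisions.

    PPV = recalled cases with cancer / all recalled cases.
    NPV = non-recalled cases without cancer / all non-recalled cases.
    """
    if len(recalled) != len(has_cancer):
        raise ValueError("recalled and has_cancer must have the same length")

    pairs = list(zip(recalled, has_cancer))
    true_pos = sum(1 for r, c in pairs if r and c)
    false_pos = sum(1 for r, c in pairs if r and not c)
    true_neg = sum(1 for r, c in pairs if not r and not c)
    false_neg = sum(1 for r, c in pairs if not r and c)

    # Guard against a reader who recalls every case or no case at all.
    ppv = true_pos / (true_pos + false_pos) if (true_pos + false_pos) else float("nan")
    npv = true_neg / (true_neg + false_neg) if (true_neg + false_neg) else float("nan")
    return ppv, npv


# Hypothetical eight-case example (not study data).
recalled = [True, True, True, False, False, False, False, False]
has_cancer = [True, False, True, False, False, True, False, False]
ppv, npv = ppv_npv(recalled, has_cancer)
print(f"PPV = {ppv:.2f}, NPV = {npv:.2f}")
```

In this made-up example, two of the three recalled cases are cancers and four of the five non-recalled cases are cancer-free. The same function could score a reader against the expert panel's recall decision by passing the panel's decisions in place of `has_cancer`.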

The study radiologists answered demographic and clinical practice survey questions, which included questions on their ability to perceive and identify important mammographic findings. For each test-set case, radiologists indicated whether or not they would recall the patient. The radiologists also rated their level of confidence in their recall/no-recall assessment, choosing "not confident," "not very confident," "neutral," "confident," or "very confident."

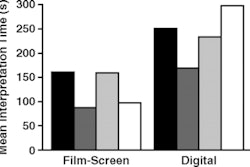

Study radiologists' self-rating of their interpretation ability varied by test set. A higher proportion of radiologists rated their ability as "average" for test sets 1 and 2 (which had a lower prevalence of cancer, 15 of 109 cases), as "above average" for test sets 3 and 4 (which had a higher prevalence, 30 of 109 cases), and as "expert" for test sets 1 and 4.

Those radiologists who expressed higher interpretive ability tended to read more mammograms per week, were more likely to specialize in their radiology work, and were more likely to have completed a fellowship in breast or women's imaging.

The mean PPV relative to cancer differed across groups: Radiologists reporting above-average ability had a PPV 1.06 times that of the average-ability group, and those reporting expert ability had a PPV 1.08 times that of the average-ability group, Geller's team found. These groups did not differ much in their mean PPVs relative to the expert panel's recall decision or in their mean NPVs relative to either self-reported interpreting ability or expert recall.
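The relative figures can be read as ratios of mean PPVs, which is how a group effect in a log-linear model is usually expressed (the exponentiated coefficient). The short sketch below shows the arithmetic only. The helper `relative_ppv` and the PPV values are hypothetical and chosen purely for illustration, since the article does not give the groups' absolute PPVs.

```python
import math


def relative_ppv(group_ppv: float, reference_ppv: float) -> float:
    """Ratio of a group's mean PPV to the reference group's mean PPV."""
    if reference_ppv <= 0:
        raise ValueError("reference_ppv must be positive")
    return group_ppv / reference_ppv


# Hypothetical mean PPVs (NOT from the study), picked to give a 1.06 ratio.
average_group_ppv = 0.50
above_average_group_ppv = 0.53

ratio = relative_ppv(above_average_group_ppv, average_group_ppv)

# In a log-linear model, log(PPV) = b0 + b1 * group, so exp(b1) is this ratio.
b1 = math.log(above_average_group_ppv) - math.log(average_group_ppv)

print(f"relative PPV = {ratio:.2f}")
print(f"exp(b1)      = {math.exp(b1):.2f}")
```

A ratio of 1.06 therefore describes a PPV about 6% higher than the reference group's in relative terms, not an absolute gain of 6 percentage points.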

The study also found that radiologists' confidence in their recall assessments tended to match the expert radiologist panel's recall decision: Confidence was higher for both cancer and noncancer cases that the expert panel determined should be recalled, and lower for cases for which the panel determined that recall was unnecessary.

Geller's group also found that the relationship between confidence and the accuracy of a negative assessment depended on whether radiologists interpreted 100 or more mammograms per week or fewer than 100.

Is performance connected to confidence? It seems so, the authors concluded.