Making use of a false-color enhancement technique that converts black-and-white shadows on mammograms into color, a deep-learning algorithm can achieve accuracy similar to an expert radiologist's for detecting breast cancer, according to research published online June 27 in the Journal of Digital Imaging.

A team from artificial intelligence (AI) software developer Zebra Medical Vision applied deep convolutional neural networks (CNNs) to digital mammograms that had been processed first with contrast-limited adaptive histogram equalization (CLAHE) and then with false-color enhancement to optimize the images for deep learning. In testing, the algorithm yielded 91% sensitivity and 80% specificity -- as good a performance as any published results for radiologists working with or without computer-aided detection (CAD) software, said senior author and Zebra Chief Medical Officer Dr. Eldad Elnekave.

Dr. Eldad Elnekave, chief medical officer at Zebra Medical Vision.

The developers believe the algorithm will have the most impact in areas of the world that do not have enough mammographers to support breast cancer screening.

"Most of the world doesn't implement routine breast cancer screening, and the barrier isn't hardware or physical access: It's the immense human resource required to provide value once a mammogram has been produced," Elnekave told AuntMinnie.com. "What we have aimed to do is consolidate an immense volume of radiologic knowledge into a piece of code that can be applied to digital mammograms anywhere, potentially bringing the barrier down. A single mammographer can review more films with greater confidence and learn continually in the process."

An elusive skill

Breast cancer was one of the first clinical targets for Zebra outside of the company's initial focus on population health applications such as chronic lung disease, cardiovascular disease, and osteoporosis, Elnekave said. The project to apply deep learning for breast cancer diagnosis was led by Zebra's Phil Teare, who served as first author and lead machine-learning researcher (JDI, June 27, 2017).

Although digital mammography is the only imaging modality shown to reduce mortality with a screening program, it continues to suffer from variable sensitivity and specificity -- even with the use of traditional CAD software, according to the researchers. Mammographic skill is elusive and unique, Elnekave noted.

"Its mastery has little to do with elucidating anatomical labyrinths or memorizing differential diagnoses," Elnekave said. "Experienced mammographers know a diagnosis because they've seen it thousands of times in various forms. We were confident that, given enough examples, a deep CNN would be successful in detecting the various features of breast cancer in a manner similar to how mammographers do."

Adding color

After testing a number of image enhancement methods, the Zebra team found it could optimize the representation of mammographic features for deep learning by applying "false color" to mammography images that had been processed with CLAHE. The technique converts areas of black-and-white density on an image into a spectrum of colors on the red, green, and blue (RGB) scale.
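The two preprocessing steps can be sketched in plain Python. This is a minimal illustration under stated assumptions, not Zebra's implementation: the article does not say which color map or CLAHE settings the team used, so the jet-like color ramp, the `clip_limit` value, and the helper names (`clip_limited_equalize`, `false_color`, `enhance`) are hypothetical. For brevity, the equalization treats the whole image as a single tile; full CLAHE repeats the clip-and-equalize step on a grid of tiles and interpolates between neighboring tiles. Libraries such as OpenCV offer both steps ready-made (`cv2.createCLAHE` and `cv2.applyColorMap`).

```python
def clip_limited_equalize(image, clip_limit):
    """Contrast-limited histogram equalization over the whole image.

    Simplification (assumption): real CLAHE works per tile and blends
    neighboring tiles; here the entire image is treated as one tile.
    `image` is a non-empty list of rows of 8-bit ints (0-255);
    `clip_limit` is a maximum count per histogram bin.
    """
    pixels = [v for row in image for v in row]
    total = len(pixels)
    hist = [0] * 256
    for v in pixels:
        hist[v] += 1
    # Clip tall bins so dominant intensities cannot over-stretch contrast,
    # then spread the clipped excess evenly across all 256 bins.
    excess = sum(max(0, h - clip_limit) for h in hist)
    hist = [min(h, clip_limit) for h in hist]
    share, remainder = divmod(excess, 256)
    hist = [h + share + (1 if i < remainder else 0) for i, h in enumerate(hist)]
    # Map each intensity through the normalized cumulative histogram.
    lut, running = [], 0
    for h in hist:
        running += h
        lut.append(round(255 * running / total))
    return [[lut[v] for v in row] for row in image]


def false_color(v):
    """Map an 8-bit intensity to (r, g, b) with a jet-like ramp (hypothetical
    choice): dark blue -> cyan -> yellow -> dark red as intensity rises."""
    x = v / 255

    def ramp(center):
        return round(255 * min(1.0, max(0.0, 1.5 - abs(4 * x - center))))

    return (ramp(3), ramp(2), ramp(1))


def enhance(image, clip_limit=40):
    """Equalize contrast first, then convert every pixel to an RGB triple."""
    return [[false_color(v) for v in row]
            for row in clip_limited_equalize(image, clip_limit)]


if __name__ == "__main__":
    toy_mammogram = [[10, 12, 200], [11, 13, 220], [10, 90, 240]]
    for row in enhance(toy_mammogram, clip_limit=2):
        print(row)
```

Equalizing before coloring matters in this ordering: the color ramp spends its hues across the full 0-255 range, so stretching the intensity distribution first lets subtle density differences land on visibly different colors.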

Next, these processed images are input into two independent CNN algorithms: One CNN focuses on a lower-resolution image of the whole breast, while the second receives smaller patches of the same image. Both CNNs were based on the Google Inception V3 model and were pretrained on data from the ImageNet database. Training and testing were performed on datasets of mammography images from the Digital Database for Screening Mammography and the proprietary Zebra Mammography Dataset of 1,739 full-sized mammograms.
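The split between a coarse whole-breast view and full-resolution patches might be prepared roughly as follows. The downsampling factor, patch size, and stride are hypothetical parameters that the article does not report, and the sketch works on a single grayscale channel for simplicity, whereas the pipeline described above feeds false-colored RGB images. In practice, each output would also be resized to the network's input size (Inception V3 is commonly used at 299×299 pixels).

```python
def downsample(image, factor):
    """Average-pool a 2D image by an integer factor (coarse whole-breast view).

    Trailing rows/columns that do not fill a whole block are dropped.
    """
    h, w = len(image) // factor, len(image[0]) // factor
    return [
        [
            sum(image[r * factor + i][c * factor + j]
                for i in range(factor) for j in range(factor)) / factor ** 2
            for c in range(w)
        ]
        for r in range(h)
    ]


def extract_patches(image, size, stride):
    """Yield (top, left, patch) for every size x size window, stepping by stride."""
    for top in range(0, len(image) - size + 1, stride):
        for left in range(0, len(image[0]) - size + 1, stride):
            patch = [row[left:left + size] for row in image[top:top + size]]
            yield top, left, patch


def two_branch_inputs(image, factor, patch_size, stride):
    """Hypothetical helper returning both inputs: one low-resolution view of
    the whole image and a list of full-resolution patches from the same image."""
    whole = downsample(image, factor)
    patches = list(extract_patches(image, patch_size, stride))
    return whole, patches
```

Keeping each patch's `(top, left)` position is a design choice that would let a patch-level score be drawn back onto the image as an overlay, like the one in the figure below.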

Output from both CNNs is entered into a random-forest classifier, which combines both the whole-image and patch-based assessments into a final prediction of "suspicious" or "nonsuspicious" for malignancy.
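A toy version of the fusion step is sketched below. The article says only that a random-forest classifier combines the two CNN outputs; how the per-patch scores are summarized is not described, so the four features in `fuse_features` are an assumption, as are all function names. To stay short and dependency-free, each tree in this forest is a single split (a decision stump) trained on a bootstrap sample with a random subset of features; a production system would more likely use a library implementation such as scikit-learn's `RandomForestClassifier` with deeper trees.

```python
import random
from statistics import mean


def fuse_features(whole_score, patch_scores, threshold=0.5):
    """Summarize both CNN outputs as one fixed-length vector.
    The choice of summaries here is hypothetical."""
    return [
        whole_score,                      # whole-image CNN probability
        max(patch_scores),                # most suspicious patch
        mean(patch_scores),               # average patch probability
        sum(s >= threshold for s in patch_scores) / len(patch_scores),
    ]


def _gini(labels):
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)


def _majority(labels, fallback):
    if not labels:
        return fallback
    return int(2 * sum(labels) >= len(labels))


def _fit_stump(X, y, feature_ids):
    """Pick the split (feature, threshold) with the lowest weighted Gini impurity."""
    fallback = _majority(y, 0)
    best = None
    for f in feature_ids:
        for t in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            impurity = (len(left) * _gini(left) + len(right) * _gini(right)) / len(y)
            if best is None or impurity < best[0]:
                best = (impurity, f, t,
                        _majority(left, fallback), _majority(right, fallback))
    return best[1:]


def fit_forest(X, y, n_trees=25, max_features=2, seed=0):
    """Bagged stumps: each tree sees a bootstrap sample and a random feature subset."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]
        features = rng.sample(range(len(X[0])), max_features)
        forest.append(_fit_stump([X[i] for i in idx], [y[i] for i in idx], features))
    return forest


def predict_proba(forest, x):
    """Fraction of trees voting 'suspicious' (label 1)."""
    votes = [left if x[f] <= t else right for f, t, left, right in forest]
    return sum(votes) / len(votes)
```

A case would then be labeled "suspicious" when `predict_proba(forest, fuse_features(whole_score, patch_scores))` reaches 0.5, or whatever operating threshold a validation set supports.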

"This approach is intuitively aligned with how radiologists assess mammograms: first globally and then 'zooming in' to analyze discrete regions," the authors wrote. "Indeed, some features of malignancy, such as regional architectural distortion or asymmetry, are best revealed on the image level; whereas others, such as microcalcifications or masses, are best seen in magnification."

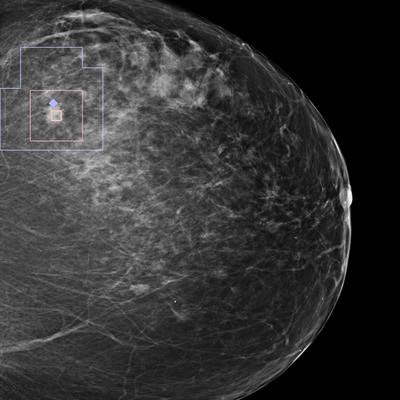

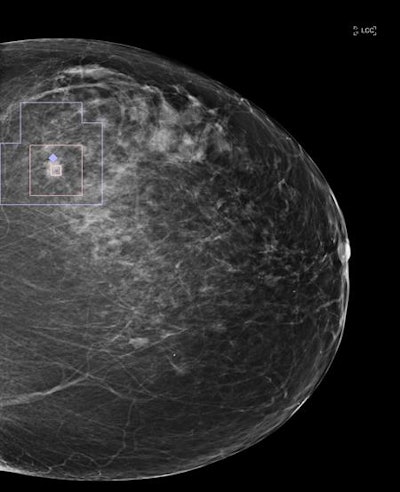

Single mammography view with an automatic overlay indicating a region containing a finding with a high likelihood of malignancy. Image courtesy of Zebra Medical Vision.

High accuracy

In testing, the algorithm achieved 91% sensitivity, 80% specificity, and an area under the curve (AUC) of 0.922 for diagnosing malignancy. The AUC value is superior to those reported in the literature for expert interpretations of digital mammography exams and comparable to those reported for digital breast tomosynthesis, according to the researchers.
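The three reported figures relate to each other as sketched here: sensitivity and specificity are measured at one chosen score threshold, while AUC summarizes how well the scores rank malignant above benign cases across all thresholds. The function names and toy scores below are illustrative only; they are not the study's data, and the article does not state which threshold produced the 91%/80% operating point.

```python
def sensitivity_specificity(labels, scores, threshold):
    """Call a case 'suspicious' when its score >= threshold (label 1 = malignant)."""
    tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= threshold)
    fn = sum(1 for y, s in zip(labels, scores) if y == 1 and s < threshold)
    tn = sum(1 for y, s in zip(labels, scores) if y == 0 and s < threshold)
    fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


def roc_auc(labels, scores):
    """AUC as the probability that a random malignant case outscores a random
    benign one, with ties counted as half (the Mann-Whitney formulation)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    labels = [1, 1, 1, 0, 0, 0]
    scores = [0.9, 0.8, 0.4, 0.5, 0.2, 0.1]
    print(sensitivity_specificity(labels, scores, 0.5))  # sensitivity 2/3, specificity 2/3
    print(roc_auc(labels, scores))                        # 8/9: one benign case outranks one malignant case
```

Moving the threshold trades sensitivity against specificity without changing the AUC, which is why the AUC is the figure the researchers compare against published reader studies.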

The team was initially surprised, however, by how well the whole-image CNN performed on its own, given that the malignancy was quite small in many cases, Elnekave said.

"Although this surprised us, the results resonated with some mammographers who told us they got a meaningful impression for an image at first glance before zooming in on details," he said.

The researchers were also surprised to discover that "coloring" the breast tissue improved the algorithm's performance, Elnekave said.

"It made sense because in the transfer learning model, we were leveraging a tremendous amount of machine learning performed on color images," he said.

The next step

Zebra is finalizing CE Mark approvals for the algorithm, and commercial availability is expected in the summer for the European Union and other countries outside of the U.S., Elnekave said. The company hopes to receive U.S. Food and Drug Administration (FDA) clearance for the algorithm in the second quarter of 2018.

In addition, Zebra plans to continue developing the algorithm and also apply it to digital breast tomosynthesis studies, Elnekave said.