The combination of an artificial intelligence (AI)-based computer-aided detection (CAD) algorithm with radiologist interpretation can detect more cases of breast cancer on screening mammograms than double reading by radiologists, according to research published online August 27 in JAMA Oncology.

Researchers from the Karolinska University Hospital in Stockholm, Sweden, retrospectively compared three commercially available AI models in a case-control study involving nearly 9,000 women who had undergone screening mammography. They found that one of the models demonstrated sufficient diagnostic performance to merit further prospective evaluation as an independent reader.

What's more, the highest sensitivity in the study, 88.6% at a specificity of 93%, was achieved by combining that algorithm's results with the first radiologist's interpretation.

"Combining the first readers with the best algorithm identified more cases positive for cancer than combining the first readers with second readers," wrote the authors, led by Dr. Mattie Salim. "No other examined combination of AI algorithms and radiologists surpassed this sensitivity level."

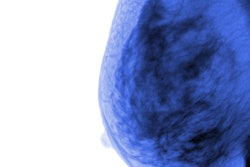

The researchers used a study sample of 8,805 women ages 40 to 74 who had received screening mammography at their academic hospital from 2008 to 2015 and who did not have implants or prior breast cancer. All exams were performed on a full-field digital mammography system from Hologic.

All of the women came from the public mammography screening program: 8,066 were a random sample of healthy controls, and 739 had been diagnosed with breast cancer. These 739 cancer cases comprised 618 screening-detected cancers and 121 clinically detected cancers. To mimic the 0.5% screening-detected cancer rate of the source screening cohort, the researchers used stratified bootstrapping to increase the simulated number of screenings to 113,663.
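As a rough illustration of how stratified bootstrapping can rebuild a screening-like population from a case-control sample, the Python sketch below resamples within each stratum independently. The article does not describe the exact resampling scheme, so the strata names, the placeholder exam IDs, and the choice to keep every cancer case once while resampling only the healthy controls (up to 113,663 − 739 = 112,924 draws) are all assumptions made for illustration.

```python
import random
from collections import Counter

def stratified_bootstrap(strata, targets, seed=0):
    """Build a simulated population from stratified exam lists.

    strata:  dict of stratum name -> list of exam IDs
    targets: dict of stratum name -> number of draws with replacement;
             strata missing from targets are kept unchanged (each exam once).
    Returns a list of (stratum, exam_id) pairs.
    """
    rng = random.Random(seed)
    population = []
    for name, exams in strata.items():
        if name in targets:
            drawn = rng.choices(exams, k=targets[name])  # sample with replacement
        else:
            drawn = list(exams)  # keep every exam exactly once
        population.extend((name, exam) for exam in drawn)
    return population

# Hypothetical strata sized like the study sample; IDs are placeholders.
strata = {
    "screen_detected": [f"sd{i}" for i in range(618)],
    "clinically_detected": [f"cd{i}" for i in range(121)],
    "healthy_control": [f"hc{i}" for i in range(8_066)],
}
# Assumption: only controls are resampled, up to 113,663 - 739 = 112,924 draws.
population = stratified_bootstrap(strata, {"healthy_control": 113_663 - 739})

counts = Counter(name for name, _ in population)
print(len(population))                                       # 113663
print(f"{counts['screen_detected'] / len(population):.2%}")  # 0.54%
```

Because each stratum is drawn separately, the cancer cases stay fixed while the control pool grows, so the screen-detected rate lands near the cited 0.5% (618 / 113,663 ≈ 0.54%).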

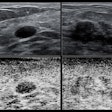

The researchers then applied AI CAD software from three different vendors, who asked to remain anonymous. None of the algorithms had been trained on the mammograms in the study.

After processing the images, the CAD software provided a prediction score for each breast, ranging from 0 (lowest suspicion) to 1 (highest suspicion). To compare the algorithms' results with the recorded radiologist decisions, the researchers chose for each algorithm an output cutpoint whose specificity matched that of the first-reader radiologists, 96.6%, as closely as possible.
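Choosing a cutpoint to match a target specificity amounts to reading a threshold off the score distribution of the cancer-free exams. The NumPy sketch below is a minimal illustration under stated assumptions: it uses one score per exam (for instance, the higher of the two breast scores, which is an assumption since the article only reports per-breast scores), treats a score at or above the threshold as positive, and runs on synthetic control scores rather than study data. The function name `cutpoint_for_specificity` is hypothetical.

```python
import numpy as np

def cutpoint_for_specificity(control_scores, target_specificity):
    """Return (threshold, specificity) for the cutpoint whose specificity on
    the control exams is as close as possible to target_specificity.

    An exam is called positive when its score is >= threshold, so the
    specificity at a threshold is the fraction of control scores below it.
    """
    scores = np.sort(np.asarray(control_scores, dtype=float))
    # Candidate thresholds: every distinct observed score, plus one above the max.
    candidates = np.append(np.unique(scores), np.inf)
    # Count of controls strictly below each candidate, as a fraction.
    specificity = np.searchsorted(scores, candidates, side="left") / scores.size
    best = np.argmin(np.abs(specificity - target_specificity))
    return candidates[best], specificity[best]

rng = np.random.default_rng(0)
control_scores = rng.beta(2, 8, size=10_000)  # synthetic, not study data
threshold, spec = cutpoint_for_specificity(control_scores, 0.966)
print(f"threshold {threshold:.3f}, specificity {spec:.1%}")
```

Fixing specificity this way lets each algorithm's sensitivity be compared with the radiologists' at the same false-positive burden.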

Breast cancer detection performance

| Reader | Area under the curve | Sensitivity | Specificity |
|---|---|---|---|
| First-reader radiologists | n/a | 77.4% | 96.6% |
| Second-reader radiologists | n/a | 80.1% | 97.2% |
| Double-reading consensus | n/a | 85% | 98.5% |
| AI algorithm #3 | 0.920 | 67.4% | 96.7% |
| AI algorithm #2 | 0.922 | 67% | 96.6% |
| AI algorithm #1 | 0.956 | 81.9% | 96.6% |
| AI algorithm #1 combined with first-reader radiologists | n/a | 88.6% | 93% |
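To show why pairing the algorithm with the first reader can raise sensitivity while lowering specificity, the sketch below computes both metrics for two binary readers and for a combination rule that calls an exam positive when either reader flags it. The article does not state how the algorithm and first reader were combined, so this "either" rule (the hypothetical `combine_either`) is an assumption, and the ten exams are toy data, not study results.

```python
import numpy as np

def sensitivity_specificity(flagged, has_cancer):
    """Sensitivity = flagged cancers / all cancers;
    specificity = unflagged controls / all controls."""
    flagged = np.asarray(flagged, dtype=bool)
    has_cancer = np.asarray(has_cancer, dtype=bool)
    sensitivity = flagged[has_cancer].mean()
    specificity = (~flagged[~has_cancer]).mean()
    return sensitivity, specificity

def combine_either(reader_a, reader_b):
    """Hypothetical 'either reader flags' rule: positive if A or B is positive."""
    return np.logical_or(reader_a, reader_b)

# Toy data: 4 cancers followed by 6 controls (not study data).
has_cancer = np.array([1, 1, 1, 1, 0, 0, 0, 0, 0, 0], dtype=bool)
radiologist = np.array([1, 1, 0, 0, 0, 0, 0, 0, 0, 1], dtype=bool)
ai = np.array([1, 0, 1, 0, 0, 0, 0, 0, 1, 0], dtype=bool)

for name, flags in [("radiologist", radiologist), ("AI", ai),
                    ("either", combine_either(radiologist, ai))]:
    sens, spec = sensitivity_specificity(flags, has_cancer)
    print(f"{name}: sensitivity {sens:.0%}, specificity {spec:.0%}")
# radiologist: sensitivity 50%, specificity 83%
# AI: sensitivity 50%, specificity 83%
# either: sensitivity 75%, specificity 67%
```

Cancers missed by one reader can be caught by the other, but each reader's false positives also accumulate, which is the same direction of trade-off seen in the last row of the table.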

The researchers noted that the sensitivity of AI algorithm #1 was significantly higher than that of the other two algorithms (p < 0.001) and that of the first-reader radiologists (p = 0.03).

In an accompanying commentary, Dr. Constance Lehman of Harvard Medical School in Boston wrote that it is now time to move beyond simulation and reader studies and enter the critical phase of rigorous, prospective clinical evaluation of AI.

"The need is great and a more rapid pace of research in this domain can be partnered with safe, careful, and effective testing in prospective clinical trials," she wrote. "If AI models can sort women with cancer detected on their mammograms from those without cancer detected on their mammograms, the value of screening mammography can be made available and affordable to a large population of women globally who currently have no access to the life-saving potential of quality screening mammography."