Virtual reality (VR) and augmented reality (AR) technologies can help radiologists perceive 3D image data more intuitively and show promise for significantly enhancing both diagnostic and interventional radiology, according to a June 29 webinar sponsored by the Society for Imaging Informatics in Medicine (SIIM).

Although current devices have a number of important limitations, VR and AR are poised to have a major impact in the areas of diagnostic imaging, procedure training, image-guided intervention, and interdisciplinary collaboration, according to presenters from the University of Maryland School of Medicine.

"I think it's going to be a major game-changer for interventional radiology and for surgery; I believe it's going to be commonplace that it's ... used, particularly interventionally," said Dr. Eliot Siegel. "As far as diagnostic applications, if we're able to get the resolution and the comfort level significantly higher, I'm absolutely convinced that this is going to change the way we end up practicing."

Technology breakthroughs

VR and AR technologies have become widely available recently due to breakthroughs in optical head-mounted display devices, said Dr. Alaa Beydoun, a resident at the University of Maryland. He noted that virtual reality completely replaces one's environment and is highly immersive, while augmented reality supplements one's environment and allows users to see through the device.

Oculus Rift, HTC Vive, and Samsung Gear VR are all examples of VR technology. If you've seen the Pokémon Go app, you have experienced space-agnostic AR, which superimposes virtual objects on the environment without giving them any spatial relationship to it. Google Glass and smartphones -- when not using computer vision technology -- are examples of space-agnostic AR devices, Beydoun said.

Another type of AR -- space-cognizant AR -- superimposes virtual objects on the surroundings; the virtual objects have spatial relationships with real objects in 3D space. Examples of space-cognizant AR devices include Microsoft HoloLens, Magic Leap, and ODG R9, Beydoun said.

The initial version of Google Glass was commercially available for the consumer market from February 2013 to January 2015 and featured voice input and a touch interface. Google Glass is still available for research and professional applications, and it has been used in healthcare for procedure and patient encounter recording, electronic medical record notification display, and telemedicine, Beydoun said.

Another device, Microsoft HoloLens, has been available since March 2016 and is priced at $3,000, rising to $5,000 with the inclusion of a commercial software suite. Healthcare applications for HoloLens include telemedicine, medical education, telemonitoring, and intuitive image fusion, Beydoun said.

Imaging implications

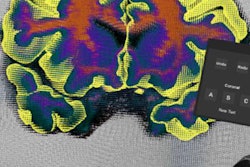

VR and AR could have a number of applications in diagnostic imaging, including the potential for supplementing traditional displays, said resident Dr. Vikash Gupta. AR displays could be created to provide easy access to the electronic health record and Web resources or to serve as additional PACS "monitors," he said.

"This allows the radiologists to have an eight-monitor setup, or even more, for a fraction of the cost [of purchasing more monitors]," Gupta said.

VR and AR workstations could enable deployment of fully customizable, virtual workstations that are mobile and have a small physical footprint. This could change how and where radiology is practiced, he said.

"Using this kind of an approach, we can have a fully customizable utility that can be based on user preferences such as hanging protocols or for how multiple studies are presented," Gupta said.

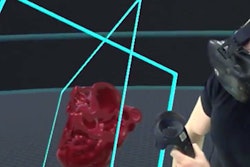

What's more, VR and AR workstations could provide an intuitive stereoscopic 3D viewer that can dynamically zoom, scale, and rotate images. Imaging could be perceived in real space by users, who could walk around a virtual object to appreciate subtle anatomic relationships.

Enhancing interventional procedures

Importantly, VR and AR technologies offer significant potential for interventional procedures, including providing simulation capabilities for training. VR and AR simulation centers could allow trainees to orient to the environment and the equipment, as well as to experience a variety of treatment scenarios and roles in a healthcare setting, Gupta said.

These technologies can also yield an untethered display device that offers touch-free manipulation of images during image-guided procedures. In ultrasound-guided procedures, for example, traditional monitors do not allow the operator's hands and the display to be in the same field-of-view, whereas AR could provide an unobtrusive, wireless, and readily available display, he said. This would allow users to focus on the procedure field.

"Now the operator can see not only the patient where the physical needle is, but also the ultrasound imaging screen [to see] where the needle is in the patient," Gupta said.

AR optical head-mounted display devices could facilitate touch-free and sterile access to the patient chart in the procedure suite, he said. Controlled by voice and/or gestures, these devices could be used to review preprocedural images, look up references, and control imaging equipment.

In addition, the devices could be used to record first-person-perspective videos for training and quality assurance purposes, according to Gupta. Medicolegal and patient privacy protection implications would need to be addressed, however.

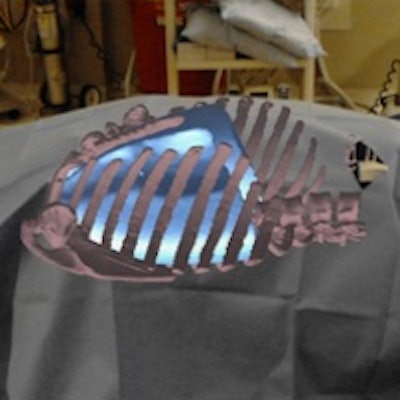

VR and AR technologies could also be useful in CT-guided interventional procedures, which currently require interpreting 3D anatomical relationships from 2D images. These techniques could provide in vivo projection of medical images and offer an intuitive way to visualize 3D imaging, he said. DICOM-derived holograms could be superimposed directly onto a patient.

In this proof of concept, a CT scan of a phantom was used to create a hologram. Once registered using a paper clip as a fiducial marker, the hologram could be viewed on Microsoft HoloLens during image-guided procedures. Image courtesy of Dr. Vikash Gupta.

The technologies could potentially even augment the practice of teleradiology by allowing radiologists to have a virtual "telepresence" to consult with surgeons or other clinicians, he said.

Limitations

Currently available AR and VR technologies have extensive limitations related to their displays, processing power, and battery power, as well as their human interface and practical use, Beydoun said. But new advances are on the horizon, driven by consumer devices. The fast-growing consumer VR and AR market is expected to exceed $100 billion by 2020, he said.

Future developments involving optical head-mounted display devices include eye tracking, electroencephalogram mining, higher-resolution displays, and larger fields-of-view, Beydoun said. We may also see the display of holographic images at multiple focal points to produce a more natural and intuitive appearance.

"That's going to be very exciting because it's going to allow you to actually interact with these objects as if they are truly in the environment," he said. "What's currently being shown is an object that has been rotated and resized based on your position, not based on your distance to it and actually portraying what the actual focal point would be."

Faster processors will allow for better rendering of complex 3D models, while enhanced spatial mapping and environmental tracking capabilities will yield faster and more accurate co-registration functionality, Beydoun said. Better cameras will enhance AR tracking and first-person recordings. In addition, new display technologies will be able to obscure light and render dark colors -- including black.

Future in imaging?

Will optical head-mounted display devices become the imaging workstations of the future? Although the technology isn't quite there yet, these devices would be mobile, would have a small footprint, and could potentially offer higher functionality than current workstation environments, Beydoun said. They could also allow radiologists to have a telepresence to facilitate interdisciplinary collaboration.

"And, in conjunction with eye tracking and machine learning, you can actually potentially allow for some very impressive computer-aided detection algorithms that will make interventional radiologists, radiologists, and clinicians in general much better at their jobs," Beydoun said. "Obviously, a lot of these devices will need to be vetted to develop diagnostic standards, but it is within reach."

As for image-guided procedures, immersive VR and AR simulation apps will likely become the gold standard for procedural training, he said. In addition, accurate in vivo image navigation will shorten interventional procedure time, lowering radiation dose and potentially improving quality of care.

"Eventually, we'll end up with more accurate interventions with smaller incisions, which ... is going to hopefully decrease healthcare costs and improve the quality we provide our patients as well," he said.

Ultimately, clinical trials will be needed to assess the risks and benefits of these technologies to win U.S. Food and Drug Administration (FDA) approval. Cost, procedure time, technical success rates, postprocedural recovery duration, and complications will need to be evaluated, Beydoun said.

He predicted that within five years, however, these types of devices will be used significantly in interventional suites, and potentially within diagnostic radiology.