The photorealistic quality of cinematically rendered CT scans may reduce the amount of imaging data needed to train artificial intelligence (AI) algorithms for medical endoscopy applications, according to an August 16 accepted manuscript in Physics in Medicine and Biology.

Numerous studies have demonstrated the capacity of deep-learning algorithms to help interpret radiological images in a variety of ways, from triaging CT exams to monitoring bone lesions on PET/CT scans.

Ensuring that such algorithms perform well requires vast amounts of annotated imaging data that are often difficult to access or are simply unavailable, noted senior author Dr. Nicholas Durr from Johns Hopkins University and colleagues. One response to this barrier has been to train algorithms on synthetically created imaging data. However, synthetic data often fail to capture the realistic details necessary to train algorithms effectively.

To address this problem, the researchers trained a convolutional neural network (CNN) designed to estimate the depth of structures in the colon on endoscopy images. The network was first trained on a dataset of 200,000 synthetic endoscopy images and then fine-tuned on 1,200 cinematically rendered CT colonography scans. The group subsequently validated this training and fine-tuning approach on a set of real pig endoscopy images.
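The two-stage scheme described above (pretraining on a large synthetic set, then fine-tuning on a much smaller set of more realistic images) can be illustrated with a deliberately tiny toy. In this sketch, a one-variable linear model stands in for the depth-estimation CNN, and the two linear "rules," dataset sizes, learning rates, and step counts are all hypothetical. It shows only the shape of the training schedule, not the authors' network, data, or code.

```python
# Toy illustration of "pretrain on plentiful synthetic data, then fine-tune on
# a small, more realistic dataset". A 1-D linear model stands in for the CNN;
# the data-generating rules and all hyperparameters are hypothetical.


def make_data(n, slope, intercept):
    """n evenly spaced inputs in [0, 1] with targets from a linear rule."""
    xs = [i / (n - 1) for i in range(n)]
    return [(x, slope * x + intercept) for x in xs]


def mse(params, data):
    """Mean squared error of the linear model (w, b) on a dataset."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(params, data, lr, steps):
    """Full-batch gradient descent on mean squared error, starting from params."""
    w, b = params
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


# Stage 1 data: large "synthetic" set drawn from one rule (hypothetical numbers).
synthetic = make_data(2000, slope=2.0, intercept=0.5)
# Stage 2 data: much smaller "photorealistic" set from a shifted rule.
rendered = make_data(12, slope=2.4, intercept=0.3)
# Held-out data from the target domain (stand-in for real endoscopy images).
real_test = make_data(50, slope=2.4, intercept=0.3)

# Pretrain from scratch, then fine-tune starting from the pretrained weights.
pretrained = train((0.0, 0.0), synthetic, lr=0.5, steps=500)
finetuned = train(pretrained, rendered, lr=0.2, steps=500)

print(f"pretrained only:   test MSE = {mse(pretrained, real_test):.6f}")
print(f"after fine-tuning: test MSE = {mse(finetuned, real_test):.6f}")
```

In this toy, fine-tuning starts from the pretrained weights rather than from scratch, so the small realistic set only has to correct the shift between domains instead of teaching the whole mapping.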

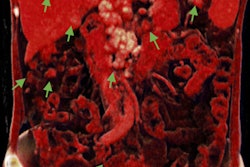

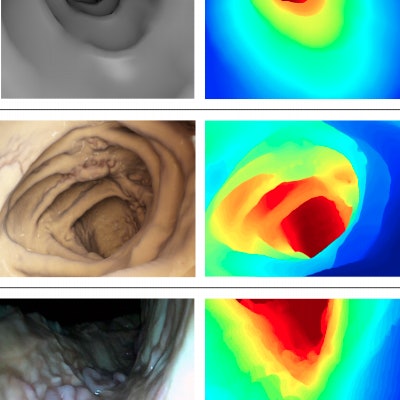

Synthetically generated endoscopy images (top), cinematically rendered CT scans (middle), and real endoscopy images (bottom). Image courtesy of Dr. Faisal Mahmood and Dr. Nicholas Durr.

The researchers used cinematic rendering technology (syngo.via VB20, Siemens Healthineers) to enhance the depth and shape perception of the CT colonography scans, reasoning that the more realistic images would make training the CNN more efficient.

"We hypothesize that networks trained on synthetic medical data, that might not have previously adapted to real data, would generalize better if fine-tuned using cinematically rendered photorealistic data ... [and] require less amount of training data," the authors wrote.

Overall, fine-tuning the CNN with cinematically rendered images, on top of standard training, reduced depth-estimation error in the colon by 57.9% for the synthetic endoscopy images and by 27.5% for the real pig endoscopy images, compared with conventional training alone.

Errors in depth estimation with deep-learning algorithm

| Image type | Training on synthetic endoscopy images only | Training on synthetic endoscopy images + cinematically rendered images |
|---|---|---|
| Synthetic colon | 39.4% | 16.6% |
| Real pig colon | 41.1% | 29.8% |
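The relative reduction in error for each image type can be recomputed directly from the table values as (baseline error minus fine-tuned error) divided by baseline error. The short sketch below does that arithmetic; the dictionary and the helper name `relative_reduction` are illustrative only.

```python
# Depth-estimation error rates (percent) from the table:
# (training on synthetic images only, with cinematically rendered fine-tuning)
errors = {
    "Synthetic colon": (39.4, 16.6),
    "Real pig colon": (41.1, 29.8),
}


def relative_reduction(before, after):
    """Percentage drop in error relative to the baseline error."""
    return 100 * (before - after) / before


for image_type, (before, after) in errors.items():
    print(f"{image_type}: {relative_reduction(before, after):.1f}% lower error")
```

With the table's rounded values, this prints about 57.9% for the synthetic colon and 27.5% for the real pig colon.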

Using cinematically rendered CT data to help train the CNN led to several additional advantages over the standard training method:

- Better adaptation to real medical images, as demonstrated by more robust performance

- Less data needed for effective training

- Ability to generalize the CNN for broader clinical application

"We demonstrate one of the first successful uses of cinematically rendered data for generalizing a network trained on synthetic data to real data. ... We show that a synthetic data-driven [CNN] model can be successfully trained for accurate depth estimation on real tissue given no real optical endoscopy training data," the authors wrote.