Artificial intelligence (AI) algorithms can yield impressive results when tested on images similar to those used in their training. But how well do they do on images acquired at other hospitals and on different patient populations? Not always as well, according to research published online November 6 in PLOS Medicine.

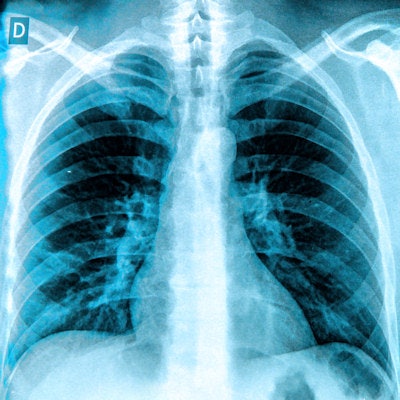

Senior author Dr. Eric Oermann of Mount Sinai Health System in New York City and colleagues trained individual convolutional neural network (CNN) algorithms to detect pneumonia on chest x-rays, using separate datasets from different institutions as well as a pooled dataset. When they evaluated these models on the other datasets in the study, the researchers found that the algorithms performed significantly worse in three of the five comparisons.

Dr. Eric Oermann from Mount Sinai Health System.

The results show that claims by researchers or companies that their model achieves a certain level of performance can't be taken at face value, according to Oermann, an instructor in the department of neurosurgery.

"The reality is they have a model that does 'X' for data sampled from a certain population that they trained on," he told AuntMinnie.com. "If a given hospital, clinic, or practice has significant variation in their population's makeup or imaging protocols, then it is highly likely that this model doesn't do 'X' and perform as it is supposed to. Don't trust any algorithms without validating them on your local data, patients, or protocols!"

Overly optimistic results?

Early results from using CNNs to diagnose disease on x-rays have been promising, but researchers haven't yet shown that algorithms trained on x-rays from one hospital or group of hospitals will work just as well at other hospitals, according to Oermann and colleagues. The team hypothesized that an algorithm that performs well on a test set drawn from one patient population won't necessarily do as well on other populations because of population-level variance, Oermann said.

"We noticed all of this work with weakly supervised [chest x-ray] classifiers, and we suspected that the reported results might be overly optimistic since the bulk of noise in medical datasets is nonrandom (acquisition protocols, patient positioning, etc.)," he said.

In a cross-sectional study, the researchers trained separate CNNs, each on one of three datasets:

- 112,120 chest x-rays from 30,805 patients from the U.S. National Institutes of Health (NIH) Clinical Center

- 42,396 chest x-rays from 12,904 patients from Mount Sinai Hospital (MSH)

- A pooled dataset including images from both the NIH and MSH

They then compared the performance of each CNN on four test datasets: an NIH-only test set, an MSH-only test set, a joint NIH-MSH test set, and a separate dataset from the Indiana University (IU) Network for Patient Care that included 3,807 chest x-rays from 3,683 patients. They measured performance using receiver operating characteristic (ROC) analysis, calculating the area under the ROC curve (AUC) for each test set.
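For readers unfamiliar with the metric: the AUC equals the probability that a randomly chosen pneumonia-positive image receives a higher model score than a randomly chosen negative image, with ties counting half. The minimal Python sketch below implements that pairwise (Mann-Whitney) definition; the function name `auc`, the 1/0 label encoding, and the toy scores are illustrative assumptions, not the researchers' code.

```python
from typing import Sequence


def auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Area under the ROC curve via the pairwise Mann-Whitney definition.

    labels: 1 = pneumonia-positive, 0 = negative (hypothetical encoding).
    scores: model outputs; a higher score means "more likely pneumonia".
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    # Count positive-negative pairs the model orders correctly; ties count half.
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Made-up scores for 3 positive and 3 negative images.
    y = [1, 1, 1, 0, 0, 0]
    s = [0.9, 0.7, 0.4, 0.6, 0.3, 0.1]
    print(auc(y, s))  # 8 of 9 pairs ordered correctly, about 0.889
```

The pairwise loop is quadratic, which is fine for illustration; on test sets with tens of thousands of images, a rank-based (sort-once) formulation gives the same value much faster.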

In three of five comparisons, an algorithm performed significantly worse on chest x-rays from external hospitals than on x-rays from the same source as its training data, the researchers found. For example, the algorithm trained on the NIH dataset had an AUC of 0.750 on the NIH test set, but that fell to 0.695 on the MSH test set and to 0.725 on the IU dataset.

Performance (AUC) of CNNs for detecting pneumonia on chest x-rays

| Training dataset | NIH-only test set | MSH-only test set | IU test set | Joint NIH-MSH test set |
|---|---|---|---|---|
| Algorithm trained only on NIH dataset | 0.750 | 0.695* | 0.725 | 0.773 |
| Algorithm trained only on MSH dataset | 0.717* | 0.802 | 0.756 | 0.862 |

\*Statistically significantly lower than the same algorithm's AUC on its own institution's test set.
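The size of each drop can be read off the table by subtracting an algorithm's external AUC from its AUC on its own institution's test set. The short Python sketch below does that arithmetic for the two rows shown; the dictionary layout and function name are illustrative choices, and the joint NIH-MSH column is left out because it mixes images from the training institution with external ones.

```python
# AUC values copied from the table (outer key: training site, inner key: test site).
AUC = {
    "NIH": {"NIH": 0.750, "MSH": 0.695, "IU": 0.725},
    "MSH": {"NIH": 0.717, "MSH": 0.802, "IU": 0.756},
}


def generalization_gaps(table: dict[str, dict[str, float]]) -> dict[tuple[str, str], float]:
    """Internal AUC minus external AUC for every (training site, external test site) pair."""
    gaps = {}
    for train_site, row in table.items():
        internal = row[train_site]  # AUC on the model's own-site test set
        for test_site, value in row.items():
            if test_site != train_site:
                gaps[(train_site, test_site)] = round(internal - value, 3)
    return gaps


if __name__ == "__main__":
    for (train, test), gap in generalization_gaps(AUC).items():
        print(f"trained on {train}, tested on {test}: AUC lower by {gap:.3f}")
```

A raw difference alone does not say whether a drop is statistically significant; that requires a test that accounts for how much an AUC estimate varies from sample to sample.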

The results supported the researchers' hypothesis, Oermann said.

"In some ways, this is a trivial statistical point, but what makes this study interesting is that it held in our actual experiments where there was a significant performance loss in three out of five of the test sites," he said.
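Whether a given drop counts as "significant" depends on sampling variability. The article does not say which statistical test the researchers used, so the Python sketch below shows one generic approach rather than theirs: a percentile bootstrap confidence interval for the difference in AUC between two independent test sets, resampling positives and negatives separately so every resample contains both classes. The function names, the synthetic Gaussian scores, and the 1,000 resamples are all assumptions for illustration; analytic methods such as DeLong's test are a common alternative for comparing AUCs.

```python
import random


def auc(labels, scores):
    """Rank-based AUC (Mann-Whitney U), averaging ranks over tied scores."""
    pairs = sorted(zip(scores, labels))
    ranks = [0.0] * len(pairs)
    i = 0
    while i < len(pairs):
        j = i
        while j + 1 < len(pairs) and pairs[j + 1][0] == pairs[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # 1-based average rank for this tie group
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        i = j + 1
    n_pos = sum(label for _, label in pairs)
    n_neg = len(pairs) - n_pos
    rank_sum = sum(r for r, (_, label) in zip(ranks, pairs) if label == 1)
    return (rank_sum - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


def stratified_resample(rng, labels, scores):
    """Resample positives and negatives separately, with replacement."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    boot_pos = [rng.choice(pos) for _ in pos]
    boot_neg = [rng.choice(neg) for _ in neg]
    return [1] * len(boot_pos) + [0] * len(boot_neg), boot_pos + boot_neg


def bootstrap_auc_diff(internal, external, n_boot=1000, seed=0):
    """Point estimate and 95% percentile interval for AUC(internal) - AUC(external)."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(n_boot):
        yi, si = stratified_resample(rng, *internal)
        ye, se = stratified_resample(rng, *external)
        diffs.append(auc(yi, si) - auc(ye, se))
    diffs.sort()
    lo = diffs[int(0.025 * n_boot)]
    hi = diffs[min(n_boot - 1, int(0.975 * n_boot))]
    return auc(*internal) - auc(*external), lo, hi


def simulate(rng, n, shift):
    """Hypothetical test set: half positives, whose scores are shifted upward by `shift`."""
    labels = [i % 2 for i in range(n)]
    scores = [rng.gauss(shift if y else 0.0, 1.0) for y in labels]
    return labels, scores


if __name__ == "__main__":
    rng = random.Random(42)
    internal = simulate(rng, 200, shift=1.0)  # synthetic "home" test set
    external = simulate(rng, 200, shift=0.6)  # synthetic "external" test set
    diff, lo, hi = bootstrap_auc_diff(internal, external)
    print(f"AUC drop {diff:.3f}, 95% bootstrap CI [{lo:.3f}, {hi:.3f}]")
```

If the interval excludes zero, the drop is unlikely to be explained by sampling noise alone at roughly the 5% level; an interval that straddles zero means the data cannot distinguish the drop from chance.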

More generalizable algorithms

It isn't easy to produce algorithms that perform as well at other institutions as they do on data from the institutions where they were trained.

"Training on datasets that are more representative of the full range of variation in radiology patients, acquisition protocols, and so forth is probably the most straightforward -- if most difficult -- way to obtain more generalizable algorithms," Oermann said. "Other mathematical techniques -- transfer learning, regularization terms, and so forth -- that regularize deep-learning models can [also] help."
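Oermann mentions regularization terms only in passing. As a generic illustration, not the study's method, an L2 penalty adds λ·Σw² to the training loss, which discourages large weights and is one common way to reduce overfitting to the training data. The Python sketch below computes binary cross-entropy plus such a penalty for a simple logistic model; the function names, toy weights and features, and the default λ = 0.01 are assumptions.

```python
import math
from typing import Sequence


def sigmoid(z: float) -> float:
    """Numerically stable logistic function (avoids overflow for large |z|)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def l2_regularized_loss(
    weights: Sequence[float],
    bias: float,
    features: Sequence[Sequence[float]],
    labels: Sequence[int],
    lam: float = 0.01,
) -> float:
    """Mean binary cross-entropy of a logistic model plus lam * sum(w^2).

    The bias is left out of the penalty, as is conventional.
    """
    eps = 1e-12  # guards against log(0)
    total = 0.0
    for x, y in zip(features, labels):
        p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
        total += -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))
    data_loss = total / len(labels)
    penalty = lam * sum(w * w for w in weights)
    return data_loss + penalty


if __name__ == "__main__":
    # Hypothetical toy data: two examples with two features each.
    w, b = [0.5, -0.25], 0.0
    X, y = [[1.0, 2.0], [2.0, 0.0]], [1, 0]
    print(l2_regularized_loss(w, b, X, y, lam=0.0))   # data term only
    print(l2_regularized_loss(w, b, X, y, lam=0.01))  # plus 0.01 * (0.25 + 0.0625)
```

In deep-learning frameworks the same idea is usually applied through an optimizer's weight-decay setting rather than written out by hand.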

The ultimate take-home message from their research, though, is that radiologists need to play a large role in developing and validating these AI tools, Oermann said.

"We know that deep-learning techniques are brittle, but we can only really find out how brittle by testing them extensively," he said.