A state-of-the-art bone age deep-learning (DL) algorithm had clinically significant errors when presented with clinically encountered imaging variations, suggesting lack of clinic-readiness for real-world deployment, according to research presented November 27 at RSNA in Chicago.

The group, from the University of Maryland Medical Intelligent Imaging (UM2ii) Center at the University of Maryland School of Medicine, tested how the DL model performed on altered images that simulated real-world variations. While the model generalized well to external data, it made inconsistent predictions for images that had undergone simple transformations reflective of clinical variations in image processing.

"Deep learning models for ... image diagnosis have been shown to lack robustness for common clinical variations of images. Without proper robust testing, deploying these deep learning models in real-world clinical use risks relaying incorrect or misleading predictions to the clinicians in practice," noted presenter Samantha Santomartino, medical student at UM2ii.

Although DL models for bone age prediction have been shown to perform similarly to radiologists, their robustness to real-world image variation has not been rigorously evaluated, according to Santomartino. The group therefore wanted to evaluate the robustness of a cutting-edge bone age DL model to real-world variations in images by applying computational "stress tests."

The results indicated that DL models may not perform as expected in the real world and that they should be thoroughly stress tested prior to deployment in order to determine if they are "clinic ready."

The team evaluated the winning DL model of the 2017 RSNA Pediatric Bone Age Challenge (concordance of 0.991 with radiologist ground truth) from the company 16Bit. They used an internal test set of 1425 images from the RSNA Challenge, which had been used to develop the model, and an external dataset of 1202 images from the Digital Hand Atlas (DHA) that the model had never seen before. At the time of the study, the 16Bit DL model was free and available for public use on browser applications.

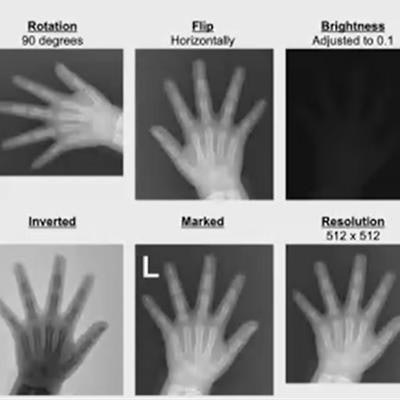

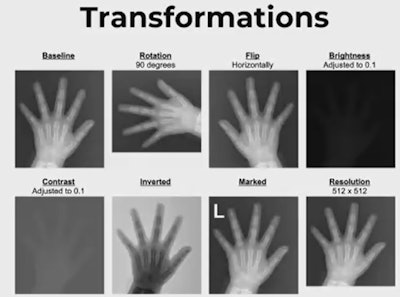

The researchers applied simple transformations to each test image to simulate the following real-world variations:

- Rotations

- Flips

- Brightness adjustments

- Contrast adjustments

- Inverted pixels

- Addition of a standard radiological laterality marker

- Resolution changes from a baseline of 1024x1024
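To make the list above concrete, here is a minimal sketch of what such transformations could look like in code. It is not the researchers' pipeline: the function names, the 8-bit grayscale representation as nested lists, and the parameter values are all assumptions chosen for illustration.

```python
from typing import Dict, List

# Assumption: an image is a list of rows of 8-bit grayscale values (0-255).
Image = List[List[int]]


def _clip(value: float) -> int:
    """Round and clamp a value to the valid 8-bit range."""
    return max(0, min(255, int(round(value))))


def rotate_180(img: Image) -> Image:
    """Rotate by 180 degrees: reverse the row order and each row."""
    return [row[::-1] for row in img[::-1]]


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def adjust_brightness(img: Image, offset: int) -> Image:
    """Add a constant offset to every pixel."""
    return [[_clip(p + offset) for p in row] for row in img]


def adjust_contrast(img: Image, factor: float) -> Image:
    """Scale pixel values around mid-gray (128)."""
    return [[_clip(128 + factor * (p - 128)) for p in row] for row in img]


def invert(img: Image) -> Image:
    """Invert pixel intensities."""
    return [[255 - p for p in row] for row in img]


def downsample(img: Image, step: int) -> Image:
    """Reduce resolution by keeping every `step`-th row and column."""
    return [row[::step] for row in img[::step]]


def add_marker(img: Image, size: int, value: int = 255) -> Image:
    """Paint a bright square in the top-left corner as a stand-in
    for a laterality marker (hypothetical placement and shape)."""
    out = [row[:] for row in img]
    for r in range(min(size, len(out))):
        for c in range(min(size, len(out[r]))):
            out[r][c] = value
    return out


def stress_variants(img: Image) -> Dict[str, Image]:
    """Build one transformed copy per variation; parameters are illustrative."""
    return {
        "rotate_180": rotate_180(img),
        "flip_horizontal": flip_horizontal(img),
        "brighter": adjust_brightness(img, 100),
        "higher_contrast": adjust_contrast(img, 2.0),
        "inverted": invert(img),
        "marker": add_marker(img, 1),
        "half_resolution": downsample(img, 2),
    }
```

Each variant would then be passed to the model alongside the untransformed image, so that predictions can be compared image by image.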

They then performed computational "stress tests" by comparing mean absolute differences (MAD) of the model's predictions on baseline (untransformed) vs. transformed images with t-tests. In the external dataset, they also compared proportions of clinically significant errors (those that would change the clinical diagnosis) using chi-squared tests. The internal dataset did not report chronological age, precluding evaluation of clinically significant errors.
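The comparison described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the study's analysis code: MAD is taken here as the mean absolute difference between predicted and reference bone age, the t-test is assumed to be paired on per-image absolute errors (only the statistic is computed), and the chi-squared test is a plain 2x2 test without continuity correction. All function names are hypothetical.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def mean_absolute_difference(predicted: Sequence[float],
                             reference: Sequence[float]) -> float:
    """MAD between predictions and reference values (e.g. in months)."""
    return mean(abs(p - r) for p, r in zip(predicted, reference))


def paired_t_statistic(a: Sequence[float], b: Sequence[float]) -> float:
    """Paired t statistic for two equally long samples (assumed pairing:
    per-image absolute error on transformed vs. baseline images).
    Requires at least two pairs and non-identical differences."""
    diffs = [x - y for x, y in zip(a, b)]
    return mean(diffs) / (stdev(diffs) / math.sqrt(len(diffs)))


def chi_squared_2x2(errors_a: int, n_a: int,
                    errors_b: int, n_b: int) -> Tuple[float, float]:
    """Chi-squared test (1 degree of freedom, no continuity correction)
    comparing error proportions in two groups. Returns (statistic, p).
    Assumes both outcomes (error / no error) occur at least once overall."""
    observed = [[errors_a, n_a - errors_a],
                [errors_b, n_b - errors_b]]
    total = n_a + n_b
    row_totals = [n_a, n_b]
    col_totals = [errors_a + errors_b, total - errors_a - errors_b]
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed[i][j] - expected) ** 2 / expected
    # With 1 degree of freedom, the upper tail equals erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value
```

With made-up counts of 10 errors in 100 baseline images and 40 errors in 100 transformed images, `chi_squared_2x2(10, 100, 40, 100)` gives a statistic of 24; these numbers are illustrative only and are not taken from the study.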

There was no significant difference in the MAD of the 16Bit model between baseline internal and external test images, indicating good generalizability. On computational stress testing, the model had significantly higher MAD and significantly higher proportions of clinically significant errors pertaining to skeletal maturity on transformed images than on baseline images for all image transformation groups (p < 0.05 for all) except the addition of laterality markers.

For example, for 180° rotations, predicted bone age was significantly lower (97.8 months vs. 135.2 months, p < 0.0001) and MAD was significantly higher (45.2 months vs. 6.9 months, p < 0.0001), with 30% more clinically significant errors (p < 0.0001) compared with baseline images.

Santomartino told delegates that it was important to use clinically relevant performance metrics when evaluating algorithms.

Answering a question from a 16Bit representative about how often the study's transformations occur in clinical practice and how clinically relevant they are, Santomartino said that while some of the transformations the group tested were extreme, it was not uncommon in clinical practice to see a rotated image, or two images where one was supersaturated and one was not. Some of the milder transformations are more clinically relevant than the extreme ones, she noted, and the group saw differences in both MAD and clinical diagnoses even for those milder transformations.