In a study described as a “competition between radiologists,” participants tasked with identifying abnormal findings on chest x-rays performed better with AI assistance than without it – though not by much and not in all cases, according to a group in Nanjing, Jiangsu, China.

Significantly, however, AI assistance allowed participants to cut reading times by roughly 41%, noted lead author Lili Guo, MD, of Nanjing Medical University, and colleagues.

“This competition demonstrated the value of AI in detecting and localizing many pathologies in chest radiographs by simulating the real work situations of radiologists,” the group wrote. The study was published February 8 in the Journal of Imaging Informatics in Medicine.

In China – as elsewhere – radiologists face heavy daily workloads of reading patient x-rays, and this is especially the case for young doctors, the authors noted. Yet diagnosing findings on chest x-rays requires experience and time, and reducing subjective human error while improving efficiency remains a major goal in the field, they added.

Thus, to test whether a deep-learning AI algorithm the group previously developed could be clinically useful, the researchers recruited 111 radiologists to participate in a reading study. Fifty were junior radiologists (less than 6 years of working experience), 32 were intermediate radiologists (6-14 years of working experience), and 29 were senior radiologists (at least 15 years of working experience).

The deep-learning algorithm was designed to concurrently detect the presence of normal findings and 14 different abnormalities. Each radiologist interpreted 100 chest x-rays curated from images from 4,098 patients who had been admitted to their hospital: a set of 50 images without AI assistance (control group) and a set of 50 images with AI assistance (test group).

Participants started with 14 points for each image read, with one point deducted for each incorrect answer and nothing deducted for a correct answer. Final mean scores were calculated based on performance on each set.
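The scoring scheme can be illustrated with a short Python sketch. This is not the study's code: the function name `set_score` and the per-image error counts are hypothetical, and the sketch assumes that no more than 14 points can be deducted from any single image.

```python
POINTS_PER_IMAGE = 14  # starting score per image, as described in the article


def set_score(errors_per_image):
    """Total score for one reading set.

    errors_per_image: number of incorrect answers on each image
    (hypothetical input; the article does not give per-image data).
    """
    total = 0
    for errors in errors_per_image:
        # Assumption: deductions cannot exceed the 14-point starting score.
        if not 0 <= errors <= POINTS_PER_IMAGE:
            raise ValueError("errors per image must be between 0 and 14")
        total += POINTS_PER_IMAGE - errors
    return total


# A perfect reading of a 50-image set gives the maximum possible score.
max_score = set_score([0] * 50)

# A reader who makes two incorrect answers on every image.
example_score = set_score([2] * 50)
```

Under these assumptions, the maximum score for a 50-image set is 700 points, which gives a sense of scale for the mean scores reported by the group.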

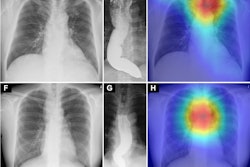

The AI system output the bounding boxes and labels of the lesions to assist radiologists. Image courtesy of the Journal of Imaging Informatics in Medicine.

According to the analysis, the average score of the 111 radiologists was 597 (587-605) in the control group and 619 (612-626) in the test group (p < 0.001). The time spent by the 111 radiologists was 3,279 seconds (54.65 minutes) without AI assistance and 1,926 seconds (32.1 minutes) with AI assistance.
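To put these figures in proportion, the following Python sketch computes the relative change in reading time and in average score. The helper `relative_change` is a hypothetical name, and the 700-point maximum is an inference from the scoring scheme (14 points for each of 50 images) rather than a figure stated by the authors.

```python
def relative_change(before, after):
    """Fractional change from `before` to `after` (negative means a decrease)."""
    return (after - before) / before


# Figures reported in the article.
time_without_ai, time_with_ai = 3279, 1926  # seconds
score_without_ai, score_with_ai = 597, 619  # average scores

time_change = relative_change(time_without_ai, time_with_ai)
score_change = relative_change(score_without_ai, score_with_ai)

# Assumption: maximum score per set is 14 points x 50 images.
MAX_SCORE = 14 * 50

print(f"Reading time change: {time_change:.1%}")
print(f"Average score change: {score_change:.1%}")
print(f"Share of maximum without AI: {score_without_ai / MAX_SCORE:.1%}")
print(f"Share of maximum with AI: {score_with_ai / MAX_SCORE:.1%}")
```

On these numbers, reading time fell by about 41% while the average score rose by just under 4%, consistent with the article's point that the efficiency gain was larger than the accuracy gain.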

The radiologists performed better with AI assistance in identifying normal findings, pulmonary fibrosis, heart shadow enlargement, mass, pleural effusion, and pulmonary consolidation, the group wrote.

However, the radiologists performed better without AI assistance in identifying aortic calcification, calcification, cavity, nodule, pleural thickening, and rib fractures, they noted.

“The performance of all radiologists with and without AI assistance showed that AI improved the diagnostic accuracy of the doctors,” the group wrote.

The authors also noted that the average scores of intermediate and senior doctors were significantly higher with AI assistance than without it (p < 0.05), while the average scores of junior doctors were similar in both conditions. They suggested that this was because the AI generates false positives and false negatives, which are more difficult for junior doctors to rule out.

“Further studies are necessary to determine the feasibility of these outcomes in a prospective clinical setting,” the group concluded.
