The privacy of patient data in AI models trained on chest x-rays can be guaranteed – importantly, without significantly reducing the accuracy of models on large “real-world” data sets, according to a study published March 14 in Communications Medicine.

A team in Germany used an approach called “differential privacy” when training large-scale AI models and then evaluated its effects on model performance. They found high accuracy was attainable, despite the stringent privacy guarantees, noted lead authors and doctoral students Soroosh Tayebi Arasteh, of University Hospital RWTH Aachen, and Alexander Ziller, of the Technical University of Munich.

“Our study shows that – under the challenging realistic circumstances of a real-life clinical dataset – the privacy-preserving training of diagnostic deep-learning models is possible with excellent diagnostic accuracy and fairness,” the group wrote.

Most, if not all, currently deployed machine-learning AI models are trained without any formal technique to preserve privacy of the data, the researchers noted. Although an approach called federated learning has been proposed, even it has been shown to be vulnerable to potential malicious attacks through reverse engineering, they wrote.

Thus, formal privacy-preservation methods are required to protect the patients whose data is used to train diagnostic AI models. In this regard, the gold standard is a technique called differential privacy, according to the authors. In brief, differential privacy involves introducing randomness (mathematical “noise”) to data used to train deep-learning models so that individual data points can’t be distinguished.
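For readers who want a concrete picture of that noise, the sketch below shows one widely used way of applying differential privacy during training: each patient's contribution to a model update is capped, and random Gaussian noise is added before the update is averaged. This is an illustration only. The article does not spell out the study's exact procedure, and the function name `private_gradient` and the parameters `clip_norm` and `noise_multiplier` are hypothetical names chosen for this example.

```python
import math
import random


def private_gradient(per_example_grads, clip_norm, noise_multiplier, rng):
    """Return a noisy average gradient for one training batch.

    per_example_grads: list of gradients, one list of floats per example.
    clip_norm, noise_multiplier: hypothetical tuning knobs for this sketch.
    rng: a random.Random instance, passed in so results are repeatable.
    """
    n_params = len(per_example_grads[0])
    total = [0.0] * n_params

    # Step 1: cap each example's influence by rescaling its gradient
    # so that its L2 norm is at most clip_norm.
    for grad in per_example_grads:
        norm = math.sqrt(sum(g * g for g in grad))
        scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
        for i, g in enumerate(grad):
            total[i] += g * scale

    # Step 2: add Gaussian noise whose size is tied to the cap, so no
    # single example can be picked out of the summed update.
    sigma = noise_multiplier * clip_norm
    noisy = [t + rng.gauss(0.0, sigma) for t in total]

    # Step 3: average over the batch.
    return [v / len(per_example_grads) for v in noisy]


if __name__ == "__main__":
    # Toy gradients standing in for three patients' images.
    grads = [[3.0, 4.0], [0.3, 0.4], [-6.0, 8.0]]
    print(private_gradient(grads, clip_norm=1.0, noise_multiplier=1.1,
                           rng=random.Random(0)))
```

A larger `noise_multiplier` in this sketch means stronger privacy protection but a noisier update, which is the performance trade-off the researchers set out to measure.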

“To the best of our knowledge, our study is the first work to investigate the use of differential privacy in the training of complex diagnostic AI models on a real-world dataset,” the researchers added.

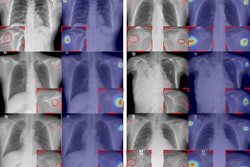

The researchers used two data sets: a large data set (n = 193,311) of high-quality clinical chest x-rays and a data set (n = 1,625) of 3D abdominal CT images, for which the task was to classify the presence of pancreatic ductal adenocarcinoma. Both data sets were retrospectively collected and manually labeled by experienced radiologists.

Next, they compared nonprivate deep convolutional neural networks and privacy-preserving models with respect to performance trade-offs.

According to the findings, while the privacy-preserving training yielded lower accuracy, it did not amplify the ability of the AI to discriminate among the x-ray images based on patient age, sex, or the presence of multiple health conditions. Significantly, these are data points that could be used to identify specific patients, they noted.

“[Differential privacy] offers the ability to upper-bound the risk of successful privacy attacks while still being able to draw conclusions from the data,” the group wrote.

Ultimately, trade-offs between model performance and privacy preservation are a matter of ethical, societal, and political debate, the researchers added.

“Our results are of interest to medical practitioners, deep-learning experts in the medical field and regulatory bodies such as legislative institutions, institutional review boards, and data protection officers,” the group concluded.

The full study is available here.